What is Change Data Capture?

Change data capture (CDC) is the process of capturing data changes made to a database as they occur and sending those changes “downstream” in real-time to at least one destination.

Change Data Capture Benefits

Change data capture became a preferred method for extracting data over batch-based extracts for ETL pipelines initially, and then ELT pipelines as well. While there are many alternatives to change data capture for data extraction, they do not provide the same level of benefits.

- Near real-time visibility - Change data capture is arguably the fastest, lowest latency method for keeping data in sync across systems. It’s commonly used for replicating data in a database cluster.

- Lowest load on source systems - Change data capture has been known to add 1-3% additional load to existing deployments, which is significantly less than the alternatives, including batch extracts. Over time the loads on target systems have decreased as well.

- Improved scalability - many batch-based pipelines can get to a point as data volumes grow where the extraction time exceeds their batch window. Change data capture enables data to be sent continuously across 24 hours at a faster rate than any database transaction rates, which allows CDC to handle just about any scale.

- Preserves all changes - Change data capture also captures all changes and deletes, which is not the case with batch extracts, which only capture the current state of data, not all the changes. Updates can write over previous changes.

- Data backfill and recovery - Change data capture, in part because it was designed for database recovery, makes it easy to access the history of all changes and use them to backfill data whenever needed.

The few but major challenges with change data capture have traditionally been the cost and complexity of implementing CDC. These have no longer been major challenges with some of the newer offerings on the market.

How Change Data Capture Works

Change data capture (CDC) works by recognizing a change event in a source data system. It then streams (or batches up and sends) changes so that a downstream system can act on that change, usually by updating a target system to reflect new information.

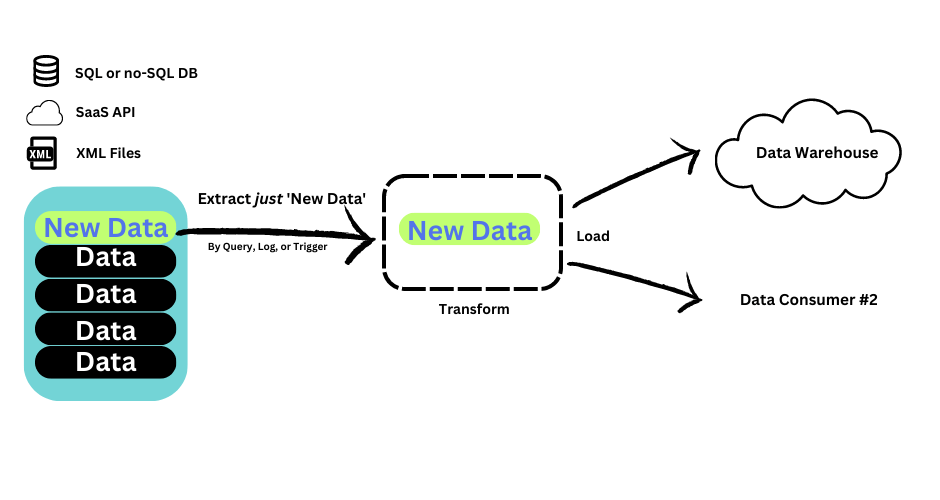

There are typically three steps with CDC:

- Detect and capture change events from a source database like PostgreSQL, MySQL, or MongoDB; or an app like Salesforce.

- Stream events directly (replication) or perform any relevant transforms (streaming ETL).

- Deliver changes to at least one target including data warehouses, data lakes, and applications.

The goal of using CDC is to do all these things with low latency* and with reduced stress on the source database compared to other methods.

Let’s look at a common scenario. Say you’re a company that manages customer records in a relational database, like PostgreSQL, and powers data analysis from a data warehouse, like Snowflake. When a customer record is updated in Postgres, CDC mechanism takes note and updates the corresponding record(s) in Snowflake.

That’s a simplified use case, but the pattern is a very common one: migrating data updates from an RDBMS to a data warehouse. (Need a refresher on different types of data storage systems? We’ve got you.)

*"Low latency" is a subjective term. Though CDC is often marketed as a real-time data solution, that’s not always the case. Depending on the method you use, there can be a bit of latency in your CDC pipeline, as we'll see.

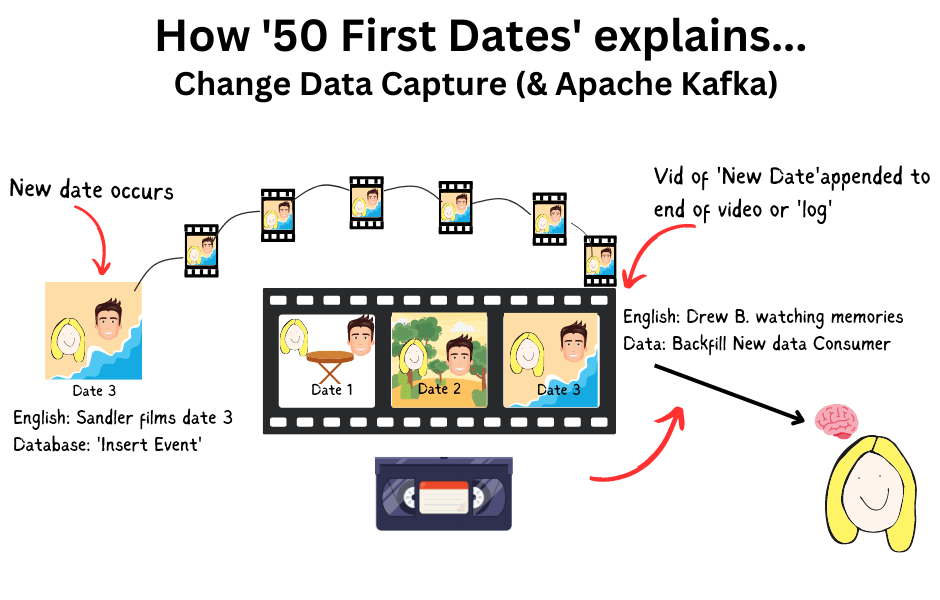

An analog example of change data capture - 50 first dates

The 2004 flick 50 First Dates starring Adam Sandler and Drew Barrymore is a great real-world, non-software example of how change data capture works.

In the movie, one of our main characters suffers from severe amnesia after an accident and is unable to retain new memories. Our other lovestruck character doesn’t lose hope, though.

Instead, he creates a videotape for her to watch each morning when she wakes up. The tape linearly summarizes her life, the news, and the major milestones. He adds on to the end of the tape each day so she is always ‘stateful’ with regard to current reality.

Our creative hopeless romantic has effectively employed an efficient, yet simple change data capture process. The videotape of events can be thought of as a database or continuous log, and new memories can be thought of as ‘append-only’ events, or changes.

- Appending the new video of each new date to the end of the videotape is the essence change data capture… an append-only exactly-once log.

- Instead of re-creating the tape from scratch each day, which would be very time-consuming and equivalent to a nightly full database export, Adam* appends onto the end of the tape.

- The videos are added in the exact order they occurred.

- When Drew* starts out knowing nothing each day, the videotape is able to backfill her knowledge automatically..

- The tape is never destroyed so the videotape "log" can be shared with others should others need to remember… this scalable process of sharing the append log is at the heart of change data capture.

*We forgot the real names of Adam Sandler and Drew Barrymore's characters and did not look them up while writing this article. It's easier this way.

Yes, this analogy breaks down in places. But at a high level, it works. Adam Sandler data puns aside for now, let’s explore what efficient change data capture means when implemented within the enterprise. This article will particularly resonate with you if your data volumes are large, growing quickly, or being synced too slowly from source to destination.

Change Data Capture Methods

The three most common ways to implement change data capture are:

- Query-based change data capture

- Change data capture using triggers, and finally

- Log-based change data capture (the preferred approach)

What is Query-Based Change Data Capture?

The first change data capture method involves simple SQL queries on tables in the source database. This is also known as polling.

Since CDC sources tend to be relational databases, administrators will be quite comfortable using SQL to manipulate tables. This makes the query method approachable for most teams.

To set this up, you create a CRON job or similar to run the query at a set interval.

The source tables must have an extra column to track when each record was last changed. This is usually a timestamp column, but incremented version numbers can be used, as well, with a bit of extra legwork.

| id | firstname | lastname | address | time_updated |

| 0001 | Joe | Shmoe | 123 Main St | 2023-02-03 15:32:11 |

To find changes to the table, you’d run a query against it to select records where the timestamp value is greater than the time at which you last ran the query. For example, if you last queried the customers table at 7 AM on February 1:

SELECT * FROM customers WHERE time_updated > ‘2023-02-01 07:00:00’;

Typically, you’d configure a recurring query at a standard time interval. The selected records would be used to update the target at that same cadence.

Advantages:

- Easy to implement: It's a simple SQL query run on a schedule.

- Permissions are simple: Only read privileges are required.

- Good fit for: Smaller and slower OLTP databases where a full audit trail isn't required.

Disadvantages:

- Add a column to tables: Adding a new column to track timestamps might not be feasible.

- Can’t capture deletes: When you completely remove a record from the table — this is known as a hard delete — the query won’t detect it. You can work around this by using soft deletes. This means you retain all records, but add a boolean deleted field. However, this isn’t usually ideal because it can bloat tables with useless records.

- Latency: This is a batch method. There will always be some lag between data updates in the source and target.

- Performance: Every time you run the query, you’re polling the entire table. With a large table, you’ll run into performance issues. This tends to be particularly problematic when your table starts fairly small and grow significantly — a common occurrence. You won’t be able to query it as often as you like, and each time you run the query, your entire system can get bogged down, sometimes for hours.

What is Trigger-Based Change Data Capture?

Databases like PostgreSQL, MySQL, and SQL Server have built-in tooling for automatically invoking a function whenever a change is made in a table. These functions, or triggers, vary a bit in these respective databases, but the high-level workflow remains the same (see Postgres Trigger Docs, MySQL Trigger, and SQL Server Triggers).

You can define a function and replicate all the change events to another table internally within the database, often called an audit table or shadow table.

Triggers are useful and popular in scenarios like:

- Complex data validation (For example, when a new row is created in table customers, a new row should also exist in table orders).

- Keeping an audit log of the database changes.

- Handling database errors.

Here comes the big BUT… Triggers cannot be used with high-velocity data.

Before log-based CDC, triggers were quite popular for change data capture. Today, they're far less common. (We challenge you to find a single Medium article or engineering blog from a respectable company detailing their enterprise trigger-based CDC at scale!)

This is likely because, if you are looking to scalably implement change data capture, trigger-based CDC is simply not the best option.

While some triggers are more costly than others, the cost on the database will be too high as a continuous deluge of triggers will add too much overhead for any dataset at scale.

Advantages:

- Real-time: Triggers capture changes immediately.

- Complete data capture: Including delete events.

- Supports metadata: For example, you can track which statement caused the change event.

Disadvantages:

- Performance: Triggers impact the performance of the source database, especially at scale. This is because each trigger creates additional write operations. This may be insignificant at first, but can become debilitating on large datasets with frequent changes.

- Getting the data out of the source is complex: Even after the trigger, you still need to get the data out of the "shadow table" in the source system. This introduces more complexity, and often latency.

- Complex with multiple tables: When you need to capture data from multiple tables, each one needs its own trigger function. The upkeep often becomes unsustainable.

What is Log-Based Change Data Capture?

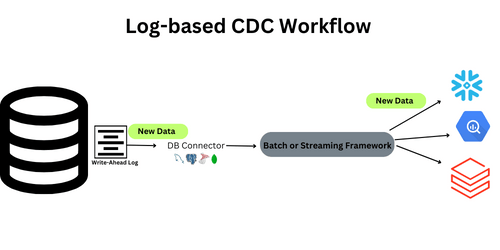

Log-based change data capture is a data design pattern in which database change events (updates, inserts, deletes) are captured from the write-ahead log (WAL) of the database (or binlog in MySQL) and delivered to downstream data consumers.

The WAL is a transactionally consistent, exactly once record of all changes in the database. It’s used to ensure data isn't lost in the event of a system failure. This makes it the perfect source capturing changes for three reasons:

- It is the fastest way to capture any change.

- It’s guaranteed to show each change exactly once

- It puts a very low load on the database because all you’re doing is a file read.

Because of these three benefits, WAL-based CDC has become the most popular approach for change data capture. .

Log-based CDC requires an additional component to process and capture change events from the WAL (unlike queries and triggers, which leverage native capabilities of the database). All three methods will also require some way to deliver events. Usually this is in the form of a message broker or other form of streaming with source and target connectors.

Implementation and configuration vary a bit as each database type has its own version of the write-ahead log. The Postgres log-based CDC or MySQL log-based CDC roughly looks like this:

Log-based CDC is the gold standard of change data capture implementations because it captures all events, in exact order, in real-time, all without adding any load to the source database. That's why most enterprise-scale solutions offering managed CDC include a connector that reads directly from the write-ahead log.

Building an end-to-end change data capture pipeline is complicated, especially at any reasonable scale and low latency. You are better off leveraging existing proprietary offerings, or extending open source than trying to build your own.

Advantages:

- Ordering and completeness: Every event (including deletes) is captured in order, and you can retain full history.

- Latency: Latency as low as milliseconds.

- Easy on the database: Doesn't increase the database load.

Disadvantages:

- Complex: Custom-coded connectors are challenging to engineer.

- Permissions: Requires more permissions to use the WAL.

Want to learn more? Gunnar Morling (lead on the Debezium project, and who has likely written more about CDC than any human alive) summed the differences between query-based and log-based methods here.

What Isn't Change Data Capture?

A nightly cron job running SELECT * and extracting the full current snapshot of the database in batch mode is not change data capture.

Yes, the changed data will ultimately be reflected in the downstream system for database replication, but, in this instance, a daily state of the data is being extracted and loaded, as opposed to all the change events. You can actually miss changes overwritten by other changes in between two batch extractions. Batch jobs also have much larger latencies and can load a database more than CDC.

Batch vs Real-time CDC: Exploring Latency

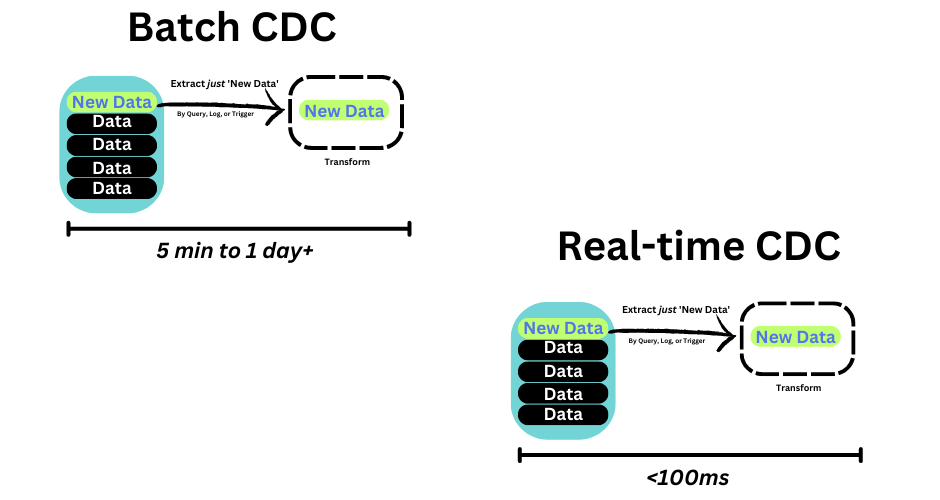

There are some examples of “batch” CDC. PostgreSQL change data, MySQL change data, or change data from any other source can be captured in one of two ways:

- Through a batch process.

- Through a real-time (streaming) process.

Now, in most modern change data capture implementations, the change data is available immediately to be sent to downstream systems like SnowFlake, BigQuery, Databricks, and beyond. The CDC pipeline is reacting instantaneously to a change event, making this real-time CDC.

But every organization's latency needs are different. Batch CDC pipelines can run once a day, or once every five minutes. Depending on the use case, that time delay might be perfectly acceptable. The cost savings of extracting new data only might be enough of a benefit to justify batch CDC.

When Should You Use Change Data Capture?

Change data capture is a hugely useful pattern for just about any modern business. It helps companies get more value from their data in a faster and more cost-effective way.

By implementing CDC on a database like Postgres or MySQL, companies not only keep the source database running more efficiently. They stream the data to analytics and production faster so data teams can have more real-time visibility, which helps drive more business value.

What analytical and operational use cases require, or are best supported by change data capture?

The best use cases are those that require real-time visibility. For these use cases, without a solid change data capture implementation, we start to see problems like:

- Someone places an order for an item that appears to be in stock from an online retailer... only to find out that it was not in stock. (The store's website was out of date with the database).

- Someone makes a financial mistake based on outdated information on their bank account.

- Fraudulent activity isn't caught in time, and the perpetrator rides off into the sunset.

- A logistics company doesn't know where their shipment is and can't make informed decisions for new orders.

You get the idea.

When Should Change Data Capture Not Be Used?

By and large, log-based change data capture will be the preferred approach to use to extracting data. But you might choose not to implement it when:

- There is no value in lower latency data feeds for the organization.

- Data volumes for the table or database are small and under no workload stress.

- You are not allowed to directly access data. This could be for internal business policies or regulatory reasons (e.g. HIPAA or PII.) You can set up transformation rules to obfuscate or delete fields in transformations, but make sure you are adhering to your data policies.

Historically speaking, we hear of two reasons teams have chosen not to implement CDC. Either:

- Legacy processes are batch based and don’t need or support CDC.

- CDC was just too much work, especially when batch worked just fine.

- We don’t have access to the WAL, only access to specific views or tables.

A Reddit thread in May of 2023 captures the above well. You can read the full discussion here.

As a final note here, if you're building a new application, it might make more sense to make it stream-native directly from production to the new application. This can be done by, say writing to Kafka, Kinesis, or Gazette.

Change Data Capture Alternatives

A Google search for how to implement change data capture in Postgres, MySQL, or another database will quickly become overwhelming. You'll find scores of potential solutions across vendors, as well as open-source, Cloud-native, and custom code implementations.

In this next section, we’ll help you sort through the noise and provide options and steps for the 4 most popular database management systems: MySQL, PostgreSQL, SQL Server, and MongoDB.

Paid & Open-Source CDC Solutions

| Solution | Summary | Pricing | Postgres & MySQL | SQL Server | Mongo | Dynamo | Firestore |

| Estuary Flow | Open-source and fully managed streaming CDC connectors for cloud with transforms in streaming SQL. | Open-source or $1/GB on incremental data each month. | X | X | X | X | X |

| Fivetran / HVR | Fully-managed batch connectors for on-prem or cloud. 5-minute syncs. | Monthly active rows. | X | X | |||

| Airbyte | Open-source batch connectors with upgradable service available. 5-minute syncs. | Open-source or enterprise pricing. | X | X | X | ||

| Confluent | Fully managed real-time CDC connector built on top of Debezium. | Priced on compute and data transfer used each month. | X | X | X | X | |

| Striim | Fully managed real-time CDC connectors for on-prem or cloud. | Priced on compute and data transfer used each month. | X | X | X | ||

| Debezium | Popular open-source project for relaying change data into Kafka. See our analysis. | NA | X | X | X | ||

| Maxwell’s Daemon | Written in Java. Outputs from the event stream. Similar to Debezium but not as popular. | NA | MySQL only |

Native CDC Solutions

MySQL & PostgreSQL

The major cloud providers each offer their own zero-ETL services. If you are using, say, AWS Aurora MySQL and only need to move data to Redshift, then these native services may be an affordable fit. Google Cloud and Microsoft also offer their own options.

Note this only allows you to move cloud-hosted MySQL change data only within these environments, and there are no features enabling transformation, governance, and more.

This Medium article provides a good overview of the pros and cons of using zero-etl.

In AWS, DMS may be an option as well, but it also has its own tradeoffs.

SQL Server

Microsoft is working on functionality to more easily move data within Azure environments.

MongoDB

MongoDB has a built-in connector sending change data into a Kafka topic. You can read more about it here.

Manual CDC Solutions

Manual CDC solutions are those discussed above (triggers, queries, and log-based)— the same methods that underly all the paid and managed tools here.

How to implement change data capture step-by-step

There are several options for implementing change data capture from different databases and applications to various targets. If you would like to implement CDC for specific targets, you can go to the following guides.

- MySQL CDC guide

- Postgres CDC guide

- SQL Server CDC guide

- DynamoDB CDC guide

- How to extract data from Firestore, and the Firestore CDC guide

Today change data capture is often used for applications just by using CDC with the underlying application database. Log-based CDC continues to be the lowest-load option for extracting data from most applications. While most applications are not designed to support CDC or event-driven capture, it is starting to occur with SaaS applications such as Salesforce.

These guides will help you get started. But even as you implement your first change data capture deployments, you should look ahead for others. Most companies end up with many CDC sources and targets over time. So you should consider a more general-purpose change data capture and streaming ETL platform such as Estuary.

No-code Real-time Change Data Capture

You might have noticed Estuary Flow as an option for real-time change data capture and streaming ETL for these sources and targets. Estuary Flow works on an ecosystem of open-source connectors, including CDC connectors we build and maintain in-house. Flow uses the log-based CDC method, but implements end-to-end change data capture in a different, more elegant way.

Flow is a no-code product built on Gazette, a distributed pub-sub streaming framework that expands the use of write-ahead logs with journals for each stream in an end-to-end streaming ETL pipeline. Flow adds the no-code source and target connectors, and the ability to implement any streaming or batch transforms and other compute needed for your data pipelines.

Benefits of Estuary Flow include...

- Many-to-many ETL pipelines that allows you to move the same data to multiple targets for multiple use cases with the same pipeline.

- Support for both streaming and batch transforms, along with the ability to use SQL and TypeScript for any type of streaming or batch compute.

- The ability to add new targets at any time, backfill historical data as needed directly from Estuary without having to re-extract from the sources.

- Exactly-once processing guarantees using Gazette's unique exactly-once semantics in real-time and batch so you don’t have to deduplicate data.

- Scales for massive changes: true elastic scale with a decoupled storage-compute architecture.

- Support for schema drift: Data is validated against schema to test for changes, which you can evolve without halting the pipeline.

You can try Flow for free here!

We welcome your questions, comments, and (friendly!) debate. Find our team on Slack or LinkedIn!

CDC Frequently asked questions (FAQ)

- What is change data capture?

Change data capture (CDC) is the process of capturing data changes made to a database as they occur and sending those changes “downstream” in real-time to at least one destination. - What are the different types of change data capture?

The different types of change data capture are: query-based, trigger-based, and log-based, which is the most preferred approach with the lowest load on systems and the lowest latency. - Does change data capture guarantee I get the data exactly once?

The write-ahead long guarantees that a chance exists exactly once. But that does not guarantee your CDC-based integration delivers exactly once. Some, like Debezium, can only guarantee at least once. Others deduplicate at the target to implement exactly once delivery. Change ordering can also be an issue. Make sure you research each vendor carefully. - What is change data capture latency?

The latency of change data capture is usually the end-to-end latency from when data changes in the source database to when the change appears in the target. The most common reasons for high latency is the time it takes to load data into a data warehouse and then run any transformations as part of ELT.