If you’ve found yourself wondering how to back up Apache Kafka with a data lake, or even whether it’s possible to use Kafka itself as a data lake, you’re not alone.

As data engineers, we’re living in an era where questions like this come up quite often. As organizations have grown, so have their data needs.

Traditionally, an organization’s data came from operational tools such as CRMs and transactional systems, among others. This data was typically generated at known intervals and had a known structure.

During this era, the analytics needs of the business could easily be satisfied by extracting, processing and storing the data in a batch manner in pre-modeled data warehouses.

However, as businesses and the data they generate have grown more complex, the volume of data and the speed at which it is generated have increased dramatically.

The formats and sources have also become more varied, with new sources such as clickstreams and sensors coming into play. It is no longer viable to work with data in a batch manner only.

Businesses are also now expecting to be able to take action on the data generated, immediately after it’s generated!

Real-time data streaming and processing is now a key value-add for businesses, but a completely new set of problems is now upon us as Data Engineers.

In this article, we explore one of the pitfalls of real-time data streaming (backfilling) and the solutions you can consider.

Real-time data streaming and processing

Real-time data processing involves extracting, relaying, and using data as soon as it’s generated. It involves:

- Streaming: Sending and receiving the data

- Processing: Transforming and aggregating the data into appropriate formats

- Storing: Storing the raw and/or processed data for analysis

- Distributing: Making the data available for analysis by downstream users

This architecture requires the following components:

- A data broker: The data (usually referred to as messages) is transmitted from source to destination by a system known as a broker. Apache Kafka, for instance, is a popular message broker.

- Storage: The emitted messages are stored for analysis, usually in a data lake. This can be an object storage solution such as AWS S3 or GCP Cloud Storage.

- A processor: The messages are then transformed and aggregated. Ideally, this needs to be done immediately after the message is received… within milliseconds.

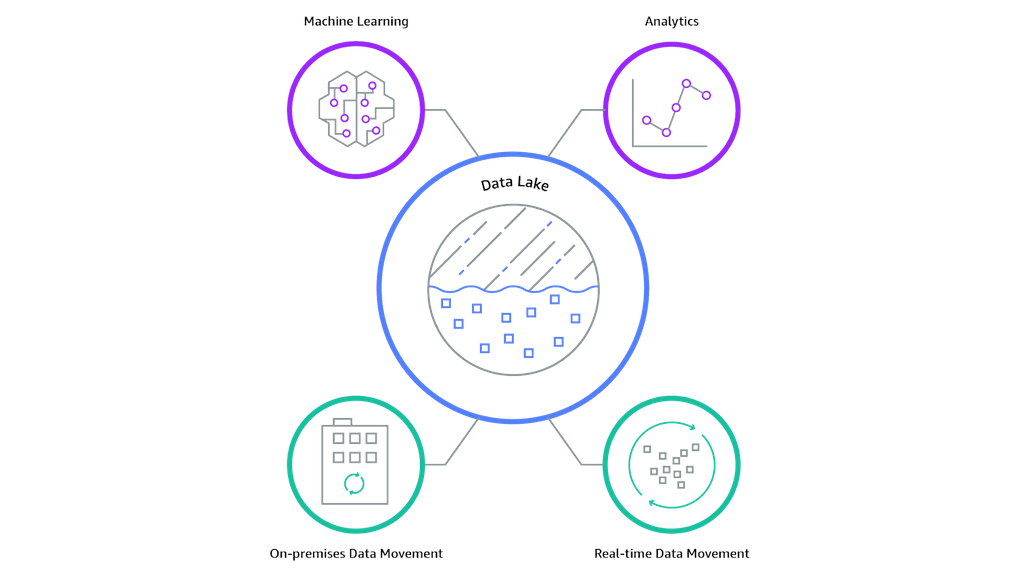

The advent of the data lake

The data lake (a term coined in 2011 by James Dixon, the then CTO of Pentaho) architecture flips the thinking of data warehouses to “store everything, figure out what’s useful later”.

In the data lake, data received from the broker, for instance, is stored in its raw format. Depending on the business’ needs, the data may then be transformed and loaded into a more traditional warehouse or may be analyzed and decisions made from the lake directly.

Data lake illustration from AWS

Data lakes have a couple of advantages:

- Faster time to implementation: Data lakes do not require upfront time-consuming modeling work from Data Engineers. You just need an S3 bucket and a way to get data there!

- Less complex pipelines: Since the data is dumped as-is, complex transformation processes are no longer needed at load time. The result is simpler pipelines that are easier to deploy, maintain, and debug.

- Flexible data formats: The data loaded into the lake doesn’t need to follow a pre-defined structure. This allows you to store anything you’d like, and figure out the rest later!

But also a couple of pitfalls:

- May not be suitable for real-time processing: For businesses that want to make decisions as soon as they have the data, dumping data into a lake first and analyzing it afterwards increases the time to action, which can get very costly.

- High management requirements: Without proper data governance policies, the lake may become hard to utilize and access.

- It’s unstructured data: The raw data loaded into the lake must be (re)structured before analysis can take place. Extra ETL pipelines will be needed to achieve this.

Introduction to Apache Kafka

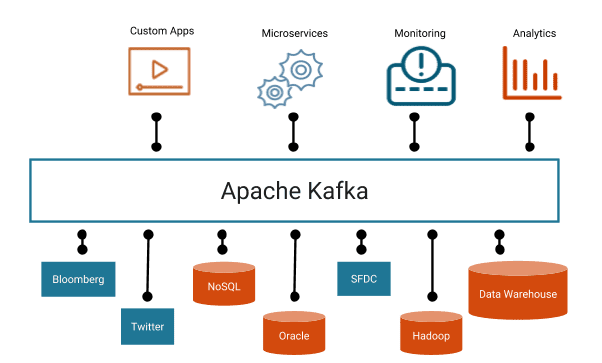

Kafka is a distributed event store and stream processing platform that was built at LinkedIn and made open source in 2011.

Kafka comprises a cluster of distributed servers (brokers) that store key-value pairs of events (messages) received from generating systems (producers). Multiple producers can write messages to a Kafka cluster. Similar messages can be put in the same stream (a topic).

The messages written into the topics can then be read by other systems and applications (consumers) which then process the data. Multiple consumers can read from multiple topics.

The distributed nature of Kafka provides a level of fault tolerance that has made it one of the most popular message brokers. Messages can be replicated across brokers; production topics are commonly configured with a replication factor of 3 (the broker default is 1). With a factor of 3, if one of the servers fails, two more still have the data.

Kafka is also popular because it supports “exactly-once” processing semantics when idempotent producers and transactions are enabled. Unlike many traditional brokers, Kafka does not delete messages after consumption; they are retained for a configurable period, which is 7 days by default. Retention can also be capped by size, so that the oldest events are removed once a partition’s log exceeds a configurable limit.
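To make the retention behaviour concrete, here is a minimal sketch in plain Python. It is a toy, in-memory stand-in for a single-partition topic, not the Kafka client API; the `Topic` class and its method names are hypothetical, and real Kafka applies retention to whole log segments rather than to individual messages.

```python
from dataclasses import dataclass, field


@dataclass
class Topic:
    """Toy, in-memory stand-in for a single-partition Kafka topic."""
    retention_seconds: float
    log: list = field(default_factory=list)  # entries: (offset, timestamp, key, value)
    next_offset: int = 0

    def produce(self, key, value, timestamp):
        # Append-only: every event gets the next offset.
        self.log.append((self.next_offset, timestamp, key, value))
        self.next_offset += 1

    def read_from(self, offset):
        # Reading never removes anything from the log.
        return [entry for entry in self.log if entry[0] >= offset]

    def enforce_retention(self, now):
        # Only events older than the retention window are dropped.
        self.log = [e for e in self.log if now - e[1] <= self.retention_seconds]


SEVEN_DAYS = 7 * 24 * 60 * 60
topic = Topic(retention_seconds=SEVEN_DAYS)
topic.produce("user-1", "login", timestamp=0)
topic.produce("user-2", "purchase", timestamp=100)

first_read = topic.read_from(0)
second_read = topic.read_from(0)  # a second consumer sees the same events

topic.enforce_retention(now=SEVEN_DAYS + 50)  # the first event has now expired
remaining = topic.read_from(0)
```

The point of the sketch is that consumption and deletion are independent: both reads return the same events, and only the retention check removes data.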

There are pros and cons to using Kafka as your message broker, but that’s outside our scope. You can read more here.

The Kafka backfill problem

Now that you understand how Kafka works, picture a case where you’re using real-time data processing for fraud detection in a transactional banking system. You’d like to run every initiated transaction through a fraud detector before executing it.

You may have an architecture that works as follows:

- A user initiates a transaction.

- The transaction’s metadata is pushed by the producer system to a Kafka topic.

- A consumer reads the transaction from the topic and runs a fraud detection algorithm on it.

- The output of the algorithm is then pushed to another Kafka topic.

- The output is read by the initial producer system which then allows or disallows the transaction from proceeding.

Now, let’s think through how you might go about the third step: the fraud detection algorithm. You might want to compare the transaction against the user’s previous behavior, e.g. transaction sizes. This means you need to compare the message just received from the Kafka topic to historical data about that user.

Or, let’s say you change the algorithm and you’d like to run it on all transactions since inception to compare the performance of both.

Or you’ve just spun up a new database and would like to load every transaction initiated since the beginning, together with the output of the algorithm.

Or you want to run heavy analytic workloads on your data while also performing fast real-time processing on new data.

These scenarios all represent one problem encountered when dealing with real-time streaming: backfilling.
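The first scenario can be sketched in a few lines of Python. This is an illustrative assumption rather than a real fraud model: the `is_suspicious` function, the z-score rule, and the threshold of 3 are all hypothetical. What matters is that the check cannot run on the incoming message alone; it needs the user’s history.

```python
from statistics import mean, pstdev


def is_suspicious(amount, history, threshold=3.0):
    """Flag a transaction whose amount is far above the user's past amounts."""
    if len(history) < 2:
        return False  # not enough history to judge
    avg = mean(history)
    spread = pstdev(history)
    if spread == 0:
        # All past amounts were identical; flag anything larger.
        return amount > avg
    # Number of standard deviations above the user's average.
    return (amount - avg) / spread > threshold


# Hypothetical past transaction amounts for one user.
past_amounts = [20.0, 25.0, 30.0, 22.0, 28.0]

typical = is_suspicious(24.0, past_amounts)
outlier = is_suspicious(500.0, past_amounts)
no_history = is_suspicious(500.0, [])
```

Note the last case: without historical data the detector has nothing to compare against, which is exactly why the history has to be available somewhere.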

So, how do you solve this? How do you design your data architecture such that both real-time and historical data are available when and as needed? Let’s explore a couple of options.

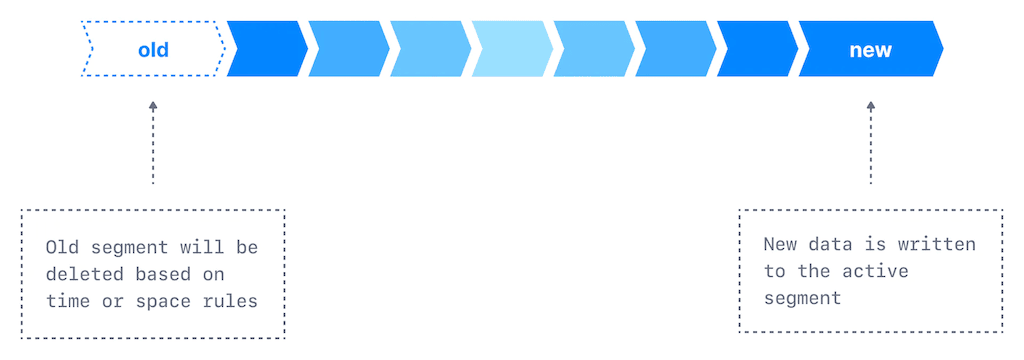

Use Kafka as your data lake

By default, Kafka retains events for 7 days, but the retention period is configurable. You can increase it to “forever” for some or all of your topics by disabling time-based retention (for example, by setting a topic’s retention.ms to -1)!

You can also take advantage of log compaction. For all key-value pairs with the same key, Kafka will retain at least the most recent one. For instance, if you’re logging all updates to a specific database column (change data capture), you retain the most recent value of that column for each key.

Illustration of Kafka retention from Conduktor
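The effect of log compaction can be illustrated with a small sketch. This is a simplified model in plain Python, not how Kafka implements compaction internally (Kafka compacts log segments in the background and may keep older values for a while); the `compact` function and the sample keys are hypothetical.

```python
def compact(log):
    """Keep only the latest value for each key, preserving the order of the survivors."""
    latest_index = {}
    for index, (key, _value) in enumerate(log):
        latest_index[key] = index  # later entries overwrite earlier ones
    return [entry for index, entry in enumerate(log) if latest_index[entry[0]] == index]


# Change events for an email column, keyed by customer ID.
changes = [
    ("customer-1", "a@example.com"),
    ("customer-2", "b@example.com"),
    ("customer-1", "a.new@example.com"),
]
compacted = compact(changes)
```

After compaction, the older value for `customer-1` is gone, so the topic holds the current state per key rather than the full change history.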

This way, any products, analytics, or workflows that you’re building that require both real-time and historical data will read everything directly from Kafka!

You can go this route, but should you?

Reasons you could

- Kafka’s fault-tolerant nature means you’re highly unlikely to lose any of your data.

- Kafka scales horizontally quite easily. You can keep adding brokers and disks to store as much data as you need.

Why you probably shouldn’t

- Treating Kafka as your source of truth will increase the operational workload required to ensure data integrity, correctness, uptime, etc. You will need a team of Kafka experts who understand how far you can push it and can make sure it’s usable as a database (or pay Confluent).

- Kafka isn’t a database. Different types of databases have optimizations to meet their specific needs. For instance, OLAP databases use advanced column-based techniques for fast analytics. You lose out on all the advantages of using an actual database.

- You’re going to spend a ton on storage. It’s quite easy to scale Kafka, but that means paying for the storage required to keep all your data, multiplied by the replication factor. When you get to TBs of data generated daily, the costs can be massive.
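A quick back-of-the-envelope calculation shows how the storage footprint grows. The function names are hypothetical, and the price used in the usage note below is a placeholder, not a quote from any provider.

```python
def retained_terabytes(daily_tb, days_retained, replication_factor):
    """Total disk footprint: every retained day is stored once per replica."""
    return daily_tb * days_retained * replication_factor


def monthly_storage_cost(total_tb, price_per_tb_month):
    """Monthly cost for a given footprint at an assumed price per TB-month."""
    return total_tb * price_per_tb_month


# 1 TB of new data per day with a replication factor of 3.
one_week = retained_terabytes(daily_tb=1, days_retained=7, replication_factor=3)
one_year = retained_terabytes(daily_tb=1, days_retained=365, replication_factor=3)
```

With these inputs, a week of retention needs 21 TB of disk, while a year needs 1,095 TB. At a placeholder price of 100 per TB-month, `monthly_storage_cost(one_year, 100)` comes to 109,500 per month, and the figure keeps growing for as long as nothing is deleted.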

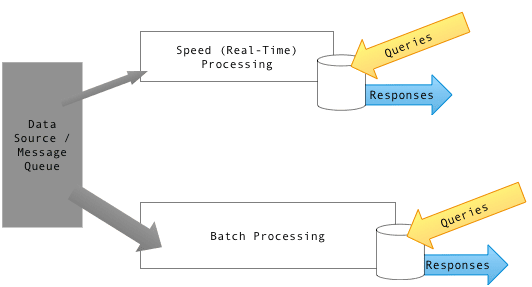

Implement the Lambda architecture

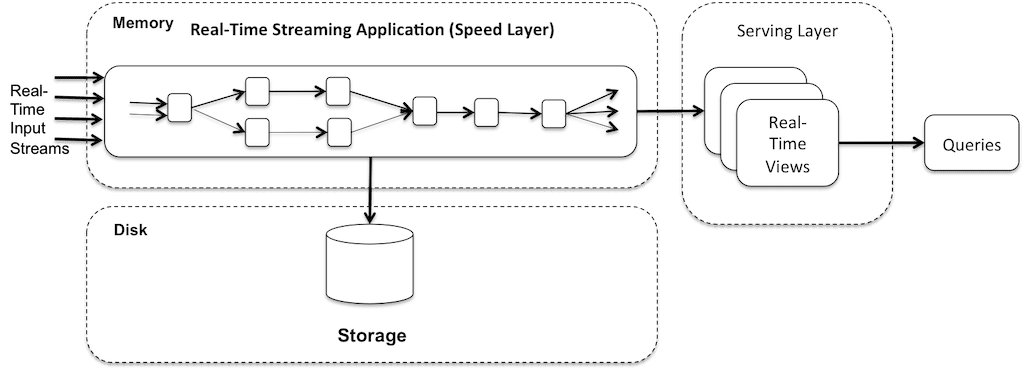

This architecture was first described by Nathan Marz in his 2011 article “How to beat the CAP theorem”. It combines streaming and batch processing, and consists of three layers:

- Batch layer

- Speed layer

- Serving layer

Incoming data from, say, a message queue is passed to the batch and speed layers simultaneously.

Lambda architecture with separate serving layers from Wikipedia

Batch layer

This layer processes incoming data in batches and stores the output in centralized storage such as a data warehouse.

It aims to have a mostly complete and accurate view of the data. Views are re-computed on all the available data with every batch computation. This layer always lags behind the speed layer.

Batch processing frameworks such as Apache Hadoop, Apache Spark, and MPP databases such as Amazon Redshift, and BigQuery are typically used in this layer.

Speed layer

This layer processes real-time data as it arrives. The output is usually stored in a NoSQL or in-memory database.

It aims to provide a low-latency view of the most recent data and not necessarily the complete view. Output from the speed layer is used to fill the missing data from the batch layer.

Stream processing frameworks such as Apache Kafka, Apache Storm, Apache Flink, Amazon Kinesis, and NoSQL stores such as Apache Cassandra are typically used here.

Serving layer

This layer is used to query output from the batch and speed layers, quickly and efficiently.

This layer can be structured as:

- A single point of access: A unified serving layer that stitches together outputs from both layers and is used to query both batch and streaming data.

- Two points of access: The layer is split into two; one to query the batch data and one to query the streaming data.
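As a rough illustration of the single-point-of-access variant, the sketch below merges a batch view with a speed view at query time. The `serve` function and the per-user counts are hypothetical, and it assumes the two views cover non-overlapping time ranges so the counts can simply be added.

```python
def serve(batch_view, speed_view):
    """Merge a complete-but-stale batch view with a fresh-but-partial speed view."""
    merged = dict(batch_view)  # copy so the batch view is not mutated
    for key, recent_count in speed_view.items():
        merged[key] = merged.get(key, 0) + recent_count
    return merged


# Transaction counts per user up to the last batch run.
batch_view = {"user-1": 120, "user-2": 45}
# Counts for events that arrived after the last batch run.
speed_view = {"user-2": 3, "user-3": 1}

combined = serve(batch_view, speed_view)
```

Even in this tiny example the merge depends on both layers counting transactions in exactly the same way, which hints at the data quality problem discussed next.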

This architecture makes more sense but there are some key problems to consider:

- Complexity: Multiple layers with different concerns are an operational nightmare. You need to keep track of separate codebases (that will inevitably have duplicated logic), and maintain and monitor multiple distributed systems.

Imagine the overhead of the single pipeline you have today, then multiply it several times over.

- Data quality is not guaranteed: Both layers need to guarantee that data is processed in the same way. Guaranteeing this is no joke.

- Re-computation of data: The batch layer must recompute data every time to generate fresh views. This may not always be necessary.

Jay Kreps, in his article “Questioning the Lambda Architecture”, summarises the issues of the Lambda architecture pretty well: “The problem with the Lambda Architecture is that maintaining code that needs to produce the same result in two complex distributed systems is exactly as painful as it seems like it would be. I don’t think this problem is fixable.”

Implement the Kappa architecture

This architecture improves on the Lambda architecture by getting rid of the batch layer.

Kappa architecture from O’Reilly

Data received is processed in real time and stored in fast, distributed storage. When historical data needs to be queried or reprocessed, it is essentially replayed as a stream through Kafka. According to Jay Kreps, “Stream processing systems already have a notion of parallelism; why not just handle reprocessing by increasing the parallelism and replaying history very, very fast?”

Therefore, both batch and streaming data are treated as streams. We keep the speed of querying real-time data while having access to historical data!

With this setup, there’s no need to keep track of duplicate codebases and systems.
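The idea can be sketched as follows, assuming the full event log is retained. The function names and sample events are hypothetical; in a real deployment the replay would be a consumer reading the topic from its earliest offset rather than a Python list.

```python
def running_totals(events):
    """Single processing function used for both live traffic and reprocessing."""
    totals = {}
    for user, amount in events:
        totals[user] = totals.get(user, 0) + amount
    return totals


def running_totals_v2(events, minimum=10):
    """A changed version of the logic that ignores small transactions."""
    return running_totals((user, amount) for user, amount in events if amount >= minimum)


# The retained log is the only source of truth; history is just an older part of the stream.
event_log = [("user-1", 50), ("user-2", 5), ("user-1", 20), ("user-2", 40)]

current_view = running_totals(event_log)
# Changing the logic means replaying the same log from the start with the new code.
reprocessed_view = running_totals_v2(event_log)
```

There is one codebase and one data path: backfilling a new view is the same operation as processing live data, only started from an earlier point in the log.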

This setup inherently solves issues with the Lambda architecture, but has its own problems:

- There are limits to how far back you can go: For scenarios where you’re querying very far back in history, the amount of data involved might not be suitable for reading as a stream. You probably want a batch-enabled system for this.

- Complex analytical needs: A real-time architecture might not be suitable for analytical questions that require complex joins, for instance.

Build your own streaming/real-time OLAP database

If you already have an OLAP database, you might consider setting up a Kappa-like architecture. You might want to set it up such that you replay events from the database into a broker.

This, however, is not very practical. OLAP databases were not built with streaming needs in mind. You might need to implement a lot of custom tooling to make the architecture work with an OLAP database.

For instance, Uber attempted to build off a Hive database but ran into issues with regard to building a replayer that would maintain order of events and not overwhelm their Kafka infrastructure.
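To show what those two concerns look like in code, here is a minimal sketch of a replayer that keeps events in timestamp order and emits them in bounded batches. It is not Uber’s design; the `replay_in_order` function, the `(timestamp, payload)` event shape, and the batch size are assumptions for illustration, and each input list is assumed to be already sorted by timestamp.

```python
import heapq


def replay_in_order(partitions, batch_size):
    """Merge per-partition event lists by timestamp and yield bounded batches."""
    # heapq.merge lazily merges already-sorted inputs without loading everything at once.
    merged = heapq.merge(*partitions, key=lambda event: event[0])
    batch = []
    for event in merged:
        batch.append(event)
        if len(batch) == batch_size:
            yield batch  # the caller decides how fast to publish each batch
            batch = []
    if batch:
        yield batch


# Events as (timestamp, payload), stored in two separate files or partitions.
partition_a = [(1, "a1"), (4, "a2"), (6, "a3")]
partition_b = [(2, "b1"), (3, "b2"), (5, "b3"), (7, "b4")]

batches = list(replay_in_order([partition_a, partition_b], batch_size=3))
```

Because the function is a generator, the caller can pause between batches to avoid flooding the broker. A production replayer would also have to handle late or out-of-order data, failures, and restarts, which is where most of the custom tooling effort goes.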

Use a streaming system with an inbuilt real-time data lake

As an alternative to building your own infrastructure, you can use a platform such as Estuary Flow that provides real-time streaming with an inbuilt real-time data lake.

Estuary Flow is a real-time data operations platform that allows you to set up real-time pipelines with low latency and high throughput without you provisioning any infrastructure.

It’s a distributed system that scales with your data. It performs efficiently whether your upstream data inflow is 100MB/s or 5GB/s. You can also backfill huge volumes of data from your source systems in minutes.

To get started, you can create a free trial account on Flow’s website.

Conclusion

In this article, we’ve explored why real-time streaming is becoming more important for businesses and how you can solve one of its toughest pitfalls: working with both real-time and historical data.

We’ve looked at the following options:

- Using Kafka as your data lake

- Implementing the Lambda architecture

- Implementing the Kappa architecture

- Building your own real-time OLAP database

- Using a streaming system with an inbuilt real-time data lake

Each method has pros and cons, but how you see them depends on your use case and needs.

Which factors are most important to your organization, and what would you choose? Let us know in the comments.