Who is this guide for?

Welcome to the complete guide to change data capture in MySQL!

This resource is designed to support a wide range of data-focused and data-adjacent professionals who meet two simple requirements:

- You’re part of an organization that uses the relational database MySQL, or intends to migrate to it.

- You’re aware of the industry buzz around change data capture (CDC) and want to learn more.

Business leaders: you need a no-nonsense foundation to make decisions. We cover the essentials of MySQL and CDC, plus the pros and cons of different approaches.

Engineers and IT professionals: you intimately know the systems you work with, but figuring out the best changes to make can be time-consuming. Here you’ll find a succinct intro to a range of MySQL CDC methodologies.

Implementing a new data architecture or strategy requires understanding and buy-in from a variety of teams that may not work together day-to-day. Having a common starting point can make the collaborative process easier. That’s what this guide is for.

Who is Estuary?

Estuary is the company behind Flow, a next-generation DataOps platform that offers real-time, scalable, flexible data integration capabilities. That includes real-time CDC for MySQL, plus much more.

Our team has decades of combined experience in data engineering. And we understand the roadblocks data-driven companies face because we’ve lived them.

While we’re excited to discuss Flow’s CDC capabilities, that’s not the point of this guide. Instead, we aim to provide a holistic overview of MySQL CDC and give you the tools to make the right choice for your unique use case.

Looking for something else? Check out our CDC Guides for other systems:

Let’s get started.

MySQL: an Introduction

MySQL is a popular open-source relational database. Though it’s free to use, MySQL has been owned and sponsored by a corporation since its inception: first by the Swedish MySQL AB, and later by Sun Microsystems, which is now the Oracle Corporation.

There are a handful of other popular relational databases, including PostgreSQL, Oracle, SQLServer, and DB2. Here are a few reasons you might choose MySQL specifically.

- MySQL was developed for fast performance out of the box, but offers customization for advanced users with specialized use-cases, including 16 possible storage engines.

- It’s known as an extremely secure database, featuring a unique security layer and encryption capabilities. It’s fault-tolerant and ACID compliant, making it an all-around reliable option.

- It’s free and open-source, providing flexibility, but official support channels are available through its corporate ownership.

- Compared to other RBDMS, it’s simpler to set up and start working with.

- MySQL is well-suited for CDC workflows.

MySQL is commonly used as the primary transactional database for a data stack. That is, it acts as the quick, robust system of record that powers applications and keeps track of rapidly changing information.

Consider one very popular application for MySQL: e-commerce. An online store needs a transactional database to track data about customers and inventory, and to process sales. MySQL is an ideal choice because it’s a fast and secure row-based relational database.

Just as an online store is unlikely to be the only application a retailer builds, MySQL is unlikely to stand alone as a data system. Relational databases simply aren’t ideal for all workflows — most notably, data analytics.

While MySQL is great at managing the transactions that make sales and record-keeping possible, it’d be a poor fit for analysis. And for our hypothetical retailer to succeed, they need to analyze their store’s performance, marketing, inventory, and more. Data warehouses are designed to power analysis like this. However, they are ill-suited for transactional workflows, making them complementary to systems like MySQL.

To learn more about different types of data storage, see this blog post.

Why Change Data Capture for MySQL?

In common use patterns like the one described above, MySQL will be the most up-to-date data storage system in the stack. But your data warehouses and other storage systems, which in turn power analytical applications, also need to be accurate.

This is a classic data integration challenge. You need to get the latest data from MySQL to all your other systems in a way that’s:

- Timely, in a world that expects fresh data within minutes or seconds

- Reliable in the face of inevitable errors and downtime

- Minimally harmful to performance, even as your data grows to terabyte scale

When implemented correctly, change data capture can meet all these requirements.

Change data capture, or CDC, is the process of recognizing a change in a source data system so that a downstream system can act on that change, usually by updating a target system to reflect new information.

MySQL is one of the most commonly requested sources for CDC. Fortunately, it’s also well suited to it.

- For database administrators, simpler CDC methods are easy to set up using SQL alone.

- Advanced, real-time CDC methods are also attainable using MySQL’s binary log, or binlog.

In the next section, we’ll cover these methods in more depth.

MySQL Change Data Capture Methods

When we talk about change data capture these days, we’re generally referring to real-time CDC. In this type of CDC setup, data changes from MySQL are reflected in the targets within seconds or milliseconds. Real-time CDC is event-driven, scales better, and has been challenging — at least historically.

However, CDC has no temporal requirement by definition. Various methods exist, and they can be batch or real-time.

In general, batch methods tend to be easier to implement, but introduce latency and can have a significant performance impact. With real-time CDC, data changes from the source database, like MySQL, are reflected in the target systems almost immediately. Event-driven CDC scales better, but has historically been more challenging.

In the following sections, we’ll describe three approaches that fall into both categories:

- CDC using queries (batch)

- CDC using triggers (batch)

- CDC using the binlog (real-time)

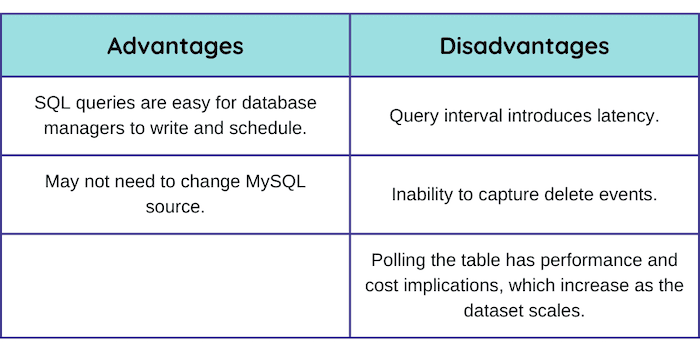

Queries for MySQL Change Data Capture

SQL queries are a simple method for batch CDC in MySQL. They can be set up on the command line or in a client application like MySQL Workbench.

For this method to work, the source table’s schema needs to have a version number or timestamp column indicating when it was last updated. If it doesn’t, you’re better off using the trigger method, which we’ll cover below.

Your SQL query will look something like:

plaintextSELECT * FROM my_table WHERE time_updated > time_last_query’;You can run the query at a recurring interval using MySQL’s built-in Event Scheduler.

Now, the selected records still need to make it to the target system, which is where the real complexity of this method lies. Most solutions involve either a bespoke ETL pipeline or a use a challenging event-driven framework like Kafka Connect.

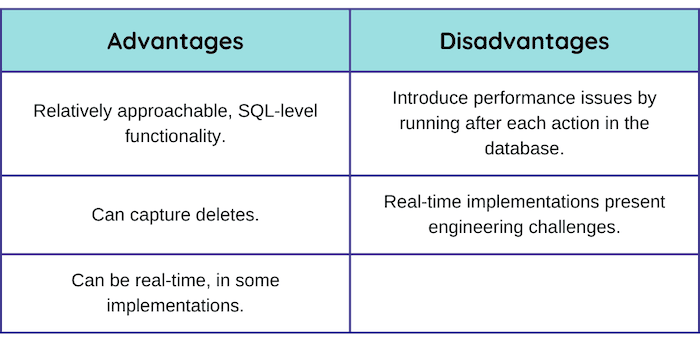

Triggers for MySQL Change Data Capture

An alternative method uses MySQL triggers: functions that listen for insert, update, and delete events on a given table.

You can write these events to a second table, or use them to update timestamps, as mentioned in the section on queries above.

At this point, you have a log of the changes, but it’s still inside MySQL. Once again, you have to move them to the destination system.

From there, you’ll face the same challenge as when using queries: either building or purchasing a pipeline solution to write the changes to the target system.

Triggers also have a significant performance impact on the database. A good practice is to run the trigger against a replica of the main table, which adds another layer of complexity.

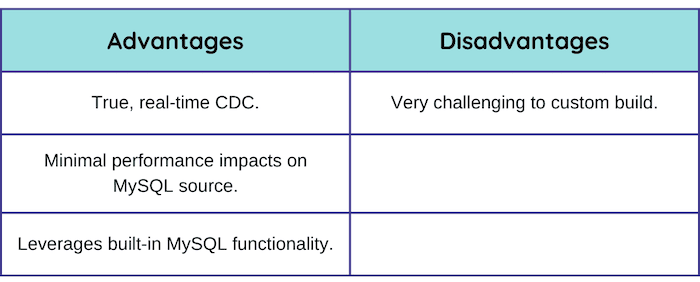

MySQL Binary Log for Real-time Change Data Capture

Both queries and triggers are methods of tracking change events in MySQL that introduce latency and can bog down performance.

But there’s another way. You can capture changes in real time by taking advantage of built-in MySQL functionality.

When enabled, the binary log (binlog) tracks change events in the database and stores them as a binary file. The binlog was designed as a source for database replication, as well as some data recovery implementations. This makes it ideal for driving event-based CDC — what many have come to think of as true CDC.

(Hint: Check out the terminology reference at the bottom of the post for more details on these concepts.)

Here are the basic steps (note that for a managed MySQL instance, like Amazon RDS or Google Cloud SQL, names and operations can be slightly different):

- Make sure the binary log is enabled on your database

- Make sure that all tables in the database have a primary key. This makes processing change events simpler and is required my many CDC setups.

- (For most implementations) Make sure the

binlog_formatsystem variable is set to the defaultROW. - Make sure the binlog expiration period is at least several days (the default is 30 days, which is a good amount of time to guard against failures).

- Parse the binlog data with a plugin or connector into isolated change events.

- Set up a messaging queue or event bus that can relay the binlog messages to the target system.

Most of the real work happens at steps four and five: these systems are notoriously hard to set up. Capturing the decoded change events into a Kafka topic, for example, is not a simple plug-and-play solution.

Fortunately, platforms and services are becoming available to make this method more accessible.

The open-source platform Debezium paved the way to making event-based CDC approachable. Debezium translates events into JSON and uses Kafka as an event bus. It offers out-of-the-box connectors to popular sources, including MySQL.

Many other MySQL CDC services are becoming available that make the user experience even simpler. Some leverage Debezium as their open-source foundation; others use a different architecture.

When you’re evaluating managed CDC services and open-source platforms, consider your individual needs, including:

- The size and shape of your source data.

- How much data engineering work you can take on.

- The type of destination system you need to move the data to.

Let’s look a closer look at one managed CDC service: Estuary Flow.

Estuary Flow for Real-Time MySQL CDC

Estuary’s DataOps platform, Flow, includes a connector for MySQL CDC.

So, what exactly does that mean?

Flow is a centralized platform for building real-time data pipelines that connect a wide variety of source and destination data systems using open-source connectors. But unlike a typical ETL platform, Flow has an event-driven runtime, allowing true, real-time CDC.

When you use the MySQL source connector, Flow acts as a log-driven, real-time CDC pipeline.

After running some basic configuration on your database, as outlined above, the MySQL connector and Flow take over the last two steps. They integrate with the binlog to capture change events to your Flow data collection. Cloud-storage-backed collection data is streamed through the Flow runtime, which is built on Gazette, an open-source streaming broker that was also built by Estuary’s engineering team.

Your data collections captured from MySQL are automatically backed up to a data lake in cloud storage. From there, they can be streamed to one or more target systems using any available materialization — or destination — connector. This happens with millisecond latency.

Features of Flow as a MySQL CDC pipeline:

- High degree of customization using the intuitive GitOps workflow or UI: Add additional data sources or multiple destinations to your CDC pipeline, and combine or separate streams of data without direct engineering requirements on the streaming broker.

- Ability to add transformations without an additional tool: Aggregations, joins, and stateful stream transformations are all possible.

- Minimize performance impact of historical backfills: Backfilling data from MySQL tables is important for data integrity, even in event-driven CDC, but re-reading large tables can cause performance impacts or even errors like data duplication. Flow avoids having to re-read the whole MySQL table by writing a checkpoint of where it left off in that source table.

Key MySQL Features and Terms for Change Data Capture

Real-time MySQL CDC requires you to leverage some of MySQL’s defining features and components. Here’s a quick reference:

Binary log (binlog):

The MySQL binlog, or binary log, is a database transaction log that keeps track of change events in the source MySQL database and writes them to a binary file. It’s a native MySQL feature, but it isn’t enabled by default. But enabling the binlog allows two important workflows:

- Data recovery operations in case of failure. When a server goes down and must be restored from a backup, the binlog events are used to catch up with what has occurred since the backup was created.

- Replication. There are many workflows that require database replicas, and more importantly (for the sake of this guide) MySQL’s replication capabilities are the basis of CDC.

The binlog retention period is 30 days by default, which is usually an optimal amount for CDC setups.

Source-replica architecture

In MySQL, the main server is called the source. Servers to which the source is replicated are called replicas.

Replication

Several types of replication are available in MySQL, all of which use the binary log. Replication can be:

- Asynchronous (the default), semisynchronous, or delayed. Asynchronous replication can still happen in near-real-time.

- Row-based (the default) or statement based. Row-based replication only copies the changes that have occurred, rather than the entire SQL statement. See the binlog format docs for more information.

Typically, CDC setups use the defaults.

Additional Resources

Estuary resources:

- Blog series on CDC

- Flow documentation

- Flow and the MySQL CDC connector code on GitHub

- Get started with Flow today

Other resources:

- MySQL documentation for topics mentioned: triggers, event scheduler, binary log

- Custom Postgres CDC use-cases: Shopify, Netflix, Fundbox

- Technical blogs on Postgres CDC: Rockset