Operational databases are the digital workhorses that handle the constant influx of data that our modern world generates. From tracking warehouse inventory to managing customer orders for a global eCommerce giant, operational database examples and use cases are endless.

To get the most out of operational databases, you need to understand the challenges they pose. Many companies run into the same issues and get creative in finding workarounds, so the smart move is to learn from their experience instead of reinventing the wheel.

To make your data management journey even smoother, we've put together this handy guide. It will give you a head start in understanding these operational database challenges and how others have tackled them.

What Is An Operational Database?

An operational database, also known as a transactional database or OLTP (Online Transaction Processing) database, is a database management system designed to efficiently manage an organization’s day-to-day transactional operations. These operations typically include the creation, retrieval, updating, and deletion of data.
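To make these four operations concrete, here is a minimal sketch using Python's built-in `sqlite3` module and an in-memory database. The `customers` table and its columns are hypothetical; a production operational database such as PostgreSQL or SQL Server would run the same kinds of statements through its own driver.

```python
import sqlite3

# In-memory SQLite database standing in for an operational (OLTP) store.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL, email TEXT)"
)

# Create: insert a new record.
conn.execute(
    "INSERT INTO customers (name, email) VALUES (?, ?)", ("Ada", "ada@example.com")
)

# Retrieve: read it back by a lookup column.
row = conn.execute(
    "SELECT id, name, email FROM customers WHERE name = ?", ("Ada",)
).fetchone()

# Update: change one field of that record.
conn.execute(
    "UPDATE customers SET email = ? WHERE id = ?", ("ada@new.example.com", row[0])
)
updated_email = conn.execute(
    "SELECT email FROM customers WHERE id = ?", (row[0],)
).fetchone()[0]

# Delete: remove the record and commit the transaction.
conn.execute("DELETE FROM customers WHERE id = ?", (row[0],))
conn.commit()

remaining = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
print(row, updated_email, remaining)
# (1, 'Ada', 'ada@example.com') ada@new.example.com 0
```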

Operational databases typically support core business activities like order processing, inventory management, customer relationship management (CRM), and more. Unlike a data warehouse, which is built for analysis and decision-making, an operational database is built to store and update the detailed records that daily operations depend on, such as customer data, product listings, and sales records.

Operational databases are updated in real time, so you can always access the most current data available. They work especially well in systems where fast, accurate data retrieval is essential, such as eCommerce platforms and banking systems.

Here’s what makes them special:

- Stability: They can accommodate distributed systems in their infrastructure. So even if one part faces issues, the entire system continues to operate smoothly.

- IoT Features: Operational database systems connect well with the Internet of Things (IoT), continuously ingesting device data so it can be monitored and checked as it arrives.

- Growth Support: Modern operational database systems can adjust and grow as businesses need. They're fast and can manage many users at the same time.

- Quick Insights: Operational databases provide immediate feedback that helps people make better decisions. They can also integrate with other tools that tailor this feedback to what the user wants, without changing the main database.

5 Key Features Of Operational Databases

- Real-time Processing: Operational databases are ideal for real-time or near-real-time data processing. They provide immediate responses to user requests which makes them a great fit for applications that need quick access to transactional data.

- Normalized Data Structure: They use a normalized data structure to minimize data redundancy and maintain data integrity. This includes organizing data into smaller, related tables and establishing relationships between them using keys (e.g., primary keys and foreign keys).

- Concurrent Access: Operational databases support concurrent access by multiple users or processes. This means that multiple users can interact with the database simultaneously and the database system ensures data consistency and integrity through mechanisms like locking and transaction isolation levels.

- High Transaction Volume: Operational databases can handle a high volume of transactions. These transactions typically insert, update, delete, or retrieve individual records or small sets of records. They are optimized for short, rapid transactions and can process many transactions per second.

- Data Consistency and ACID Compliance: Operational databases adhere to the ACID (Atomicity, Consistency, Isolation, Durability) properties to maintain data integrity and reliability, even in the event of a system failure. These properties ensure that transactions execute in a way that keeps data consistent and prevents data anomalies.
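Two of these features, the normalized structure and ACID transactions, can be sketched together with Python's `sqlite3` module. The schema is hypothetical: customers, products, and orders live in separate tables linked by primary and foreign keys. `place_order` is an illustrative helper, not a standard API; it records an order and decrements stock in a single transaction, so a failure in either step rolls back both.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled

# Normalized schema: each fact is stored once and linked to others by keys.
conn.executescript("""
CREATE TABLE customers (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL
);
CREATE TABLE products (
    id    INTEGER PRIMARY KEY,
    name  TEXT NOT NULL,
    stock INTEGER NOT NULL CHECK (stock >= 0)
);
CREATE TABLE orders (
    id          INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),
    product_id  INTEGER NOT NULL REFERENCES products(id),
    quantity    INTEGER NOT NULL
);
INSERT INTO customers (id, name) VALUES (1, 'Ada');
INSERT INTO products (id, name, stock) VALUES (10, 'Widget', 5);
""")


def place_order(conn, customer_id, product_id, quantity):
    """Record an order and decrement stock atomically; return False if either step fails."""
    try:
        with conn:  # commits if the block succeeds, rolls back if it raises
            conn.execute(
                "INSERT INTO orders (customer_id, product_id, quantity) VALUES (?, ?, ?)",
                (customer_id, product_id, quantity),
            )
            conn.execute(
                "UPDATE products SET stock = stock - ? WHERE id = ?",
                (quantity, product_id),
            )
        return True
    except sqlite3.IntegrityError:
        return False


first = place_order(conn, 1, 10, 3)     # succeeds: stock 5 -> 2
second = place_order(conn, 1, 10, 3)    # CHECK fails (stock would be -1); the insert rolls back too
unknown = place_order(conn, 99, 10, 1)  # foreign key fails: customer 99 does not exist
stock = conn.execute("SELECT stock FROM products WHERE id = 10").fetchone()[0]
order_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(first, second, unknown, stock, order_count)  # True False False 2 1
```

The second call shows atomicity: its order row was inserted before the stock check failed, yet the rollback removed it, so the order count stays at 1.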

Challenges & Solutions In Operational Database Management: 5 Examples To Consider

In operational database management, every day brings new challenges, and with those challenges come opportunities for innovative solutions. Let’s look at 5 real-world examples that show the hurdles organizations faced in managing their operational databases and the creative ways they overcame them.

Example Problem 1: Scalability Issues

As businesses grow, their operational databases face a tough job: managing increasing data volumes and serving ever more user requests. When databases can’t keep up with this load, users experience slow response times, outages, and sometimes even data loss.

Uber, the global ride-sharing giant, is a prime example. As they grew, they needed real-time data processing to connect drivers and passengers, adjust pricing based on demand, and analyze metrics related to riders, drivers, trips, and finances.

However, their existing database system had latency issues: data ingestion took anywhere from 1.5 seconds to 3 minutes, depending on the source. For a company that relied so heavily on real-time data, this inconsistency was a major problem.

Proposed Solution: A Distributed Database System

Uber turned to SingleStore, a distributed database system, to address their scalability challenges. SingleStore's design uses a massively parallel processing architecture that lets it run on multiple machines.

This setup not only supports faster data ingestion but also combines new and existing information to provide quick answers to queries. For Uber, this meant that they could make real-time decisions based on analytical results and respond quickly to changing market dynamics.

Here’s how it works:

- Load Distribution: Spreading user requests across servers means no single server gets overwhelmed.

- Data Redundancy: Storing data across multiple servers boosts data availability and resilience. If one server fails, another picks up the slack.

- Enhanced Performance: With the right setup, data retrieval becomes faster as the system can pull data from multiple sources simultaneously.
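The placement idea behind load distribution and redundancy can be sketched in a few lines of Python. This is a generic illustration, not SingleStore's actual sharding scheme: the node names and replication factor are assumptions, each key is hashed to pick a primary node, and a copy goes to the next node in the list.

```python
import hashlib
from collections import Counter

NODES = ["node-a", "node-b", "node-c", "node-d"]  # hypothetical server names
REPLICATION_FACTOR = 2  # assumed: each record is stored on two nodes


def placement(key: str, nodes=NODES, rf=REPLICATION_FACTOR):
    """Return the nodes that hold `key`: a hash-chosen primary plus rf - 1 successors."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    start = int.from_bytes(digest[:8], "big") % len(nodes)
    return [nodes[(start + i) % len(nodes)] for i in range(rf)]


# Load distribution: primaries for 10,000 keys spread roughly evenly over the nodes.
primary_load = Counter(placement(f"trip-{i}")[0] for i in range(10_000))
print(primary_load)

# Redundancy: every key lives on two distinct nodes, so losing one node loses no data.
print(placement("trip-42"))
```

Taking the hash modulo the node count reshuffles most keys whenever a node is added, which is why production systems typically use consistent hashing or a fixed number of partitions instead.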

Example Problem 2: Data Integration Difficulties

Operational databases often need to interact with other databases or applications, and the process isn't always smooth. Mismatched data formats, disparate systems, or different update frequencies can all complicate integration. These difficulties can lead to incorrect data merges, data loss, or even system downtime.

For example, a global bank needed to meet Anti-Money Laundering (AML) transaction-monitoring requirements and comply with Financial Conduct Authority (FCA) regulations. However, it hit a roadblock: the bank ran a mix of systems, from mainframes to cloud platforms, and integrating data from all of them into its central data lake was difficult, especially with limited internal resources.

Proposed Solution: Middleware Solutions & Data Mapping Techniques

Banks can use middleware solutions to handle data integration challenges. Middleware acts as an important connector that bridges different systems for a smooth data flow. For a global bank dealing with different systems, middleware can seamlessly link them to ensure data consistency.

Combined with data mapping, which aligns fields from different sources with the structure of the target database, this approach makes the bank's data processes more efficient and helps ensure it has accurate, complete data to support its Anti-Money Laundering efforts.

- Seamless Integration: Using middleware and data mapping, banks can merge disparate databases without loss or misinterpretation of data.

- Operational Efficiency: Smooth data flow between integrated systems means businesses can function more efficiently and avoid downtime or data retrieval delays.

- Cost Savings: While integration tools or expertise may require an initial investment, in the long run they reduce the costs of manual data handling and system errors.
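The data-mapping step can be sketched as one small mapper per source system, each converting raw records into a shared target format. Everything here is hypothetical, including the field names of the "mainframe" and "cloud" layouts and the `Transaction` schema; the point is that amounts, currencies, and dates from both sources end up in the same representation.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from decimal import Decimal


@dataclass(frozen=True)
class Transaction:
    """Unified record format in the central store (hypothetical fields)."""
    account_id: str
    amount: Decimal
    currency: str
    booked_at: datetime


def from_mainframe(rec):
    # Hypothetical legacy layout: padded account number, amount in cents, date as YYYYMMDD.
    return Transaction(
        account_id=rec["ACCT_NO"].strip(),
        amount=Decimal(rec["AMT_CENTS"]) / 100,
        currency=rec["CCY"].upper(),
        booked_at=datetime.strptime(rec["BOOK_DT"], "%Y%m%d").replace(tzinfo=timezone.utc),
    )


def from_cloud(rec):
    # Hypothetical cloud API layout: decimal-string amount, ISO 8601 timestamp with offset.
    return Transaction(
        account_id=rec["accountId"].strip(),
        amount=Decimal(rec["amount"]),
        currency=rec["currency"].upper(),
        booked_at=datetime.fromisoformat(rec["bookedAt"]),
    )


MAPPERS = {"mainframe": from_mainframe, "cloud": from_cloud}


def normalize(source, rec):
    """Route a raw record to the mapper for its source system."""
    return MAPPERS[source](rec)


legacy = normalize("mainframe", {"ACCT_NO": "  GB001 ", "AMT_CENTS": "0012550",
                                 "CCY": "GBP", "BOOK_DT": "20240301"})
modern = normalize("cloud", {"accountId": "GB001", "amount": "125.50",
                             "currency": "gbp", "bookedAt": "2024-03-01T00:00:00+00:00"})
print(legacy == modern)  # True: both sources map to the same transaction
```

Using `Decimal` rather than `float` for amounts avoids rounding drift when converting between cents and decimal strings.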

Estuary Flow offers a specialized solution for operational databases. It is built to handle the complexities of integrating data from different sources, so if you are facing challenges with mismatched data formats and system disparities, Flow is ideal for your business.

Estuary Flow prioritizes real-time data movement and transformation so even when data comes from different systems, it remains consistent and up-to-date. This way, it directly addresses the issues of incorrect data merges and potential system downtimes.

Example Problem 3: Security Vulnerabilities

Operational databases, being at the core of business operations, are attractive targets for malicious actors. Database security weaknesses can result in unauthorized access, data tampering, or even data theft. These breaches can not only cause financial damage but also erode the trust and value of a brand.

Consider the case of Medical Informatics Engineering (MIE), a prominent developer of electronic medical record software. MIE's database stored sensitive information such as names, Social Security numbers, and medical conditions.

Because of a lapse in their security measures, an outsider accessed and exposed a vast amount of this data on the internet. The security breach drew a lot of media coverage, led to multiple legal actions, and dealt a severe financial blow to MIE.

Proposed Solution: Multi-Layered Security Protocols & Regular Audits

Multi-layered security protocols combine firewalls, encryption, user authentication methods, and role-based access controls. Together, these help ensure that only authorized individuals can access the database and that data is encrypted in transit.

Periodic security audits help identify and fix vulnerabilities, and automated tools can monitor database activity and flag unusual actions for review. Here are some of the advantages of implementing robust security measures.

- Trust and Compliance: Enhanced security measures build customer trust and help businesses comply with data protection regulations.

- Protection of Sensitive Data: With strong security measures in place, sensitive information remains out of reach from unauthorized entities.

- Avoid Financial and Reputational Damage: When companies protect their data against breaches, they can avert potential financial losses and protect their reputation.
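Two of the layers mentioned above, role-based access control and automated flagging of unusual activity, can be sketched in plain Python. The roles, permissions, threshold, and the `access` and `flag_unusual` helpers are all hypothetical; a real deployment would rely on the database's own roles and grants plus a dedicated audit system, and this sketch does not cover firewalls or encryption.

```python
from collections import Counter

# Hypothetical role-to-permission mapping; anything not listed is denied.
ROLE_PERMISSIONS = {
    "clinician": {"read:patient"},
    "billing": {"read:invoice", "write:invoice"},
    "admin": {"read:patient", "read:invoice", "write:invoice", "export:patient"},
}
SENSITIVE_ACTIONS = {"export:patient"}  # assumed: bulk exports always get reviewed


def access(audit_log, user, role, action):
    """Check the role's permissions and record the attempt in the audit log."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({"user": user, "role": role, "action": action, "allowed": allowed})
    return allowed


def flag_unusual(audit_log, max_denials=3):
    """Flag users with repeated denied attempts or any successful sensitive action."""
    denials = Counter(e["user"] for e in audit_log if not e["allowed"])
    flagged = {user for user, count in denials.items() if count >= max_denials}
    flagged |= {
        e["user"] for e in audit_log if e["allowed"] and e["action"] in SENSITIVE_ACTIONS
    }
    return flagged


log = []
access(log, "dr_lee", "clinician", "read:patient")     # allowed
for _ in range(3):
    access(log, "mallory", "billing", "read:patient")  # denied three times
access(log, "ops_admin", "admin", "export:patient")    # allowed but sensitive
print(sorted(flag_unusual(log)))  # ['mallory', 'ops_admin']
```

Denying by default (an unknown role gets an empty permission set) is the key design choice: a misconfigured account fails closed rather than open.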

Example Problem 4: Poor Data Quality

Data quality serves as the foundation of every functional database. If the data isn't right, everything built on it can crumble. Wrong data equals bad choices, inefficiencies, and lost money.

Sectors like healthcare depend on accuracy, and unreliable data in an operational database can have devastating consequences. In the U.S. healthcare system, for example, patient misidentification is more than an administrative hiccup: mismatched patient data has become a critical concern.

Beyond the obvious health risks, the economic consequences are also severe. Inaccurate patient identification accounts for about 35% of denied insurance claims, and these denials can hit hospitals hard, costing an average hospital up to $1.2 million a year.

Another major issue of data quality is that of duplicate records. It's not uncommon for a patient to discover multiple records under their name in a hospital's database. With hospitals reporting an 8% to 12% rate of duplicate records, the situation is concerning.

Proposed Solution: Data Quality Monitoring & Regular Data Audits

Data quality monitoring uses tools and processes to continuously check and validate data for accuracy, consistency, and completeness. This helps ensure that data entering the operational database meets the required quality standards.

Regular data audits involve periodically reviewing the data to identify any inaccuracies or inconsistencies. This helps rectify any errors and ensures that the data stays reliable over time. Here are the benefits of focusing on data quality:

- Operational Efficiency: High-quality data reduces operational errors and keeps business processes running smoothly.

- Informed Decision Making: Accurate data ensures that business decisions are based on reliable information.

- Financial Savings: When businesses fix poor data quality issues, they can prevent financial losses arising from misguided decisions or operational inefficiencies.
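A minimal sketch of both practices: `validate` checks each incoming record for completeness and a valid date, and `find_duplicates` groups records whose normalized name and date of birth match, the kind of check that surfaces the duplicate patient records described above. The field names, sample records, and matching rule are hypothetical.

```python
import unicodedata
from collections import defaultdict
from datetime import datetime

REQUIRED_FIELDS = ("mrn", "first_name", "last_name", "dob")  # hypothetical schema


def validate(record):
    """Return a list of quality problems found in one patient record."""
    problems = [f"missing {f}" for f in REQUIRED_FIELDS if not str(record.get(f, "")).strip()]
    dob = record.get("dob")
    if dob:
        try:
            datetime.strptime(dob, "%Y-%m-%d")
        except ValueError:
            problems.append(f"invalid dob {dob!r}")
    return problems


def normalize_name(name):
    """Drop accents, punctuation, and case so "José O'Brien" matches "jose obrien"."""
    decomposed = unicodedata.normalize("NFKD", name)
    return "".join(ch for ch in decomposed if ch.isalpha()).casefold()


def find_duplicates(records):
    """Group record IDs whose normalized name and date of birth are identical."""
    groups = defaultdict(list)
    for r in records:
        key = (normalize_name(r["last_name"]), normalize_name(r["first_name"]), r["dob"])
        groups[key].append(r["mrn"])
    return [mrns for mrns in groups.values() if len(mrns) > 1]


records = [
    {"mrn": "A100", "first_name": "José", "last_name": "O'Brien", "dob": "1980-02-14"},
    {"mrn": "B733", "first_name": "jose", "last_name": "OBrien", "dob": "1980-02-14"},
    {"mrn": "C912", "first_name": "Ana", "last_name": "Silva", "dob": "1992-13-01"},
]
print([validate(r) for r in records])
# [[], [], ["invalid dob '1992-13-01'"]]
print(find_duplicates(records))  # [['A100', 'B733']]
```

Exact matching on normalized fields catches only the simplest duplicates; patient-matching systems typically add fuzzy or probabilistic comparison on top.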

Example Problem 5: Latency Issues In Real-time Data Updates

Operational databases are expected to provide data on demand, instantly. When they can't, it's called a real-time data access delay. This lag can hinder business processes, especially when milliseconds matter. There can be many reasons for slow access speeds – overloaded systems, inefficient data structures, or network issues.

Consider Coin Metrics, a company that provides important financial data. They collect data from different sources and deliver it to their users in real time. One of their primary tools for this is a WebSocket API that streams data live. However, the stream could be interrupted, especially during periods of high market activity.

These interruptions were a big problem for Coin Metrics which relied on constant data flow. Users needed up-to-date data. Any delay or latency meant that decisions could be based on slightly outdated data, posing a risk of financial setbacks for the traders.

Proposed Solution: Data Caching & Database Optimization

Data caching plays a major role in improving system performance. When frequently accessed data resides in 'cache' memory, the system bypasses the longer process of fetching it from the primary database for swifter data retrieval.
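A minimal read-through cache with a time-to-live (TTL) illustrates the idea. This is a generic sketch, not Coin Metrics' implementation: `load_metric` is a hypothetical stand-in for a database query, the values are placeholders, and an injectable clock makes the expiry behavior deterministic.

```python
import time


class TTLCache:
    """Read-through cache: serve recent values from memory, reload from the database otherwise."""

    def __init__(self, loader, ttl_seconds=5.0, clock=time.monotonic):
        self._loader = loader    # function that queries the primary database
        self._ttl = ttl_seconds  # how long a cached value is considered fresh
        self._clock = clock
        self._entries = {}       # key -> (value, time it was loaded)
        self.hits = 0
        self.misses = 0

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[1] < self._ttl:
            self.hits += 1
            return entry[0]
        self.misses += 1
        value = self._loader(key)
        self._entries[key] = (value, now)
        return value


db_queries = []


def load_metric(asset):
    """Hypothetical loader standing in for a query against the primary database."""
    db_queries.append(asset)
    return {"asset-1": 100.0, "asset-2": 200.0}[asset]  # placeholder values


fake_time = [0.0]  # controllable clock so the example is deterministic
cache = TTLCache(load_metric, ttl_seconds=2.0, clock=lambda: fake_time[0])
cache.get("asset-1")  # miss: queries the database
cache.get("asset-1")  # hit: served from memory
cache.get("asset-2")  # miss
fake_time[0] = 3.0    # the asset-1 entry is now older than the TTL
cache.get("asset-1")  # miss: reloaded from the database
print(cache.hits, cache.misses, db_queries)  # 1 3 ['asset-1', 'asset-2', 'asset-1']
```

The TTL is the main design knob: a shorter TTL keeps cached values fresher but sends more reads to the database, which matters for feeds where stale values carry financial risk.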

Optimizing the database improves efficiency, too. Effective indexing and refined queries cut down the amount of data the database has to scan for each request, which reduces access times. When these strategies work together, the database becomes smoother and more responsive.

- Immediate Data Retrieval: Caching provides rapid responses to frequent data requests and reduces wait times.

- Consistent User Experience: With data delays minimized, traders can trust the platform to provide accurate, timely information, solidifying brand reliability.

- Optimal Resource Use: With optimized queries and indexes, the database processes requests using fewer resources and can serve more users efficiently.
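The indexing side of database optimization can be observed directly with SQLite's `EXPLAIN QUERY PLAN`. In this sketch (hypothetical `orders` table and index name), the same lookup goes from a full table scan to an index search once an index exists on the filtered column. The exact plan wording differs between SQLite versions, so treat the printed strings as examples.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 1000, i * 1.5) for i in range(50_000)],
)

QUERY = "SELECT id, total FROM orders WHERE customer_id = ?"


def plan(sql, params=()):
    """Return SQLite's query plan; the last column of each row is the readable detail."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params)
    return " ".join(row[-1] for row in rows)


before = plan(QUERY, (42,))
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(QUERY, (42,))
print(before)  # e.g. "SCAN orders": every row is examined
print(after)   # e.g. "SEARCH orders USING INDEX idx_orders_customer (customer_id=?)"
```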

How Does Estuary Flow Help Tackle Operational Database Challenges?

Operational databases play an important role in many businesses. But as they scale, they run into challenges like data movement, transformation, and extensibility. Estuary Flow is our DataOps platform designed to streamline these processes and maintain the efficiency and reliability of your databases. It includes several features that address typical operational database problems.

Estuary Flow captures data from various sources and provides real-time Change Data Capture (CDC) from databases, so you always work with the most current data. Flow is also versatile when it comes to data processing: it supports both streaming SQL and JavaScript, letting you handle transformations in different ways.
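As a generic illustration of the CDC idea (not Flow's internals), the sketch below applies a stream of change events to a replica in log order and keeps a checkpoint, so events redelivered after a reconnect are skipped instead of applied twice. The `ChangeEvent` shape (`lsn`, `op`, `key`, `row`) is a hypothetical format, loosely modeled on what log-based CDC tools emit, not Flow's actual document format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ChangeEvent:
    """Hypothetical change event, loosely modeled on log-based CDC output."""
    lsn: int                    # position in the source database's change log
    op: str                     # "insert", "update", or "delete"
    key: int                    # primary key of the affected row
    row: Optional[dict] = None  # new row image (None for deletes)


def apply_changes(replica, events, last_lsn=0):
    """Apply events in log order, skip ones already applied, and return the new checkpoint."""
    for ev in sorted(events, key=lambda e: e.lsn):
        if ev.lsn <= last_lsn:
            continue  # already applied before a restart or reconnect
        if ev.op in ("insert", "update"):
            replica[ev.key] = ev.row
        elif ev.op == "delete":
            replica.pop(ev.key, None)
        else:
            raise ValueError(f"unknown operation {ev.op!r}")
        last_lsn = ev.lsn
    return last_lsn


replica = {}
checkpoint = apply_changes(replica, [
    ChangeEvent(1, "insert", 7, {"sku": "A", "qty": 5}),
    ChangeEvent(2, "update", 7, {"sku": "A", "qty": 3}),
])
# The next batch repeats event 2 (e.g. after a reconnect) and adds new changes.
checkpoint = apply_changes(replica, [
    ChangeEvent(2, "update", 7, {"sku": "A", "qty": 3}),
    ChangeEvent(3, "insert", 8, {"sku": "B", "qty": 1}),
    ChangeEvent(4, "delete", 7),
], checkpoint)
print(replica, checkpoint)  # {8: {'sku': 'B', 'qty': 1}} 4
```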

Key Features Of Estuary Flow

- Extensibility & Adaptability: Lets you easily add connectors and guarantees transactional consistency for accurate data views.

- Reporting & Data Structuring: Offers real-time data flow reports and converts unstructured data into structured formats for easier data management.

- Reliability & Survivability: Comes with built-in testing for data accuracy and features like cross-region and data center support to prevent disruptions from external issues.

- Efficiency & Scalability: Uses low-impact CDC for efficient data use and is designed to manage large data volumes, allowing businesses to grow without facing data management challenges.

- Data Capture & Real-time Updates: Efficiently captures data from various sources, like databases and SaaS applications, and ensures real-time Change Data Capture (CDC) for timely information access.

- Transformations & Materializations: Provides powerful streaming SQL and JavaScript transformations, along with low-latency views across systems, making sure the data remains current and easily accessible.

Conclusion

Operational database examples highlight their significance in our daily lives. They are the architects behind eCommerce transactions, healthcare records, airline reservations, and countless other important functions. Imagine a world without them – it would throw our modern society into chaos.

Operational database management systems bring a lot to the table, but they also come with their fair share of challenges. It's important to take a good look at these hurdles and figure out effective solutions. When you tackle these problems head-on, you can make sure your operational databases stay robust and aligned with their core objectives.

If you’re looking to enhance your database operations, Estuary Flow perfectly complements operational databases. With its advanced features and capabilities, it can help ensure that your operational database remains a strength, not a bottleneck. Explore what Estuary Flow has to offer by signing up for free or contacting our team for further queries.

Frequently Asked Questions

If you've encountered your fair share of head-scratchers about operational databases, we'll answer some of the most common questions you may have come across.

How do operational databases differ from data warehouses?

Operational databases, which can include traditional relational databases like Microsoft SQL Server or NoSQL databases, are designed for day-to-day, transactional operations. They usually contain current, detailed, and granular data. Such databases are typically structured to support specific applications or business functions, and the data changes frequently.

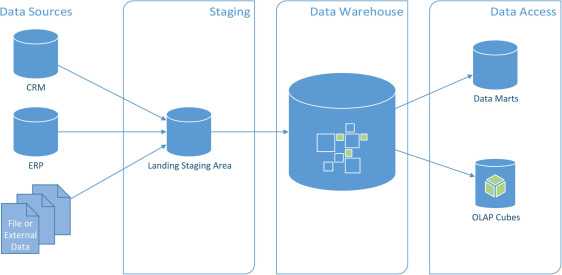

Data warehouses are specialized repositories that store and analyze historical data. They are not typically used for transactional operations but instead serve as a centralized location for aggregating, organizing, and analyzing data from various sources.

A data warehouse stores historical data in a denormalized, consolidated format. It often uses techniques like data modeling, ETL (Extract, Transform, Load) processes, and data aggregation to optimize data for reporting and analysis.
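A toy ETL pass makes the contrast concrete. In this sketch the tables, sample rows, and the `sales_fact` name are all hypothetical, and two in-memory SQLite databases stand in for the operational source and the warehouse: rows are extracted from a normalized schema, transformed into flat, denormalized fact rows, loaded into a warehouse-style table, and then aggregated for reporting.

```python
import sqlite3

# Source: a small normalized operational schema (hypothetical tables and data).
oltp = sqlite3.connect(":memory:")
oltp.executescript("""
CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, category TEXT);
CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    product_id INTEGER REFERENCES products(id),
    qty INTEGER,
    unit_price REAL,
    order_date TEXT
);
INSERT INTO products VALUES (1, 'Widget', 'hardware'), (2, 'Manual', 'books');
INSERT INTO orders VALUES
    (1, 1, 2, 10.0, '2024-03-01'),
    (2, 2, 1, 15.0, '2024-03-01'),
    (3, 1, 1, 10.0, '2024-03-02');
""")

# Extract + Transform: join the normalized tables into flat, denormalized fact rows.
facts = [
    (order_date, category, name, qty * unit_price)
    for order_date, category, name, qty, unit_price in oltp.execute("""
        SELECT o.order_date, p.category, p.name, o.qty, o.unit_price
        FROM orders AS o JOIN products AS p ON p.id = o.product_id
    """)
]

# Load: write the fact rows into a separate warehouse-style table.
warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE sales_fact (sale_date TEXT, category TEXT, product TEXT, revenue REAL)"
)
warehouse.executemany("INSERT INTO sales_fact VALUES (?, ?, ?, ?)", facts)

# Aggregate for reporting: revenue per day and category.
summary = warehouse.execute("""
    SELECT sale_date, category, SUM(revenue)
    FROM sales_fact
    GROUP BY sale_date, category
    ORDER BY sale_date, category
""").fetchall()
print(summary)
# [('2024-03-01', 'books', 15.0), ('2024-03-01', 'hardware', 20.0), ('2024-03-02', 'hardware', 10.0)]
```

The fact table repeats the category and product name on every row; that redundancy would be avoided in the operational schema, but it saves joins in analytical queries.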

How can middleware solutions aid in data integration difficulties?

Middleware bridges different systems with varying data formats and structures. It can perform data transformations and mappings to convert data from one format to another. This helps when integrating data from different sources into a unified format, so the data can be analyzed with advanced analytical tools.

Middleware solutions can route data to the right destinations. They also provide orchestration capabilities so you can define and automate workflows for data integration. They use connectors and adapters to connect to various data sources, including databases, cloud services, APIs, and more. This lets you access data from multiple platforms and systems.
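The routing part can be sketched as a list of rules, each pairing a predicate with a destination. The rules, the threshold, and the destination names are hypothetical, and the per-destination lists stand in for real connectors to queues, ledgers, or a data lake.

```python
from collections import defaultdict

# Hypothetical routing rules: (predicate, destination). A record may match several rules.
ROUTES = [
    (lambda r: r["amount"] >= 10_000, "aml_review_queue"),  # assumed threshold
    (lambda r: r["type"] == "wire", "wire_ledger"),
    (lambda r: True, "data_lake"),  # every record also lands in the central lake
]


def route(record, routes=ROUTES):
    """Return every destination whose rule matches the record."""
    return [dest for matches, dest in routes if matches(record)]


def dispatch(records, routes=ROUTES):
    """Fan records out to per-destination outboxes (stand-ins for real connectors)."""
    outboxes = defaultdict(list)
    for record in records:
        for dest in route(record, routes):
            outboxes[dest].append(record["id"])
    return dict(outboxes)


batch = [
    {"id": 1, "type": "card", "amount": 40},
    {"id": 2, "type": "wire", "amount": 25_000},
    {"id": 3, "type": "wire", "amount": 900},
]
print(dispatch(batch))
# {'data_lake': [1, 2, 3], 'aml_review_queue': [2], 'wire_ledger': [2, 3]}
```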

Why is data redundancy important in distributed database systems?

Data redundancy helps improve fault tolerance and system availability. In distributed databases, different components like network connections, servers, and storage devices can fail. Redundant data copies make sure that even if one copy becomes unavailable because of a failure, the system can still retrieve the data from another copy.

Redundant data can be used to distribute the workload across different nodes in a distributed system. When you have multiple copies of data, requests for that data can be directed to different nodes. This prevents overloading of any single node and improves system performance and response times. It also enhances data access performance as you can retrieve data from the nearest or least loaded node.
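Both ideas, failing over when a copy is unavailable and sending reads to the least-loaded copy, fit in one small selection function. The `Replica` fields and the tie-breaking rule (fewest in-flight requests, then lowest latency) are assumptions for illustration; real systems may also weigh replication lag and data locality.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    name: str          # hypothetical replica identifier
    healthy: bool      # result of the most recent health check
    in_flight: int     # requests currently being served
    latency_ms: float  # recent round-trip estimate from this client


def choose_replica(replicas):
    """Pick the healthy copy with the fewest in-flight requests, then the lowest latency."""
    candidates = [r for r in replicas if r.healthy]
    if not candidates:
        raise RuntimeError("no healthy replica holds this data")
    return min(candidates, key=lambda r: (r.in_flight, r.latency_ms))


replicas = [
    Replica("replica-a", healthy=True, in_flight=12, latency_ms=3.0),
    Replica("replica-b", healthy=True, in_flight=4, latency_ms=9.0),
    Replica("replica-c", healthy=True, in_flight=4, latency_ms=80.0),
]
print(choose_replica(replicas).name)  # replica-b: tied on load with c, but closer

replicas[1].healthy = False           # replica-b fails its health check
print(choose_replica(replicas).name)  # replica-c: next-least-loaded healthy copy
```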

What are the implications of latency issues in real-time data updates?

Latency can result in:

- Delayed updates hinder real-time decision-making.

- High latency can strain server and network resources.

- Latency disrupts workflows and operational efficiency.

- Inconsistent data causes discrepancies and confusion.

- In trading or finance, latency can result in financial losses.

- Industries with strict regulations face compliance challenges.

- Slow updates can frustrate users and affect their experience.

- Outdated information causes incorrect decisions and actions.