Why is it so important to understand the difference between data ingestion and ETL? The answer is simple: it directly impacts how well your organization can use its data resources. In a world where data equals opportunity, making the right call between data ingestion and ETL could be the secret to tapping into unexplored potential and staying ahead of the competition.

To help you make the right decision, we put together this helpful guide. We’ll explore 13 crucial differences between data ingestion and ETL, which one you should pick, and how the choice you make can truly shape the way your data infrastructure functions.

What Is Data Ingestion?

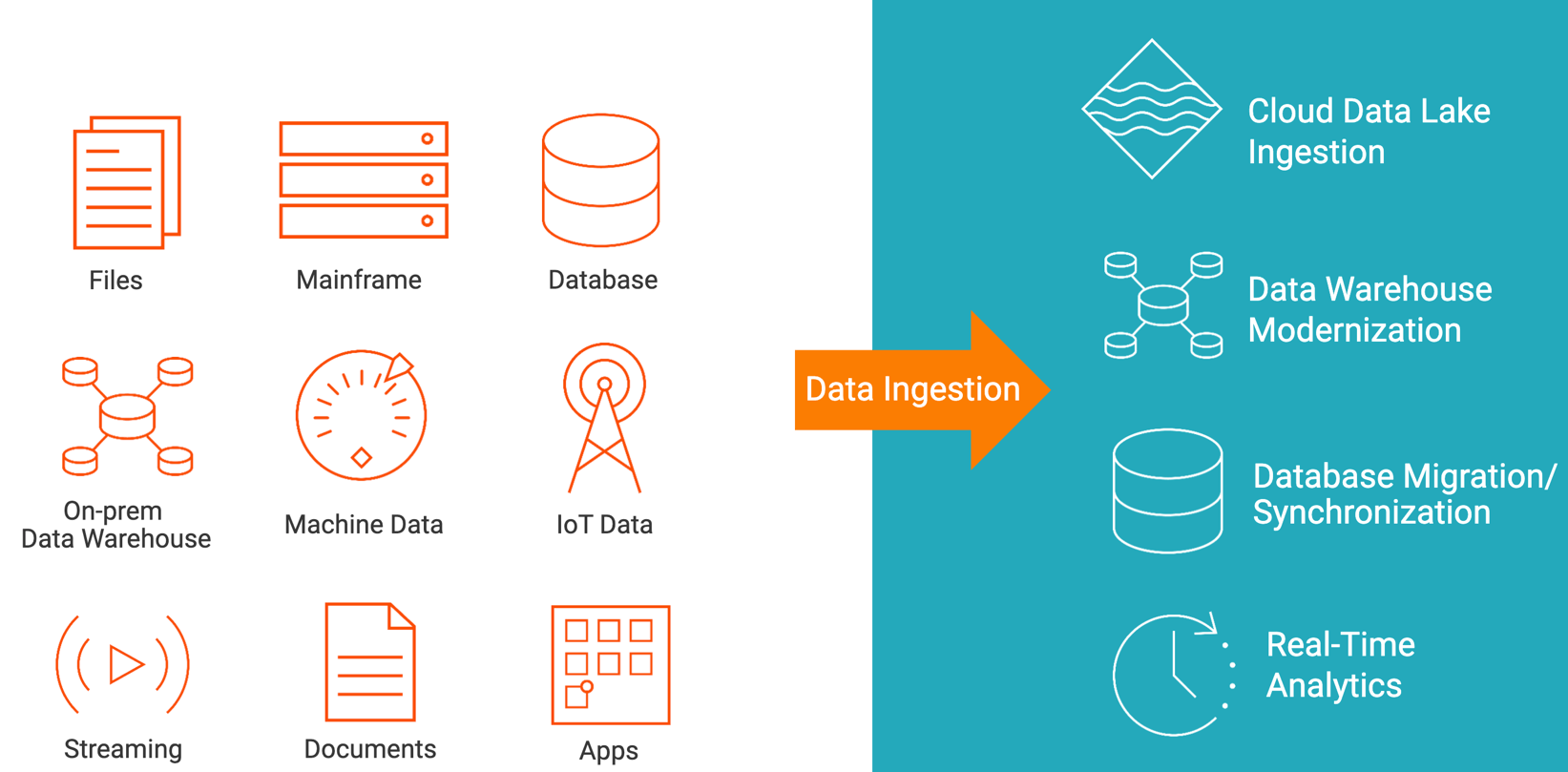

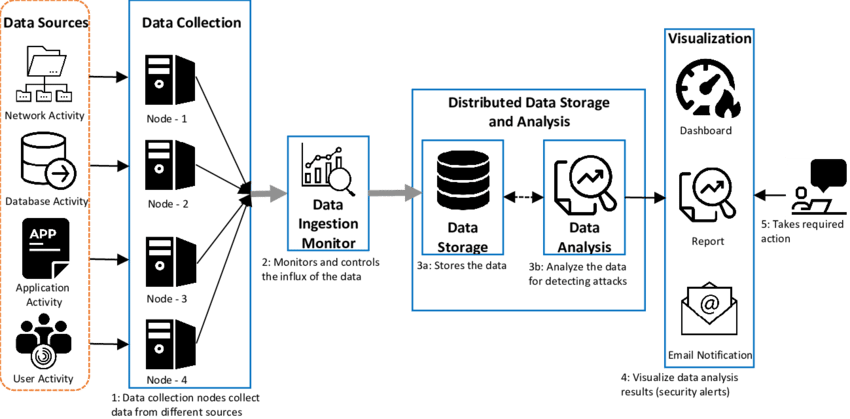

Data ingestion is the process of collecting and importing raw data from various sources into a target system or storage platform without applying extensive transformations. Its goal is to move data quickly and reliably while preserving its integrity for further analysis or storage.

Data ingestion populates a storage or processing system like a data lake, data warehouse, or database with real-time or batch data from sources like logs, sensors, or external databases. It simplifies data access and preparation and sets the stage for downstream operations like data transformation and analysis.

What Is ETL?

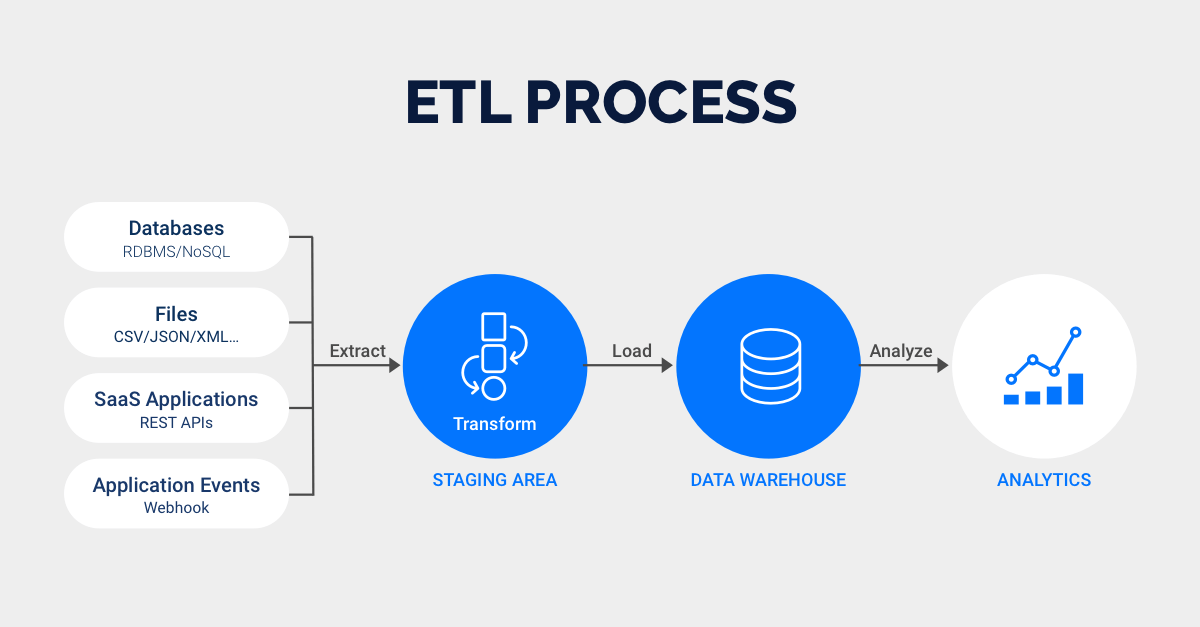

ETL, or Extract, Transform, Load, is a data integration process used in data warehousing and business intelligence (BI). Let’s look at what ETL entails:

- Extract: Data is collected from various sources like databases, files, or applications.

- Transform: Data is then cleaned, structured, and manipulated to fit a consistent format, resolving inconsistencies and errors. This step can include filtering, aggregating, and enriching the data.

- Load: The transformed data is loaded into a target destination, typically a data warehouse, where it can be readily accessed for analysis and reporting.
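The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular ETL tool works: the CSV payload, the `orders` table, and the `extract`/`transform`/`load` function names are hypothetical, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data: a CSV export with inconsistent formatting.
RAW_CSV = """order_id,customer,amount
1, alice ,19.99
2,BOB,5
3,carol,
"""

def extract(text: str) -> list[dict]:
    """Extract: parse raw CSV rows into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows: list[dict]) -> list[tuple]:
    """Transform: trim and lowercase names, cast types, drop rows with no amount."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # filter out incomplete records
        cleaned.append((int(row["order_id"]), row["customer"].strip().lower(), float(amount)))
    return cleaned

def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 'alice', 19.99), (2, 'bob', 5.0)]
```

Note that the order matters: nothing reaches the target table until it has passed through `transform`.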

ETL helps maintain data quality and ensures that you can make informed decisions based on accurate and unified information from different sources.

Estuary Flow is a tool that provides an easy-to-use and scalable streaming ETL solution for efficiently capturing data from sources like databases and SaaS applications. With Flow, you can add transformations before loading data into your destination systems.

But it’s not traditional ETL: it’s a data streaming solution with its own unique process. Flow supports real-time CDC (Change Data Capture) and offers real-time integrations for apps that support streaming.

Data Ingestion vs ETL: The 13 Key Differences

When it comes to data ingestion and ETL, it's not just about choosing one over the other. Understanding the differences between the two approaches is crucial for designing efficient and effective data pipelines. Let’s discuss these differences in detail.

Data Ingestion vs ETL: Purpose

Data Ingestion Purpose

Data ingestion is the initial step in the data pipeline. Its primary purpose is to move raw data from various sources into a central location or data processing system. It focuses on efficiently collecting data and making it available for further processing or analysis.

ETL Purpose

ETL has a broader purpose. It includes data extraction from multiple sources, transforming it to conform to predefined data models or quality standards, and then loading it into a target data store. ETL is designed not only for data transfer but also for data transformation and enhancement.

Data Ingestion vs ETL: Processing Order

Data Ingestion Processing Order

Data ingestion is typically a one-way process that happens before any downstream data transformation. It prioritizes moving data into the target system quickly, which makes it well suited to real-time or near-real-time streaming ingestion, where data needs to be available for use right away.

ETL Processing Order

ETL is a multi-step process that follows a specific sequence: data extraction, then transformation, then loading into a target system. These sequential steps make it more structured and controlled. Traditional ETL processes usually run as batch jobs or scheduled tasks, which may not align with real-time processing needs.

Data Ingestion vs ETL: Transformation

Transformation In Data Ingestion

Data ingestion typically involves little to no data transformation. It focuses primarily on copying data as-is from source systems to the target storage. Any transformation that does occur is usually lightweight, such as data type conversion or basic validation.
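To make "lightweight" concrete, here is a minimal sketch of an ingestion step that applies only a basic validation check and one type conversion, then stores each record otherwise untouched. The JSON-lines input, the `id` field, and the `_ingested_at` metadata column are assumptions for illustration; a plain Python list stands in for the landing zone of a data lake.

```python
import json
from datetime import datetime, timezone

def ingest(raw_lines, landing_zone):
    """Append records to the landing zone mostly as-is.

    Only lightweight steps are applied: parse JSON, check that an 'id'
    exists, coerce 'id' to int, and stamp the ingestion time.
    """
    for line in raw_lines:
        record = json.loads(line)
        if "id" not in record:  # basic validation only
            continue
        record["id"] = int(record["id"])  # simple type conversion
        record["_ingested_at"] = datetime.now(timezone.utc).isoformat()
        landing_zone.append(record)  # all other fields are kept untouched
    return landing_zone

landing = ingest(['{"id": "7", "temp": "21.5C"}', '{"temp": "19C"}'], [])
print(landing[0]["id"], landing[0]["temp"])  # 7 21.5C
```

The `temp` value is deliberately left as the raw string `"21.5C"`: parsing units and cleaning values is left to later stages of the pipeline.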

Transformation In ETL

ETL is where major data transformations occur. Data is cleaned, standardized, and enriched during the transformation phase. This can include operations like aggregations, calculations, joins, and data quality checks. The transformation step is crucial for ensuring that data is accurate, consistent, and suitable for analysis.
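The sketch below shows what a few of these transformation operations can look like together: a join against a second source, a type cast and calculation, standardization of inconsistent values, and enrichment. The record shapes, the `COUNTRY_CODES` lookup table, and the `transform_orders` name are hypothetical.

```python
# Hypothetical extracted inputs from two source systems.
orders = [
    {"order_id": 1, "customer_id": "C1", "amount": "19.99", "country": "us"},
    {"order_id": 2, "customer_id": "C2", "amount": "5.00", "country": "USA"},
]
customers = {"C1": {"segment": "retail"}, "C2": {"segment": "wholesale"}}

# Assumed standardization map: different spellings of the same country.
COUNTRY_CODES = {"us": "US", "usa": "US"}

def transform_orders(orders, customers):
    result = []
    for order in orders:
        customer = customers.get(order["customer_id"], {})  # join on customer_id
        result.append({
            "order_id": order["order_id"],
            "amount": round(float(order["amount"]), 2),  # type cast / calculation
            "country": COUNTRY_CODES.get(order["country"].lower(), "UNKNOWN"),  # standardize
            "segment": customer.get("segment", "unknown"),  # enrich from a second source
        })
    return result

for row in transform_orders(orders, customers):
    print(row)
```

Unmatched customers and unknown countries get explicit placeholder values rather than being dropped, so they can be caught by later data quality checks.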

Data Ingestion vs ETL: Complexity Levels

Data Ingestion Complexity

Data ingestion is relatively straightforward. Its main challenges relate to data volume, velocity, and source connectivity, but the process itself is less complex.

ETL Complexity

ETL is inherently more complex because of the extensive data transformations involved. It requires:

- Design of transformation logic

- Handling various data formats

- Dealing with schema changes

- Managing dependencies

- Ensuring data quality

Data Ingestion vs ETL: Use Cases

Data Ingestion Use Cases

Data ingestion is used for rapidly collecting and storing data from various sources. Use cases for data ingestion include data warehousing, log file collection, and data lake population. It's especially helpful when you need real-time or near-real-time data availability without the overhead of complex transformations.

ETL Use Cases

ETL helps in situations where data needs to be transformed, cleaned, and integrated before it's loaded into a structured data warehouse or analytics platform. ETL is commonly used in business intelligence, reporting, and data analysis where data quality and consistency are critical. Use cases include financial reporting, customer analytics, and integrating data across multiple business systems.

Data Ingestion vs ETL: Error Handling Mechanisms

Error Handling In Data Ingestion

Data ingestion’s error-handling mechanisms are typically simpler. It involves basic error logging and notification systems to alert administrators when data ingestion fails. While some validation checks may be in place, data ingestion is less concerned with complex error handling as the primary goal is to capture data efficiently.
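A common way to keep ingestion error handling simple is to log the failure and set the bad record aside instead of stopping the pipeline. The sketch below assumes JSON input and uses a plain list as a stand-in for a "dead-letter" store; the `ingest_with_dead_letter` name is hypothetical.

```python
import json
import logging

logging.basicConfig(level=logging.WARNING)
log = logging.getLogger("ingestion")

def ingest_with_dead_letter(raw_lines):
    """Keep parseable records; log bad ones and park them for later inspection."""
    ingested, dead_letter = [], []
    for line in raw_lines:
        try:
            ingested.append(json.loads(line))
        except json.JSONDecodeError as exc:
            log.warning("Failed to parse record: %s", exc)  # basic logging/notification
            dead_letter.append(line)  # keep the raw payload; don't stop the pipeline
    return ingested, dead_letter

ok, bad = ingest_with_dead_letter(['{"a": 1}', "not json"])
print(ok, bad)
```

In a real deployment, the warning would typically feed an alerting system so administrators notice when failures spike.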

Error Handling In ETL

ETL processes need more advanced error handling because they transform and integrate data. They include mechanisms to identify, log, and handle errors at various stages of the transformation process. Common strategies include data validation, retry mechanisms, and data reconciliation to ensure data accuracy.
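Two of these strategies, retries and reconciliation, are sketched below. This is an illustrative sketch rather than any specific tool's behavior: `with_retries` uses exponential backoff, `reconcile` checks that every source key made it to the target, and the `flaky_extract` source is a hypothetical stand-in that fails twice before succeeding.

```python
import time

def with_retries(func, attempts=3, base_delay=0.1, retry_on=(Exception,)):
    """Call func(), retrying with exponential backoff on the given exceptions."""
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except retry_on:
            if attempt == attempts:
                raise  # give up and surface the error to the scheduler
            time.sleep(base_delay * 2 ** (attempt - 1))

def reconcile(source_rows, loaded_rows, key="id"):
    """Reconciliation: confirm every source key made it to the target."""
    missing = {r[key] for r in source_rows} - {r[key] for r in loaded_rows}
    if missing:
        raise ValueError(f"Rows missing after load: {sorted(missing)}")
    return True

calls = {"n": 0}

def flaky_extract():
    """Hypothetical source that fails on its first two calls."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source temporarily unavailable")
    return [{"id": 1}, {"id": 2}]

rows = with_retries(flaky_extract, base_delay=0.01, retry_on=(ConnectionError,))
reconcile(rows, [{"id": 1}, {"id": 2}])
```

Restricting `retry_on` to transient errors such as `ConnectionError` avoids retrying failures that will never succeed, like bad transformation logic.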

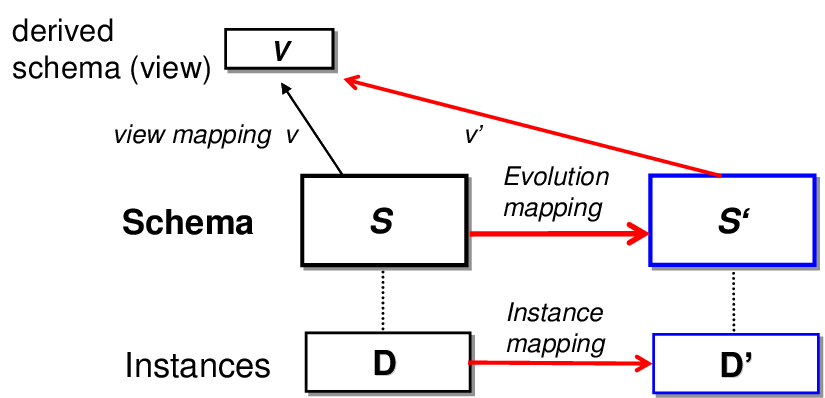

Data Ingestion vs ETL: Schema Evolution

Schema Evolution During Data Ingestion

Data ingestion typically assumes a schema-on-read approach. It doesn't impose rigid schema requirements on incoming data, which makes it more flexible when dealing with data from different sources. Schema evolution during data ingestion is often simpler, and changes in data structure can be absorbed without major disruptions.
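Schema-on-read can be illustrated with a small sketch: raw JSON is stored exactly as it arrived, and a schema is applied only when the data is queried. The records, field names, and the `(type, default)` schema format used by the hypothetical `read_with_schema` helper are assumptions for illustration.

```python
import json

# Ingestion stores raw JSON strings without enforcing a schema.
raw_store = [
    '{"user": "a", "clicks": 3}',
    '{"user": "b", "clicks": "5", "device": "mobile"}',  # new field, different type
]

def read_with_schema(store, schema):
    """Apply a schema only at query time (schema-on-read).

    `schema` maps field name -> (cast function, default value). Extra fields
    in a record are ignored, and missing ones get the default.
    """
    for line in store:
        record = json.loads(line)
        yield {
            field: cast(record[field]) if field in record else default
            for field, (cast, default) in schema.items()
        }

schema = {"user": (str, None), "clicks": (int, 0), "device": (str, "unknown")}
rows = list(read_with_schema(raw_store, schema))
print(rows)
```

Because the second record's new `device` field never broke ingestion, handling it only required changing the read-time schema.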

Schema Evolution During ETL

In ETL processes, schema evolution is more complex as changes in source data structures require adjustments in the ETL logic and the target schema. Handling schema evolution in ETL involves data mapping, schema versioning, and possibly backfilling historical data to align with the new schema.
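Here is a minimal sketch of versioned data mapping in an ETL job. It assumes a hypothetical scenario in which version 2 of a source renamed `cust_name` to `customer_name` and added `loyalty_tier`; the mapping tables translate both versions into one target layout and backfill a default for older records.

```python
# Hypothetical target layout and per-version source-to-target column mappings.
TARGET_COLUMNS = ["customer_id", "customer_name", "loyalty_tier"]

MAPPINGS = {
    1: {"id": "customer_id", "cust_name": "customer_name"},
    2: {"id": "customer_id", "customer_name": "customer_name", "loyalty_tier": "loyalty_tier"},
}

def map_to_target(record: dict, schema_version: int) -> tuple:
    mapping = MAPPINGS[schema_version]  # an unknown version raises KeyError on purpose
    row = {target: record[source] for source, target in mapping.items() if source in record}
    row.setdefault("loyalty_tier", "none")  # backfill a default for older records
    return tuple(row.get(col) for col in TARGET_COLUMNS)

print(map_to_target({"id": 1, "cust_name": "Ann"}, 1))
print(map_to_target({"id": 2, "customer_name": "Bo", "loyalty_tier": "gold"}, 2))
```

Every new source version means another entry in `MAPPINGS`, which is part of why schema evolution takes more deliberate work in ETL.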

Data Ingestion vs ETL: Monitoring Levels

Monitoring In Data Ingestion

Monitoring in data ingestion is directed at tracking the status of data pipelines. This is to ensure that data sources are being ingested as expected and identify potential issues in the ingestion process. It involves basic health checks and system-level monitoring to ensure data availability.

Monitoring In ETL

ETL processes need more comprehensive monitoring. This includes tracking data quality, the progress of data through each ETL stage, and the detection of anomalies. ETL monitoring uses advanced techniques like data profiling, data lineage tracking, and alerting mechanisms to ensure the integrity of transformed data and the overall ETL pipeline.
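One simple form of stage-level monitoring is tracking row counts as data moves through each ETL stage and flagging unexpected drops. The sketch below is only an example: the `StageMonitor` class and its 20% drop threshold are assumptions, and real deployments usually combine checks like this with profiling and lineage tools.

```python
import logging

log = logging.getLogger("etl.monitor")

class StageMonitor:
    """Track row counts per ETL stage and flag suspicious drops between stages."""

    def __init__(self, max_drop_ratio=0.2):
        self.counts = {}
        self.max_drop_ratio = max_drop_ratio  # assumed alert threshold

    def record(self, stage, rows):
        self.counts[stage] = len(rows)
        return rows  # pass-through so it can wrap each stage's output

    def anomalies(self):
        stages = list(self.counts)  # dicts preserve insertion (stage) order
        alerts = []
        for prev, curr in zip(stages, stages[1:]):
            before, after = self.counts[prev], self.counts[curr]
            if before and (before - after) / before > self.max_drop_ratio:
                alerts.append(f"{curr}: {before} -> {after} rows")
                log.warning("Row count dropped sharply at stage %s", curr)
        return alerts

monitor = StageMonitor()
rows = monitor.record("extract", list(range(100)))
rows = monitor.record("transform", [r for r in rows if r % 2 == 0])  # drops half the rows
monitor.record("load", rows)
print(monitor.anomalies())
```

A drop is not always an error (filtering is often intentional), so thresholds like this are usually tuned per pipeline.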

Data Ingestion vs ETL: Data Latency

Data Ingestion

Data ingestion processes are designed for low-latency data delivery. They capture and store data rapidly as it becomes available from source systems. Because ingestion latency is typically minimal, users get real-time or near-real-time access to the data in its raw form.

ETL

ETL processes tend to introduce higher data latency than data ingestion. Both the complexity of the transformations and the size of the dataset affect latency, which can mean longer delays before the data is ready for analysis.

Data Ingestion vs ETL: Aggregation

Aggregation In Data Ingestion

By design, data ingestion typically doesn't aggregate data, because it focuses on the immediate capture of data. Aggregation usually happens at later stages of the data processing pipeline, such as during data analysis or reporting.

Aggregation In ETL

ETL processes frequently include data aggregation as a crucial step. Aggregation in ETL is about summarizing and condensing data to make it more manageable and suitable for analytical purposes. It can include operations like calculating averages, totals, or counts, and it is an integral part of preparing data for reporting and analytics.
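Here is a minimal sketch of the aggregation step described above, computing totals, averages, and counts per group with the standard library. The `sales` rows and the `aggregate_by` helper are hypothetical; in practice this step is often written in SQL (`GROUP BY`) or a dataframe library.

```python
from collections import defaultdict
from statistics import mean

sales = [  # hypothetical rows produced by earlier transformation steps
    {"region": "north", "amount": 120.0},
    {"region": "north", "amount": 80.0},
    {"region": "south", "amount": 50.0},
]

def aggregate_by(rows, key, value):
    """Group rows by `key` and summarize the `value` field of each group."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row[value])
    return {
        group: {"total": sum(vals), "average": mean(vals), "count": len(vals)}
        for group, vals in groups.items()
    }

summary = aggregate_by(sales, "region", "amount")
print(summary["north"])  # {'total': 200.0, 'average': 100.0, 'count': 2}
```

The output is much smaller than the input, which is what makes aggregated tables cheap to query for reports.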

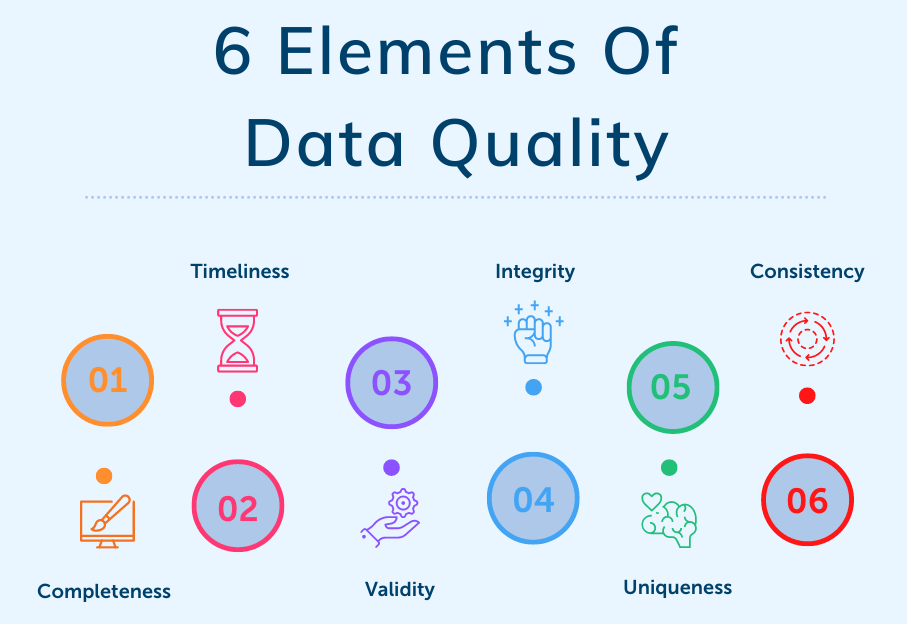

Data Ingestion vs ETL: Data Quality

Data Ingestion

Data ingestion does not focus extensively on data quality. While basic validation checks may be applied to ensure the integrity of ingested data, the primary goal is to capture data as it is. Data quality checks and improvements are typically addressed later in the data processing pipeline.

ETL

Data quality checks, cleansing, and transformation are important parts of ETL processes. Before loading data into the target system, ETL ensures that it is accurate and consistent. This focus on data quality makes ETL ideal for data warehousing and analytics where data reliability is needed.
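The sketch below shows how a simple "quality gate" might cleanse, deduplicate, and validate rows before they are loaded. The `email` field, the `quality_gate` name, and the deliberately simplified email pattern are assumptions for illustration; the regex is not a complete email validator.

```python
import re

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # simplified check, not RFC-complete

def quality_gate(rows):
    """Cleanse, deduplicate, and validate rows; return (clean, rejected)."""
    seen, clean, rejected = set(), [], []
    for row in rows:
        email = (row.get("email") or "").strip().lower()  # cleanse
        if not EMAIL_RE.match(email):
            rejected.append((row, "invalid email"))
        elif email in seen:
            rejected.append((row, "duplicate"))
        else:
            seen.add(email)
            clean.append({**row, "email": email})
    return clean, rejected

clean, rejected = quality_gate(
    [{"email": " A@x.com"}, {"email": "a@x.com"}, {"email": None}, {"email": "bad"}]
)
print(clean)
print([reason for _, reason in rejected])
```

Keeping rejected rows with a reason, instead of silently dropping them, makes it possible to report on and fix data quality issues at the source.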

Data Ingestion vs ETL: Development Time

Data Ingestion Development Time

Data ingestion processes are quicker to develop and implement. Since data ingestion is primarily about moving data from source to storage with minimal transformation, it involves less complexity in terms of coding and development. In many cases, data ingestion solutions can be set up rapidly, especially when using off-the-shelf tools designed for this purpose.

ETL Development Time

ETL development takes more time and effort. You have to design and implement complex transformation logic, error handling, data quality checks, and schema mapping, all of which are time-consuming. The result is cleaner, analysis-ready data, but the trade-off is increased development time.

Data Ingestion vs ETL: Scalability

Data Ingestion

Data ingestion processes can be highly scalable, especially when designed around parallel processing and distributed architectures. Many data ingestion tools and platforms are built to scale and are used in applications that need to handle large amounts of raw data quickly.
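Because ingestion mostly moves data without cross-record logic, independent source partitions can be read in parallel. The sketch below uses a thread pool, which suits I/O-bound reads. `fetch_partition` is a hypothetical stand-in for reading one file, shard, or topic partition.

```python
from concurrent.futures import ThreadPoolExecutor

def fetch_partition(partition_id):
    """Hypothetical stand-in for reading one source partition."""
    return [f"p{partition_id}-record{i}" for i in range(3)]

def parallel_ingest(partition_ids, max_workers=4):
    """Ingest partitions concurrently and flatten the results."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = pool.map(fetch_partition, partition_ids)  # preserves input order
        return [record for batch in results for record in batch]

records = parallel_ingest(range(5))
print(len(records), records[0])  # 15 p0-record0
```

Distributed ingestion platforms apply the same idea across many machines instead of threads in one process.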

ETL

ETL processes, while also scalable, face more challenges when scaling up. The complexity of ETL transformations and the need for data consistency can introduce bottlenecks as data volumes grow. Proper planning and architecture are needed to ensure ETL pipelines can scale efficiently.

| Aspect | Data Ingestion | ETL |
|---|---|---|
| Purpose | Move raw data into a central location. | Extract, transform, and load data into a target data store. |
| Processing Order | One-way: before any transformation. | Sequential: extraction, transformation, loading. |
| Transformation | Minimal to no transformation, basic operations. | Major data transformations, cleaning, and enrichment. |
| Complexity | Relatively straightforward. | More complex with extensive transformations. |
| Use Cases | Rapid data collection and storage. | Data transformation, cleaning, integration. |
| Error Handling | Simpler, basic error logging. | Advanced error handling, data validation. |
| Schema Evolution | Flexible schema-on-read. | Complex, adjustments to ETL logic. |
| Monitoring | Basic, tracking data ingestion pipeline status. | Comprehensive, data quality and progress tracking. |
| Data Latency | Low latency, real-time or near-real-time access. | Higher latency due to transformations. |
| Aggregation | No data aggregation. | Includes data aggregation. |
| Data Quality | Basic data integrity checks. | Focus on data cleaning and quality. |
| Development Time | Quicker development, minimal transformation. | More time and effort due to complex logic. |
| Scalability | Highly scalable with parallel processing. | Scalable but faces challenges with complexity. |

Data Ingestion vs ETL: Choosing The Right Approach

The choice between data ingestion and ETL depends on your specific data integration needs and the characteristics of your data sources. Let’s discuss the key factors to consider when deciding between the two approaches.

Pick Data Ingestion When

Data Freshness Is A Priority

Data ingestion is ideal when real-time or near-real-time access to data is crucial. For instance, in the financial industry, stock market data needs to be ingested continuously to support split-second investment decisions.

Minimal Data Transformation Is Required

When the data arriving from the source doesn't require significant transformation or cleansing before being used, data ingestion is preferable. An example could be website clickstream data that needs to be loaded into data warehouses for analytics.

Source & Target Systems Are Compatible

Data ingestion is suitable when the source and target systems are already compatible in terms of data formats and structures. In the case of IoT devices that transmit data in a standardized format (e.g., JSON), directly ingesting this data into a cloud-based storage system can be efficient.

Cost-Effectiveness Is A Major Consideration

Data ingestion can be cost-effective when dealing with large volumes of data because it often involves fewer transformation steps than ETL. For example, a social media platform can ingest user-generated content, like images and videos, directly into its storage infrastructure without transforming it first.

Pick ETL When

Complex Data Transformations Are Necessary

Choose ETL when data requires significant cleaning, transformation, and enrichment before it can be used for analytics. An example is in the healthcare industry where electronic health records from various sources need to be standardized, cleaned, and integrated into a data warehouse.

Historical Data Needs To Be Analyzed

When historical data needs to be analyzed alongside new data, ETL is crucial. In retail, sales data from previous years often requires joining with current sales data for trend analysis and forecasting.

Aggregation & Summarization Are Required

ETL is suitable when data needs to be aggregated or summarized for reporting and business intelligence purposes. For instance, a manufacturing company may use ETL to aggregate production data to generate daily, weekly, and monthly reports.

Data Quality & Consistency Are A Priority

ETL is a better choice when maintaining high data quality and consistency is crucial. In the banking sector, data extracted from different branches or departments needs to be standardized and errors need to be rectified before consolidation in a central data store.

Regulatory Compliance Is Essential

ETL should be used when strict regulatory compliance is required. In the pharmaceutical industry, data related to clinical trials and drug development must be transformed and loaded in a way that complies with data integrity and traceability regulations like 21 CFR Part 11.

Get The Most Out Of Your Data With Estuary Flow

Estuary Flow is a real-time data ingestion and ETL platform that can help businesses of all sizes streamline their data workflows and get the most out of their data. It offers a wide range of features that make it easy to extract, transform, and load data from a variety of sources into your target destination.

One of the key features of Estuary Flow is its flexibility. It can be used to build simple data pipelines, like moving data from a database to a data warehouse, or complex pipelines that involve multiple data sources and transformations. Flow also supports both batch and streaming data processing, so you can choose the approach that best suits your needs.

Another major feature of Estuary Flow is its ease of use. Its user-friendly interface makes it easy to create and manage data pipelines, even for users with no prior coding experience. Flow also offers a variety of pre-built connectors and transformations, which further reduce the time and effort required to build and deploy data pipelines.

Other key features include:

- Estuary Flow processes data in real time, so you can get insights from your data as soon as it is generated.

- It can stream processed data to analytics dashboards, like Tableau or Kibana.

- It supports a wide range of data sources, including databases, message queues, file systems, and cloud storage services.

- Flow offers a variety of data transformation capabilities, including filtering, aggregation, and joining, to clean and prepare your data for analysis.

- It is designed to be scalable and reliable so you can be confident that it can handle your data needs, no matter how large or complex they may be.

- Estuary Flow includes features like data validation, schema management and enforcement, and auditing capabilities to help you ensure the quality and governance of your data.

Conclusion

Both data ingestion and ETL have their strengths and weaknesses and the decision often comes down to balancing speed and data quality. In practice, many organizations find that a combination of data ingestion and ETL is the most effective way to meet their data needs.

So when you're trying to decide between data ingestion vs ETL, take a good look at your needs and weigh the trade-offs. This way, you can make a smart choice that fits in with your organization's data strategy.

Estuary Flow is a reliable, cloud-based platform that can collect, clean, and transform data from a variety of sources into a unified data warehouse or lake. This powerful, easy-to-use tool saves time and money and helps you make better decisions based on your data. Flow is also fully managed, so you can focus on your business instead of maintaining data infrastructure.

Try Estuary Flow for free today or contact our team for more details.

Frequently Asked Questions About Data Ingestion vs ETL

We have already gone pretty deep into explaining the differences between data ingestion and ETL. But no matter how detailed we've been, questions can still pop up. So here we are, ready to tackle those queries.

Is ETL the same as data ingestion?

ETL and data ingestion are related but not the same. Data ingestion involves collecting and importing raw data from various sources into a storage system, which can include structured, semi-structured, or unstructured data.

ETL, on the other hand, extracts data from source systems, transforms it into a structured format, and loads it into a data warehouse or database for analysis. It is a broader process that includes data ingestion but goes beyond it, making sure data is properly formatted and prepared for analysis.

Are there cost implications associated with choosing data ingestion over ETL?

Data ingestion is typically less expensive because it minimizes processing and storage costs. ETL requires additional resources for data transformation and manipulation, which raises costs through increased compute and storage needs.

If cost efficiency is a primary concern, data ingestion can be the more budget-friendly option. The trade-off is that you may sacrifice data quality and consistency, since ETL processes help clean and enhance data.

What are the 2 main types of data ingestion?

The two main types of data ingestion are batch ingestion and real-time (streaming) ingestion. Batch data ingestion involves collecting and processing data in predefined batches, often on a scheduled basis, making it suitable for historical and non-time-sensitive data.

Real-time ingestion continuously collects and processes data as it arrives, which makes it ideal for time-sensitive, up-to-the-minute information. Both methods are used in data pipelines to support a range of analytics and decision-making needs.
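The difference between the two types can be sketched with Python generators. This is a simplified model, not any platform's API: `batch_ingest` hands records off in fixed-size chunks (in practice on a schedule), while `stream_ingest` forwards each record the moment it arrives. Both function names and the `received_at` field are hypothetical.

```python
import time
from typing import Iterable, Iterator

def batch_ingest(records: list, batch_size: int) -> Iterator[list]:
    """Batch ingestion: hand records off in fixed-size chunks.

    In a real pipeline, this would typically be triggered on a schedule.
    """
    for start in range(0, len(records), batch_size):
        yield records[start:start + batch_size]

def stream_ingest(source: Iterable) -> Iterator[dict]:
    """Streaming ingestion: forward each record as soon as it arrives."""
    for record in source:
        yield {"payload": record, "received_at": time.time()}

print(list(batch_ingest([1, 2, 3, 4, 5], batch_size=2)))  # [[1, 2], [3, 4], [5]]
for event in stream_ingest(iter(["click", "scroll"])):
    print(event["payload"])
```

With streaming, each record is available downstream immediately; with batching, the last record in a batch waits until the whole batch is handed off.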

Are there specific industries where data ingestion and ETL are generally preferred over one another?

Preferences for data ingestion or ETL vary across industries.

Data Ingestion Preferred Industries

- Social Media: Real-time data ingestion is crucial for monitoring user-generated content.

- Finance: Stock market feeds and transaction data require rapid ingestion.

- IoT: Devices generating constant streams of data need efficient ingestion.

- Retail: eCommerce sites use data ingestion for real-time inventory updates.

ETL Preferred Industries

- Healthcare: Medical records, insurance claims, and patient data require complex transformation and integration.

- Marketing: Customer data from multiple sources needs cleansing and consolidation.

- Manufacturing: ETL is used for quality control and supply chain optimization.

- Education: Institutions process student data, curricula, and administrative records through ETL for reporting and analysis.