In an era of relentless data growth, the distinction between data ingestion and data integration has never been more critical. Although the two terms might seem interchangeable, they represent separate phases in the data lifecycle.

Without a clear understanding of their differences, your organization may end up swamped with raw data that it struggles to make sense of or extract real value from.

This guide explains the what, why, and how of data ingestion and data integration: what each process is, how it works, and how each one shapes the way organizations collect and use data.

What Is Data Ingestion?

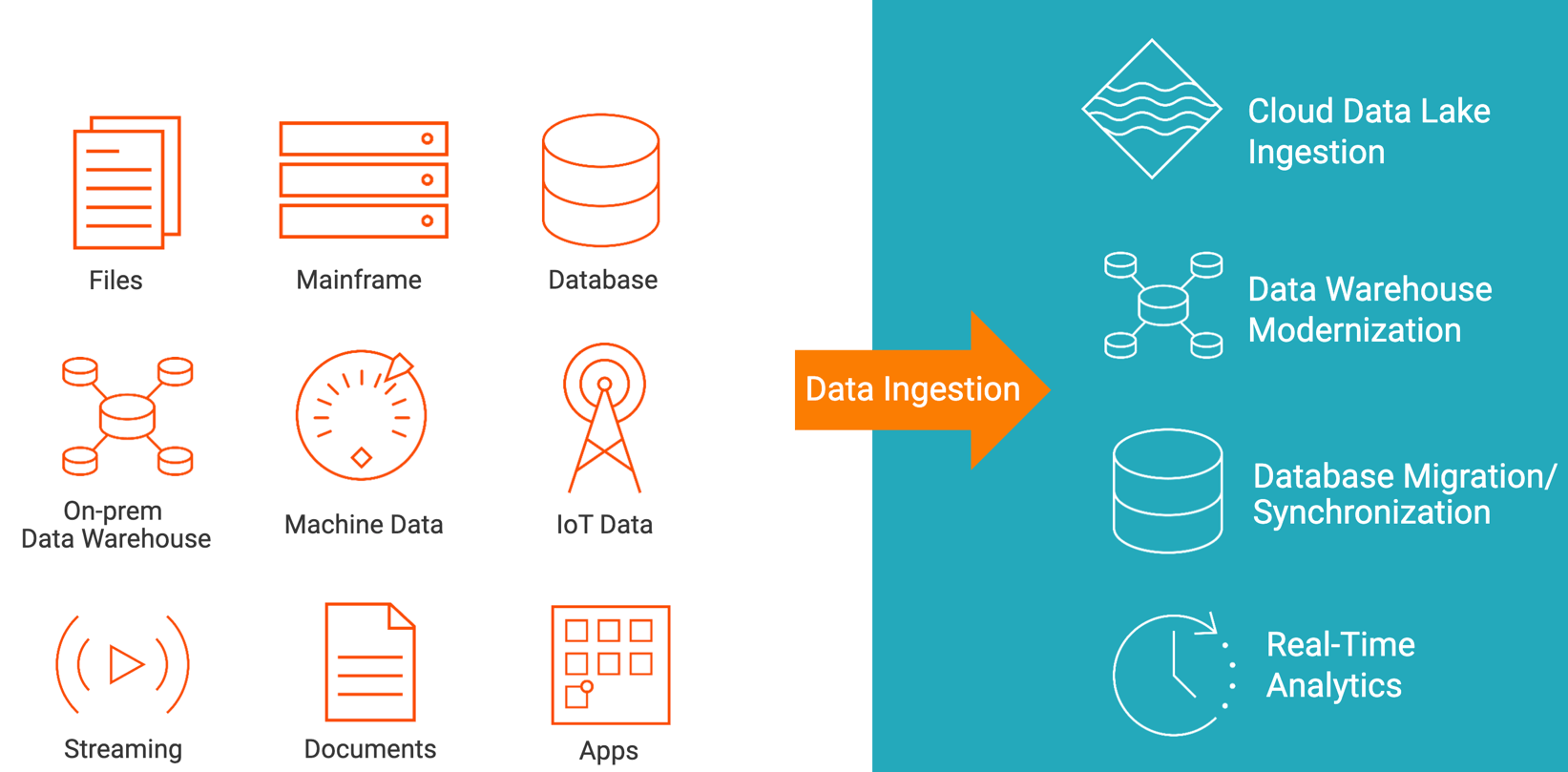

Data ingestion is the process of collecting, transferring, and importing raw data from various sources and formats into a storage or processing system, typically a database, data warehouse, or data lake. It ensures that data is loaded efficiently and reliably so that it can be managed, accessed, and used for further analysis in a structured way.

How Does Data Ingestion Work? Understanding The Data Ingestion Architecture

Data ingestion is the initial step in a data pipeline. It ensures that data from different origins is gathered efficiently and made ready for further processing. Here is a breakdown of how it works:

Data Sources

The data ingestion pipeline begins with identifying data sources. These sources can include databases, application logs, external APIs, IoT devices, and more. Each source can have different formats and structures.

Data Ingestion Layer (Extraction)

For each data source, connectors or data extraction tools are configured. These connectors are specifically designed to connect to and extract data from a particular source. Configuration includes specifying connection details like credentials and access protocols.

Data Collection Layer

The extracted data is then collected and stored temporarily, often in a buffer or a staging area. This staging area acts as a holding ground for incoming data and helps prevent data loss or corruption during the ingestion process.
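The extraction and collection layers can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: `SourceConnector`, `ListConnector` (an in-memory stand-in for a real source), and the `StagingArea` file layout are hypothetical names, not the API of any particular ingestion tool. The connector yields raw bytes, and the staging area writes each payload to disk unchanged before it is transported onward.

```python
import tempfile
from abc import ABC, abstractmethod
from pathlib import Path
from typing import Iterator


class SourceConnector(ABC):
    """Hypothetical connector interface: one implementation per source type."""

    @abstractmethod
    def extract(self) -> Iterator[bytes]:
        """Yield raw payloads from the source without modifying them."""


class ListConnector(SourceConnector):
    """Hypothetical stand-in source. A real connector would hold credentials
    and read from a database, API, or log file."""

    def __init__(self, payloads: list[bytes]) -> None:
        self.payloads = payloads

    def extract(self) -> Iterator[bytes]:
        yield from self.payloads


class StagingArea:
    """Hypothetical staging area: writes each extracted payload to its own file."""

    def __init__(self, root: Path) -> None:
        self.root = root
        self.root.mkdir(parents=True, exist_ok=True)

    def stage(self, source_name: str, connector: SourceConnector) -> list[Path]:
        staged = []
        for i, payload in enumerate(connector.extract()):
            path = self.root / f"{source_name}-{i:06d}.raw"
            path.write_bytes(payload)  # bytes are stored exactly as received
            staged.append(path)
        return staged


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        staging = StagingArea(Path(tmp) / "staging")
        files = staging.stage("orders", ListConnector([b'{"id": 1}', b'{"id": 2}']))
        print([f.name for f in files])  # ['orders-000000.raw', 'orders-000001.raw']
```

Writing one file per payload keeps the example simple; real systems usually stage to a durable log or object store instead.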

Data Transport Layer

Data is transported from the staging area to the destination. This can occur through various methods, including batch processing or real-time streaming. Data is moved securely with error-checking and retry mechanisms to ensure data integrity.
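Retry mechanisms like the ones mentioned above are commonly implemented with exponential backoff. The sketch below is illustrative only: it assumes a hypothetical `send` callable that raises Python's built-in `ConnectionError` on transient failures, and the delay values are example defaults rather than recommendations from any specific tool.

```python
import time
from typing import Callable


def transport_with_retry(
    send: Callable[[bytes], None],
    payload: bytes,
    max_attempts: int = 5,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Send one payload, retrying transient failures with exponential backoff.

    Returns the number of attempts used. Re-raises the last ConnectionError if
    every attempt fails, so the caller can leave the payload in staging.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 1
    while True:
        try:
            send(payload)  # `send` is a hypothetical transport function
            return attempt
        except ConnectionError:
            if attempt >= max_attempts:
                raise
            # Wait base_delay, then 2x, 4x, ... between attempts.
            sleep(base_delay * 2 ** (attempt - 1))
            attempt += 1


if __name__ == "__main__":
    outcomes = iter([True, True, False])  # fail twice, then succeed

    def flaky_send(payload: bytes) -> None:
        if next(outcomes):
            raise ConnectionError("temporary network error")

    delays: list[float] = []
    print(transport_with_retry(flaky_send, b"row", sleep=delays.append))  # 3
    print(delays)  # [0.5, 1.0]
```

Injecting `sleep` as a parameter keeps the function easy to test without real waiting.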

Data Format Preservation

Importantly, data ingestion retains the original format of the data. It does not perform any transformation or manipulation of the data content and ensures that it remains unchanged from the source.

Data Loading Layer (Storage)

Once data is transported to its destination, it is loaded into a storage system. This storage can be a data lake, a data warehouse, or a database, depending on the architecture of the data platform.

Metadata Management

In some cases, metadata, like source details, timestamps, and data lineage, is attached to the ingested data for tracking and governance purposes.
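One common way to combine format preservation with metadata is to wrap each payload in an envelope: the original bytes are kept untouched and the tracking fields sit alongside them. The field names below (`source`, `ingested_at`, `checksum`) are illustrative assumptions, not a standard schema.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class IngestedRecord:
    payload: bytes         # original bytes, never parsed or modified
    source: str            # where the data came from, for lineage
    ingested_at: datetime  # when it was ingested (UTC)
    checksum: str          # lets downstream steps verify the payload is intact


def ingest(payload: bytes, source: str) -> IngestedRecord:
    """Attach tracking metadata without touching the payload itself."""
    return IngestedRecord(
        payload=payload,
        source=source,
        ingested_at=datetime.now(timezone.utc),
        checksum=hashlib.sha256(payload).hexdigest(),
    )


def verify(record: IngestedRecord) -> bool:
    """True if the stored payload still matches the checksum taken at ingestion."""
    return hashlib.sha256(record.payload).hexdigest() == record.checksum
```

For example, `ingest(b"id,name\n1,Ada\n", "crm_export.csv")` stores the CSV bytes exactly as received, and `verify` can be called later to confirm they were not altered.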

What Are The Types Of Data Ingestion?

There are 3 primary types of data ingestion: batch, streaming, and hybrid. Let’s look at these data ingestion types in more detail.

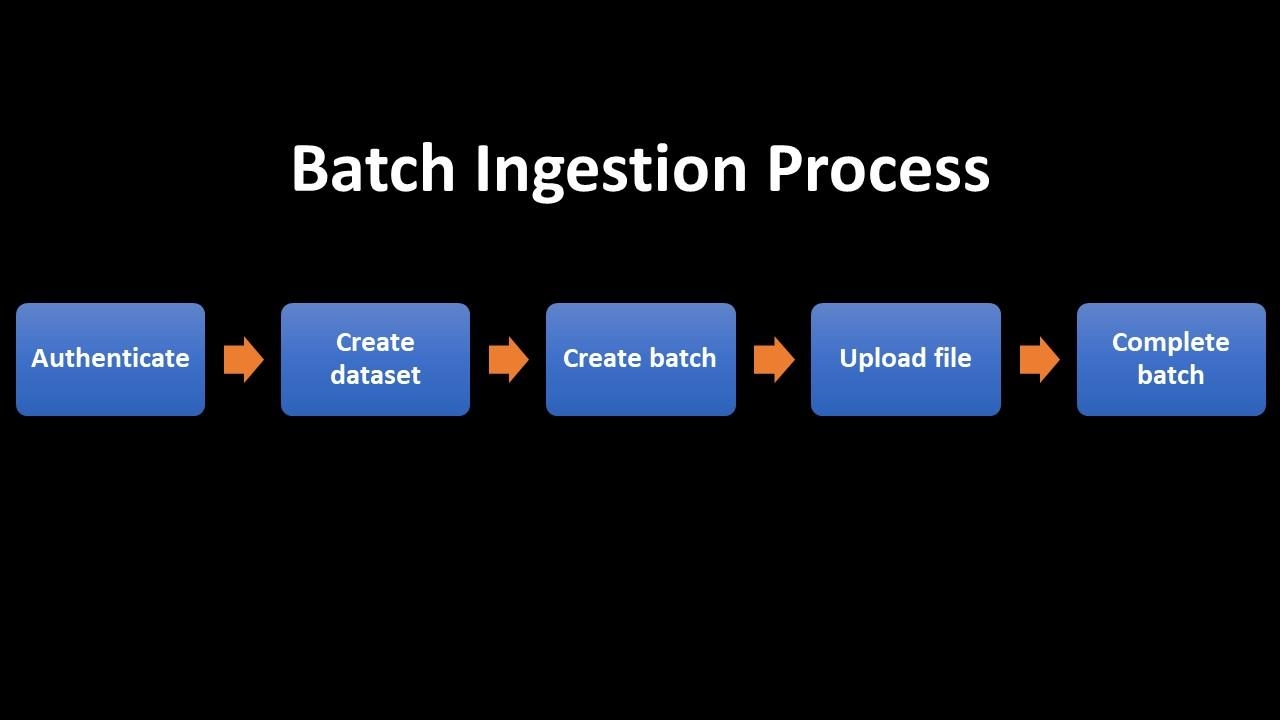

Batch Data Ingestion

Batch data ingestion is the periodic and systematic transfer of data in predefined chunks or batches. In this method, data is collected over a specific time period, like hourly, daily, or weekly, and then processed as a single unit.
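As a rough illustration of batch ingestion, the sketch below groups timestamped records into hourly batches so that each batch can be loaded as a single unit. The `(timestamp, payload)` record shape, the hourly window, and the `load_batch` placeholder are assumptions made for the example.

```python
from collections import defaultdict
from datetime import datetime


def group_into_hourly_batches(
    records: list[tuple[datetime, str]],
) -> dict[datetime, list[str]]:
    """Assign each record to the hour in which it was generated."""
    batches: dict[datetime, list[str]] = defaultdict(list)
    for ts, payload in records:
        window_start = ts.replace(minute=0, second=0, microsecond=0)
        batches[window_start].append(payload)
    return dict(sorted(batches.items()))  # process windows in time order


def load_batch(window_start: datetime, payloads: list[str]) -> None:
    # Hypothetical placeholder: a real job would bulk-insert or write a file here.
    print(f"{window_start:%Y-%m-%d %H:00}: {len(payloads)} record(s)")


if __name__ == "__main__":
    records = [
        (datetime(2024, 1, 1, 9, 5), "a"),
        (datetime(2024, 1, 1, 9, 55), "b"),
        (datetime(2024, 1, 1, 10, 1), "c"),
    ]
    for window, payloads in group_into_hourly_batches(records).items():
        load_batch(window, payloads)
```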

The data is often stored in flat files, databases, or data warehouses. Batch ingestion is efficient for handling large volumes of data and is suitable for scenarios where real-time processing is not a requirement. It is especially useful when dealing with historical or archival data.

Streaming Data Ingestion

Streaming data ingestion is a real-time method that involves the continuous and immediate transfer of data as it is generated. Data is ingested and processed in near real-time for quick analysis and decision-making.

Streaming ingestion systems often rely on message brokers or event-streaming platforms, such as Apache Kafka, to handle continuous data flows. This approach is important in applications where real-time data analysis and rapid response to events are critical, like monitoring systems, fraud detection, and IoT data processing.
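Streaming ingestion handles each event as soon as it arrives rather than waiting for a batch window. The minimal sketch below uses Python's standard-library `queue.Queue` as a stand-in for a real broker; the `None` shutdown sentinel and the `handle` callback are assumptions for illustration, not how any particular broker client works.

```python
import queue
import threading
from typing import Callable, Optional


def consume_stream(
    events: "queue.Queue[Optional[dict]]",
    handle: Callable[[dict], None],
) -> None:
    """Process events one at a time as they arrive; stop on a None sentinel."""
    while True:
        event = events.get()  # blocks until the next event is available
        if event is None:
            break
        handle(event)  # e.g. write to storage or trigger an alert


if __name__ == "__main__":
    q: "queue.Queue[Optional[dict]]" = queue.Queue()
    seen: list[dict] = []
    consumer = threading.Thread(target=consume_stream, args=(q, seen.append))
    consumer.start()
    for reading in ({"sensor": "t1", "temp": 21.5}, {"sensor": "t1", "temp": 21.7}):
        q.put(reading)  # the producer emits events as they are generated
    q.put(None)
    consumer.join()
    print(len(seen))  # 2
```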

Hybrid Data Ingestion

Hybrid data ingestion combines elements of both batch and streaming methods. It lets organizations handle data flexibly by choosing the most appropriate ingestion approach for each use case.

Hybrid ingestion is used when historical data is mixed with real-time data so that you benefit from the advantages of both batch and streaming approaches. For example, a hybrid approach can be employed in financial services to blend historical transaction data with real-time market data for investment analysis.
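A simple way to picture hybrid ingestion is a view that starts from a batch-computed historical aggregate and then applies streaming updates on top of it. The sketch below is a toy model under that assumption; the account names and amounts are made-up sample values, and production designs such as Lambda-style architectures involve considerably more machinery.

```python
from collections import Counter
from typing import Iterable


def batch_totals(history: Iterable[tuple[str, float]]) -> Counter:
    """Batch layer: total historical transaction amounts per account."""
    totals: Counter = Counter()
    for account, amount in history:
        totals[account] += amount
    return totals


class HybridView:
    """Serves historical totals plus any real-time updates received since."""

    def __init__(self, historical: Counter) -> None:
        self.totals = Counter(historical)  # copy so the batch result stays intact

    def on_event(self, account: str, amount: float) -> None:
        """Streaming layer: apply each new transaction immediately."""
        self.totals[account] += amount

    def total(self, account: str) -> float:
        return self.totals[account]  # Counter returns 0 for unknown accounts
```

For instance, `HybridView(batch_totals([("acct-1", 100.0), ("acct-1", 50.0)]))` starts at 150.0 for `acct-1`, and a streamed `on_event("acct-1", 25.0)` raises it to 175.0 without recomputing the history.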

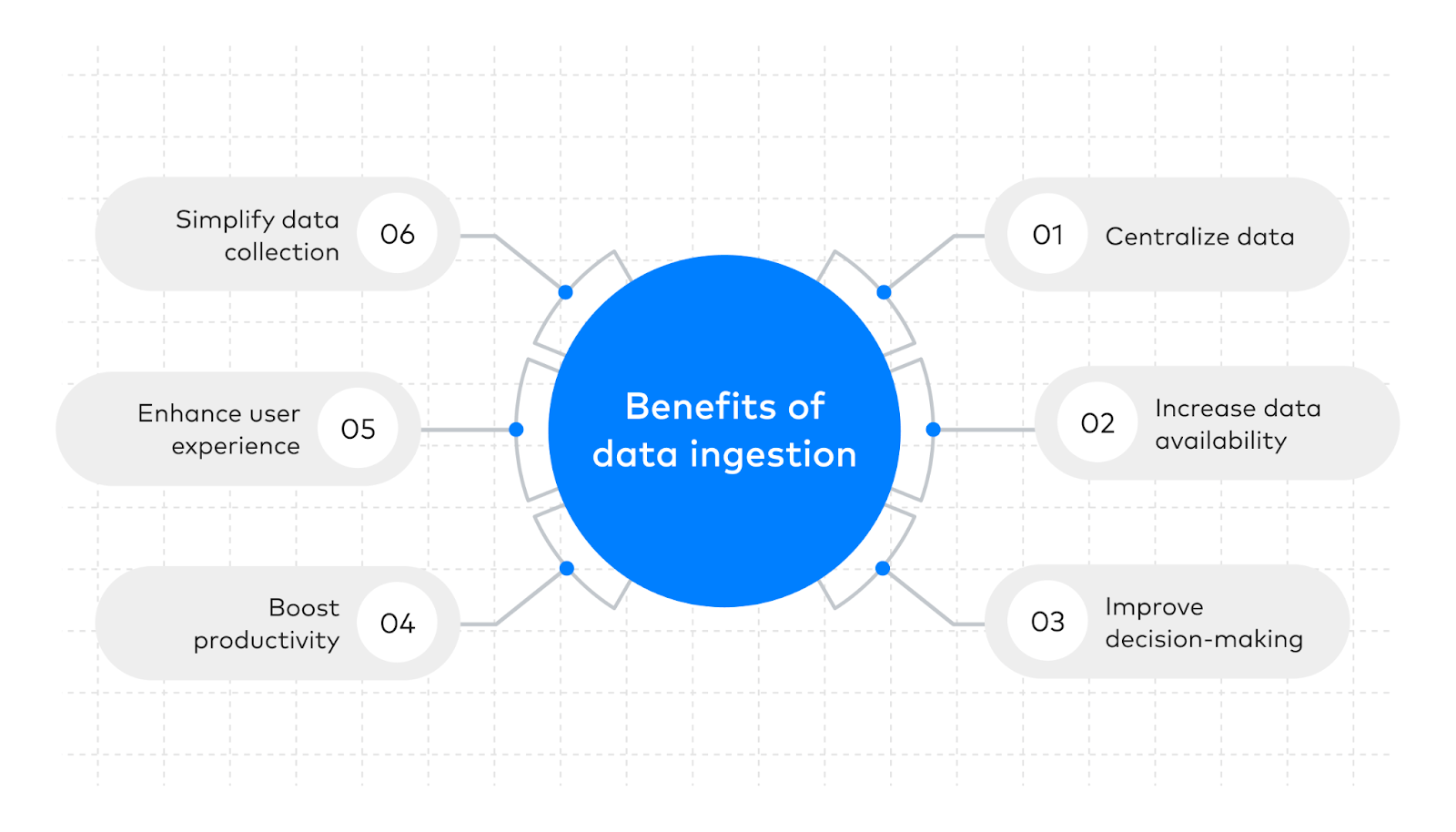

What Are The Advantages & Challenges Of Data Ingestion?

| Advantages | Challenges |
| --- | --- |
| Ingestion processes can be designed to capture and deliver data in real time and provide the most up-to-date information | Maintaining data quality and governance can be complex during data ingestion, especially when dealing with sensitive or regulated data |
| Data ingestion systems can be scaled easily to accommodate growing data volumes | Managing large volumes of data in real-time scenarios can be challenging |
| Ingestion processes help streamline the flow of data within an organization and make it easier for teams to access, share, and work with data efficiently | Storing all ingested data, even if it's not immediately needed, can result in high storage costs |
| Data ingestion pulls data from multiple sources like databases, IoT devices, APIs, and external vendors | Data ingestion processes can cause data duplication, especially when multiple data sources contain overlapping information |
| Data ingestion consolidates data from various sources into a centralized location, creating a single source of truth for analysis and reporting | |
| Ingestion pipelines can support versioning to keep track of changes and revisions to data over time | |
| Automated data ingestion reduces the risk of human error during data entry and transfer | |
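The duplication challenge listed in the table is often handled by tracking a key per record and skipping records that have already been ingested. This sketch assumes each record carries a stable `id` field shared across sources; real pipelines may need composite keys or content hashes, and the set of seen keys grows with the number of distinct records.

```python
from typing import Iterable, Iterator


def deduplicate(records: Iterable[dict], key: str = "id") -> Iterator[dict]:
    """Yield each record the first time its key is seen; drop later duplicates."""
    seen: set = set()
    for record in records:
        k = record[key]
        if k in seen:
            continue  # overlapping record from another source
        seen.add(k)
        yield record


if __name__ == "__main__":
    crm = [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Lin"}]
    billing = [{"id": 2, "name": "Lin"}, {"id": 3, "name": "Sam"}]
    print([r["id"] for r in deduplicate(crm + billing)])  # [1, 2, 3]
```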

Use Cases Of Data Ingestion

Data ingestion is used in different scenarios where it serves as the initial step in the data processing pipeline. Let’s discuss its applications in detail and understand the role it plays in ensuring efficient and timely data collection.

Log & Event Data Ingestion

In scenarios where log and event data is generated continuously, data ingestion is used to collect and store this information. Applications include server logs, application logs, network logs, and security event logs. Ingesting these logs in real time helps in proactive monitoring, troubleshooting, and security incident detection.

IoT Data Ingestion

With the proliferation of IoT devices, data ingestion is crucial for handling the enormous volume of data that sensors and devices generate. It lets organizations collect data from IoT sources like smart devices, industrial sensors, and environmental monitoring systems.

Social Media Data Ingestion

Social media platforms generate vast amounts of data, including user-generated content, comments, and interactions. Data ingestion tools can collect and store this data for sentiment analysis, marketing research, and brand monitoring.

Web Scraping & Crawling

Data ingestion is essential for web scraping and crawling, which involve extracting data from websites, APIs, and other online sources. This is particularly useful for eCommerce price monitoring, news aggregation, and competitive analysis.

Financial Data Ingestion

Financial institutions rely on data ingestion to collect market data, transaction records, and trade feeds in real time. This data is crucial for algorithmic trading, risk assessment, and financial analysis.

Healthcare Data Ingestion

In the healthcare sector, data ingestion is used to collect patient records, medical imaging data, and sensor data from wearable devices. This data can be analyzed for clinical research, remote patient monitoring, and healthcare decision support.

Supply Chain & Logistics

In the supply chain and logistics industry, data ingestion is employed to collect and process shipment, inventory, and transportation data. Real-time visibility into the movement of goods helps optimize operations and reduce delays.

Environmental Monitoring

Environmental agencies use data ingestion to collect data from remote sensors and weather stations. This data is crucial for weather forecasting, climate monitoring, and disaster management.

Streaming Media Services

Streaming media platforms ingest content like video and audio streams in real time. This ensures smooth playback for users and enables content distribution to a global audience.

Sensor Data In Smart Cities

Smart city initiatives use the data ingestion process to collect information from various sensors, including traffic cameras, air quality sensors, and smart meters. This data is employed for urban planning, traffic management, and environmental quality improvement.

How Do SaaS Tools Like Estuary Flow Help In Efficient Data Ingestion?

Estuary Flow, our fully managed service, is a UI-based platform for building real-time data pipelines. Its command-line interface gives backend engineers access to advanced data integration features, while its intuitive web application lets data analysts and other less technical users actively manage data pipelines as well.

Flow is a managed solution built on Gazette, an open-source streaming broker similar to Kafka, and it is designed to serve the needs of the entire team rather than just the engineering department.

Key features include:

- Feature-Rich: Flow provides a variety of features to help businesses manage their data pipelines, like monitoring, alerting, and error handling.

- Scalable & Reliable: Estuary Flow is designed to be scalable and reliable so it can handle even the most demanding data ingestion workloads.

- Real-Time Data Processing: It can process data in real time which means that businesses can get insights from their data as soon as it is generated.

- Pre-Built Connectors: Estuary Flow provides a variety of pre-built connectors for popular data sources and destinations, like databases, message queues, and file systems. This reduces the need for developers to build custom connectors for every data source.

What Is Data Integration?

Data integration is the process of combining data from various sources, formats, and systems to provide a unified, comprehensive view of information. It ensures that data can be seamlessly accessed, shared, and utilized across an organization for effective data analysis, reporting, and decision-making.

This integration may involve data extraction, transformation, and loading (ETL) or real-time data synchronization to create a consistent and accurate data repository.

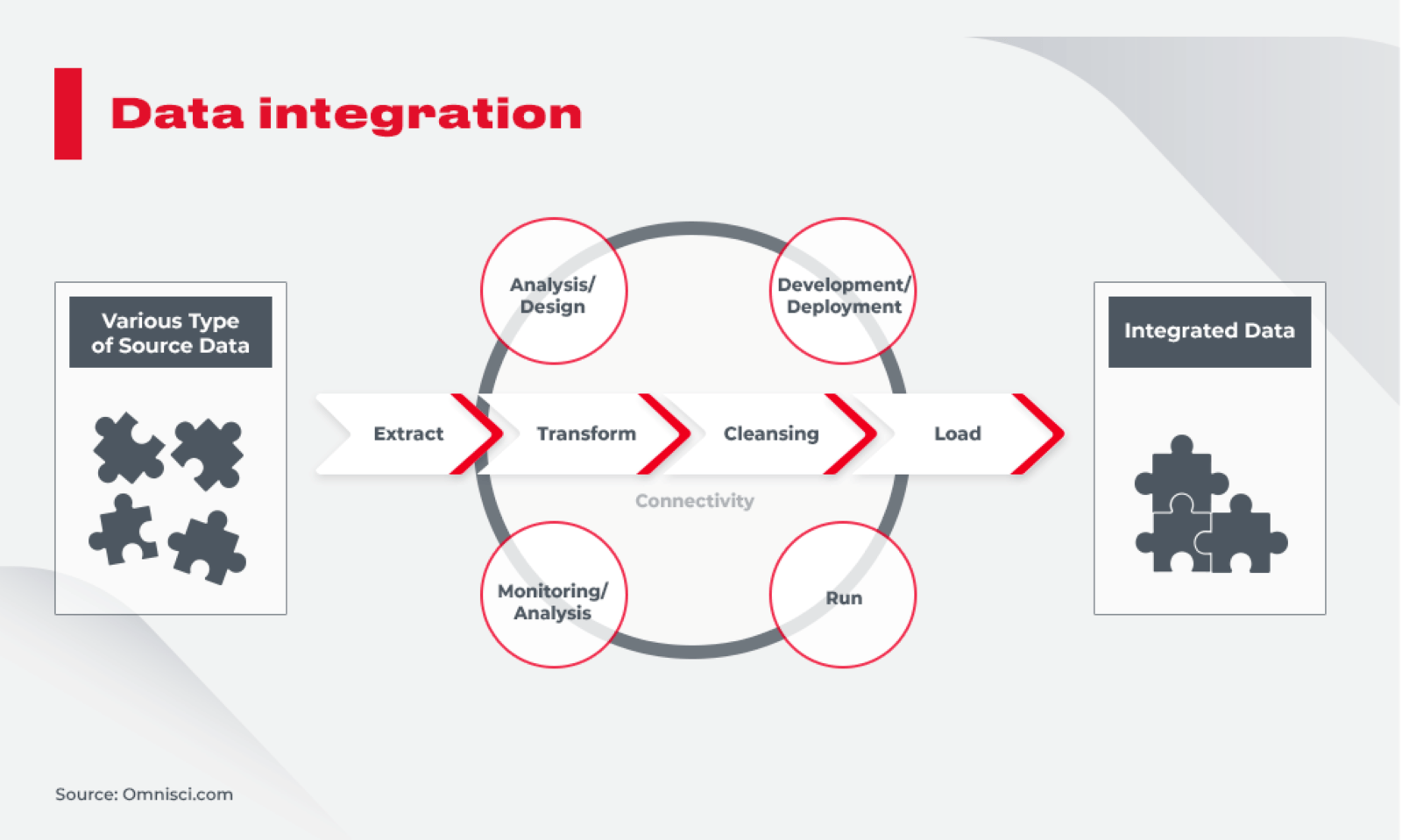

How Does Data Integration Work? Understanding The Data Integration Architecture

Data integration architecture is a structured framework for collecting and processing data from multiple sources to make it available for analysis and use. The components below show how a well-designed integration process makes data accessible and usable.

Data Extraction

The first step in the data integration process is data extraction. Data is extracted from source systems and transferred to a staging area. This process involves extracting the required data elements while maintaining data quality and consistency. Extraction methods can be batch-based or real-time, depending on the integration requirements.

Data Transformation

After extraction, the data is transformed: it is converted, cleansed, and enriched to meet the target format and quality standards. This step often includes data validation, normalization, deduplication, and the application of business rules.
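The sketch below shows what a small transformation step might look like for customer records: it cleanses whitespace, validates required fields, normalizes casing, and deduplicates by email. The field names and rules are hypothetical business rules chosen for illustration, and the code assumes field values are strings.

```python
from typing import Iterable

# Hypothetical business rule: every customer record needs these fields.
REQUIRED_FIELDS = ("email", "country")


def transform(rows: Iterable[dict]) -> tuple[list[dict], list[dict]]:
    """Return (clean_rows, rejected_rows) after cleansing, validation, and dedup."""
    clean: list[dict] = []
    rejected: list[dict] = []
    seen_emails: set[str] = set()
    for row in rows:
        # Cleanse: trim stray whitespace from every string value.
        row = {k: v.strip() if isinstance(v, str) else v for k, v in row.items()}
        # Validate: required fields must be present and non-empty.
        if any(not row.get(field) for field in REQUIRED_FIELDS):
            rejected.append(row)
            continue
        # Normalize: consistent casing so equal values compare equal.
        row["email"] = row["email"].lower()
        row["country"] = row["country"].upper()
        # Deduplicate: keep the first row for each email address.
        if row["email"] in seen_emails:
            continue
        seen_emails.add(row["email"])
        clean.append(row)
    return clean, rejected
```

Returning rejected rows separately, rather than silently dropping them, makes it easier to report data quality issues back to the source owners.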

Data Mapping

Data from different sources may not have a one-to-one relationship. Data mapping defines how data elements from different sources correspond to each other. This process helps in the reconciliation of data across various systems.
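Data mapping is often expressed as a declarative table from each source's field names to a shared target schema. In the sketch below, the source names (`crm`, `billing`) and all field names are hypothetical.

```python
# Hypothetical mappings: source field name -> unified field name.
FIELD_MAPPINGS: dict[str, dict[str, str]] = {
    "crm": {"CustomerID": "customer_id", "FullName": "name", "Email": "email"},
    "billing": {"cust_no": "customer_id", "customer_name": "name", "mail": "email"},
}


def map_record(source: str, record: dict) -> dict:
    """Rename a source record's fields to the unified schema.

    Fields without a mapping are dropped so the output matches the target model.
    """
    mapping = FIELD_MAPPINGS[source]
    return {target: record[src] for src, target in mapping.items() if src in record}
```

With these mappings, a CRM record `{"CustomerID": 7, "FullName": "Ada", "Email": "ada@example.com"}` and a billing record `{"cust_no": 7, "customer_name": "Ada", "mail": "ada@example.com"}` both map to the same unified record, which is what allows them to be reconciled.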

Data Integration

The transformed data is then combined into a unified format. This can happen at different levels: logical integration combines data from multiple sources into a single logical data model, while physical integration stores the integrated data in a single repository.

Data Storage

Integrated data is stored in a central repository, such as a data warehouse. This storage can be on-premises or in the cloud and is designed to support efficient querying and analysis. Common choices include relational (SQL) databases, NoSQL databases, and data lakes.

Data Delivery

Once data is integrated and stored, it needs to be delivered to end-users and applications. This can be done through various means, like APIs, dashboards, reports, and data services. The data delivery layer ensures that relevant data is accessible to those who need it.

Data Synchronization

In some cases, real-time or near-real-time data synchronization is required, especially for applications that demand the latest information. Data synchronization mechanisms like Change Data Capture (CDC) ensure that data is kept up-to-date across systems.
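Conceptually, CDC turns every insert, update, and delete in a source system into an ordered change event that is replayed against the target. The event shape below (`op`, `key`, `row`) is a simplified assumption; real CDC tools typically emit richer events that include before/after images and log positions.

```python
from typing import Iterable


def apply_changes(target: dict, events: Iterable[dict]) -> dict:
    """Replay ordered change events against a target table keyed by primary key."""
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            # Treating inserts as upserts means re-delivered events do not fail.
            target[key] = event["row"]
        elif op == "delete":
            target.pop(key, None)  # ignore deletes for rows already gone
        else:
            raise ValueError(f"unknown operation: {op!r}")
    return target


if __name__ == "__main__":
    replica = {1: {"status": "new"}}
    changes = [
        {"op": "update", "key": 1, "row": {"status": "shipped"}},
        {"op": "insert", "key": 2, "row": {"status": "new"}},
        {"op": "delete", "key": 1},
    ]
    print(apply_changes(replica, changes))  # {2: {'status': 'new'}}
```

Order matters: applying the same events out of sequence can leave the target in a different state, which is why CDC systems preserve the source's commit order.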

Data Quality & Monitoring

Data integration also involves continuous monitoring and data quality checks. Quality assurance processes help identify and address data discrepancies or anomalies. Monitoring ensures that the integrated data stays reliable and accurate.
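Quality checks are frequently written as simple rules evaluated on every load. The sketch below checks a minimum row count and a maximum null rate per required field; the thresholds and rules are illustrative assumptions, and dedicated data quality tools offer far richer checks built on the same idea.

```python
def check_quality(
    rows: list[dict],
    required: tuple[str, ...],
    min_rows: int = 1,
    max_null_rate: float = 0.05,
) -> list[str]:
    """Return human-readable issues; an empty list means the load passed."""
    issues: list[str] = []
    if len(rows) < min_rows:
        issues.append(f"row count {len(rows)} is below minimum {min_rows}")
    if not rows:
        return issues  # nothing to measure null rates on
    for field in required:
        # Treat missing keys, None, and empty strings as nulls.
        nulls = sum(1 for row in rows if row.get(field) in (None, ""))
        rate = nulls / len(rows)
        if rate > max_null_rate:
            issues.append(f"{field}: null rate {rate:.0%} exceeds {max_null_rate:.0%}")
    return issues


if __name__ == "__main__":
    rows = [{"id": 1, "email": "a@x.com"}, {"id": 2, "email": None}]
    print(check_quality(rows, ("id", "email")))  # ['email: null rate 50% exceeds 5%']
```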

What Are The Types Of Data Integration?

Let’s discuss 5 data integration types that will help ensure that your data is combined effectively and made available for critical business processes and decision-making.

Manual Data Integration

Manual data integration is the process of manually transferring data between different systems or applications. This is typically done by users or data analysts who extract data from one source and then load it into another system.

The manual process can be time-consuming, error-prone, and difficult to scale as it relies on human intervention to ensure data accuracy and consistency. It is suitable for small-scale data transfer needs but can become impractical for larger, more complex data integration tasks.

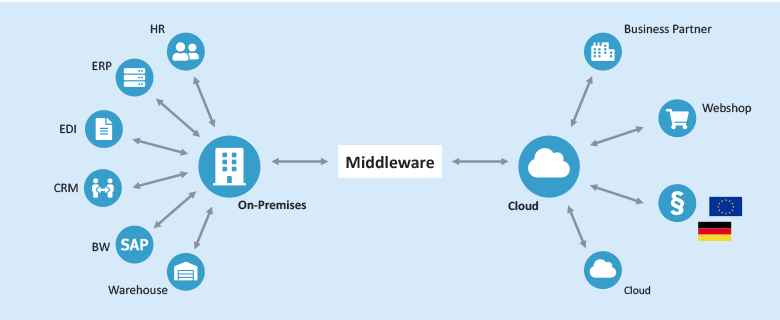

Middleware Data Integration

Middleware data integration uses middleware software, like Enterprise Service Bus (ESB) or message brokers, to connect and coordinate data exchange between multiple systems. Middleware acts as an intermediary layer that facilitates communication between applications to make sure that data flows smoothly and consistently.

When one system sends data, the middleware translates and routes it to the appropriate destination, even if the systems use different data formats or communication protocols. This type of integration provides a centralized hub for managing data flows and is particularly useful in enterprise-level applications with various systems and components.
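The translate-and-route role of middleware can be modeled as a tiny in-process hub. This is only a toy sketch of what an ESB or message broker does; the `MessageHub` class, the topic name, and the translator function are hypothetical.

```python
from collections import defaultdict
from typing import Callable

Translator = Callable[[dict], dict]
Handler = Callable[[dict], None]


class MessageHub:
    """Toy middleware: routes messages by topic and translates them per subscriber."""

    def __init__(self) -> None:
        self._routes: dict[str, list[tuple[Translator, Handler]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler,
                  translate: Translator = lambda m: m) -> None:
        """Register a receiver along with the format translation it needs."""
        self._routes[topic].append((translate, handler))

    def publish(self, topic: str, message: dict) -> int:
        """Deliver to every subscriber of the topic; return the delivery count."""
        routes = self._routes.get(topic, [])
        for translate, handler in routes:
            handler(translate(message))
        return len(routes)


if __name__ == "__main__":
    erp_inbox: list[dict] = []
    hub = MessageHub()
    # The (hypothetical) ERP expects "sku"/"qty"; the web shop sends other names.
    hub.subscribe("order.created", erp_inbox.append,
                  translate=lambda m: {"sku": m["product_id"], "qty": m["quantity"]})
    hub.publish("order.created", {"product_id": "A-1", "quantity": 2})
    print(erp_inbox)  # [{'sku': 'A-1', 'qty': 2}]
```

The sender never needs to know the receiver's format; that knowledge lives in the hub, which is the centralization benefit described above.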

Application-Based Integration

Application-based integration focuses on integrating data at the application level. It relies on the APIs that software applications expose, which allow different programs to communicate and share data in a structured manner.

You can build custom connectors or use pre-built APIs for data exchange between specific applications. This approach is ideal for integrating software applications within a particular ecosystem and it allows for the automation of data exchange processes.

Common Storage Integration

Common storage integration involves storing data in a centralized repository or database that multiple systems can access. Data is collected, transformed, and loaded into a common data store where it can be easily retrieved by various applications and services.

This approach simplifies data access and reduces the need for real-time data synchronization. Common storage integration is well-suited for scenarios where data needs to be shared and accessed by multiple applications within an organization.
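Common storage integration can be illustrated with a shared database that one process writes to and others read from. The sketch uses Python's built-in `sqlite3` module with an in-memory database as a stand-in for a real warehouse; the `customers` table and the writer and reader roles are hypothetical.

```python
import sqlite3


def create_shared_store() -> sqlite3.Connection:
    """Stand-in for a shared warehouse: one table several applications use."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, region TEXT)"
    )
    return conn


def load_from_crm(conn: sqlite3.Connection, rows: list[tuple[int, str, str]]) -> None:
    """Writer: an integration job loads transformed CRM data into the shared store."""
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT OR REPLACE INTO customers (id, name, region) VALUES (?, ?, ?)", rows
        )


def customers_per_region(conn: sqlite3.Connection) -> dict[str, int]:
    """Reader: a reporting app queries the same table with no link to the CRM."""
    cur = conn.execute(
        "SELECT region, COUNT(*) FROM customers GROUP BY region ORDER BY region"
    )
    return dict(cur.fetchall())


if __name__ == "__main__":
    conn = create_shared_store()
    load_from_crm(conn, [(1, "Ada", "EU"), (2, "Lin", "APAC"), (3, "Sam", "EU")])
    print(customers_per_region(conn))  # {'APAC': 1, 'EU': 2}
```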

Uniform Data Access Integration

Uniform data access integration keeps the source data in its original location while providing a consistent data access format or interface. It creates a unified layer that abstracts the underlying data sources so they can all be queried in the same way.

Uniform data access integration lets applications and users interact with data without needing to know the details of the underlying storage systems, formats, or locations. Because the data stays where it is, this approach avoids extensive data movement, which can be resource-intensive and time-consuming.
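A minimal way to picture uniform data access is a facade that answers the same query against different backends while leaving the data where it is. The sketch assumes two hypothetical sources, a SQLite table and raw CSV text, and a single `find_customer` method as the uniform interface; real data virtualization or federated query engines push much more of the work down to each source.

```python
import csv
import io
import sqlite3
from typing import Optional, Protocol


class CustomerSource(Protocol):
    """Hypothetical uniform interface every backend adapter implements."""

    def find_customer(self, customer_id: str) -> Optional[dict]: ...


class SqliteSource:
    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn  # data stays in the database

    def find_customer(self, customer_id: str) -> Optional[dict]:
        row = self.conn.execute(
            "SELECT id, name FROM customers WHERE id = ?", (customer_id,)
        ).fetchone()
        return {"id": row[0], "name": row[1]} if row else None


class CsvSource:
    def __init__(self, text: str) -> None:
        self.text = text  # data stays in its original CSV form

    def find_customer(self, customer_id: str) -> Optional[dict]:
        for row in csv.DictReader(io.StringIO(self.text)):
            if row["id"] == customer_id:
                return {"id": row["id"], "name": row["name"]}
        return None


class UnifiedAccess:
    """Queries each source in place and returns the first match."""

    def __init__(self, sources: list[CustomerSource]) -> None:
        self.sources = sources

    def find_customer(self, customer_id: str) -> Optional[dict]:
        for source in self.sources:
            found = source.find_customer(customer_id)
            if found is not None:
                return found
        return None
```

Callers only ever use `UnifiedAccess.find_customer`, so a new backend can be added by writing one more adapter without moving any data.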

What Are The Advantages & Challenges Of Data Integration?

| Advantages | Challenges |
| --- | --- |
| Data integration provides a holistic view of data for more informed, data-driven decision-making | Ensuring data security and privacy while integrating data from different sources is difficult |
| Consolidating data helps reduce discrepancies and errors and improves data accuracy | Maintaining consistent data over time, especially when historical data is integrated with new data, can be challenging |
| Integrating data sources provides real-time or near-real-time access to data, which is vital for businesses that require up-to-the-minute information | Adapting to changes in data sources, like modifications to data schemas, APIs, or databases, can disrupt data integration processes |
| Data integration helps maintain data consistency across the organization so that everyone uses the same, reliable data | |
| Eliminating data silos and duplicated effort reduces data management and storage costs | |
| Integrating customer data from various sources provides a comprehensive view of customer interactions, which helps improve customer service and relationship management | |
| Data integration ensures that data is backed up and available from multiple sources, which improves disaster recovery capabilities | |
| Data integration standardizes, cleanses, and validates data from various sources to ensure accuracy and reliability | |

10 Use Cases Of Data Integration

Let's now discuss how data integration helps organizations across different sectors bring together data from various sources and formats to provide a unified, consistent view of their data.

Master Data Management (MDM)

Data integration plays a crucial role in MDM by ensuring that master data entities (e.g., customers, products, employees) are consistent and accurate across the organization. MDM solutions often use data integration to propagate these master records to other systems.

Supply Chain Management

Data integration is vital in supply chain management. It connects data from suppliers, logistics, inventory, and demand forecasting to help businesses optimize their supply chain, reduce costs, and improve delivery accuracy.

Healthcare Integration

Healthcare organizations need to integrate patient data from various sources, like electronic health records, laboratory reports, and billing systems. Data integration ensures that medical professionals have access to complete and accurate patient records for better decision-making.

Financial Services

Financial institutions integrate data from multiple sources for risk management, fraud detection, and compliance. This includes combining data from transactions, customer records, and external data sources to identify suspicious activities.

eCommerce & Personalization

eCommerce companies often use data integration to combine user behavior data, inventory data, and transaction data. This helps them personalize recommendations and streamline the shopping experience.

Manufacturing & IoT

In manufacturing, data integration brings together data from sensors and equipment to monitor machine health, production quality, and supply chain operations. This data can be used to optimize processes and reduce downtime.

Human Resources

Data integration can help HR departments by consolidating employee data from various systems such as payroll, time tracking, and benefits. This simplifies onboarding, payroll processing, and performance evaluations.

Government & Public Sector

Government agencies use data integration to combine data from multiple sources, like census data, tax records, and social services, to make informed policy decisions, allocate resources, and improve citizen services.

Customer Insights & Marketing

Marketing teams often use data integration to bring together data from sources like CRM systems, web analytics, and social media. This integrated data provides a holistic view of customer behavior and preferences which can inform marketing strategies.

Cross-Channel Sales

Retailers use data integration to let customers shop seamlessly across channels, whether online, in-store, or on mobile. It gives customers a consistent experience and access to real-time inventory information.

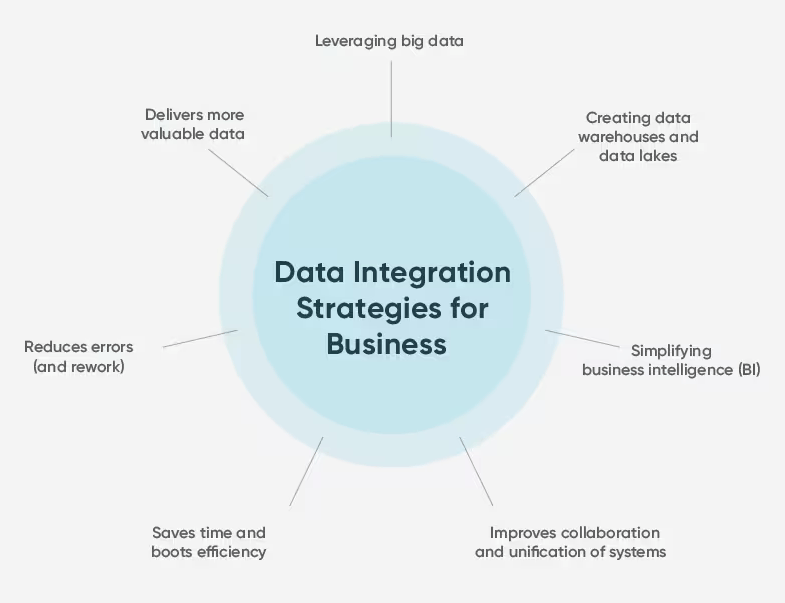

How Can You Optimize Your Data Integration Efforts?

Estuary Flow is an advanced DataOps tool for creating and managing streaming data pipelines. You can work with it through a user-friendly web UI or a CLI, and it also offers programmatic access for integrating and white-labeling pipelines.

However, Flow goes beyond moving data. It also helps keep your evolving data accurate and consistent: it includes built-in testing capabilities such as unit tests, and it uses exactly-once semantics to maintain transactional consistency.

Let’s discuss its key features:

- Global Schema Queries: Estuary Flow provides global schema queries for efficient retrieval of integrated data from multiple sources.

- Security Protocols: It offers several security protocols to protect your data, including encryption, access control, and audit logging.

- Real-Time Data Integration: Estuary Flow lets you integrate data in real time which means that you always have the most up-to-date data available.

- Real-Time Change Data Capture: It offers real-time CDC so you can integrate data into your data warehouse or data visualization tool as soon as it changes in the source system.

- Powerful Transformations: Flow uses stable micro-transactions to process data which ensures that your data is always consistent. It also offers powerful transformations that can be used to clean, filter, and aggregate your data.

Conclusion

Data ingestion and data integration might look similar, but they play different roles in managing and using data effectively. Whether for strategic planning, market analysis, or operational improvements, organizations rely heavily on accurate, integrated data. Confusing the two processes can lead to faulty insights and costly mistakes that undermine the value of your data.

Estuary Flow is a powerful, user-friendly platform that makes data integration and ingestion a breeze. With Flow, you can easily connect to a wide range of data sources, transform your data into a unified format, and load it into your target destination. It is built on a modern, scalable architecture that can handle even the most complex data pipelines.

Try Estuary Flow for free and see how it can help you improve your data workflow. You can also contact our team for more information about data ingestion and data integration.

Frequently Asked Questions About Data Ingestion vs Data Integration

Data ingestion and data integration are closely related concepts, so it's natural that they raise a host of questions. Let’s address some of the most frequently asked questions about data ingestion vs. data integration.

Can data ingestion and data integration be performed separately or are they typically part of the same workflow?

Data ingestion and data integration are different processes but often part of the same workflow. Ingestion involves collecting and importing raw data into a storage system, while integration focuses on combining, transforming, and harmonizing data from various sources.

Separation is possible for specific use cases, but the two usually work together, with ingestion as the initial step feeding data into an integration process. Integration ensures data quality and consistency, making it ready for analysis and reporting. Together, they form a seamless data pipeline that optimizes the value of diverse data sources in analytics, business intelligence (BI), and decision-making.

What is the difference between data ingestion and ETL?

Data ingestion and ETL (Extract, Transform, Load) are important processes in data management. Data ingestion is the initial step where raw data is collected and brought into a data storage system, often in its original format.

ETL, on the other hand, is a broader process that prepares data for analysis and often builds on ingestion. It involves three phases:

- Extract, where data is gathered from various sources

- Transform, where it's cleaned, normalized, and structured for analysis

- Load, where the processed data is loaded into a destination, like a data warehouse
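The three ETL phases map naturally onto three functions. This sketch is illustrative only: it uses CSV text as a hypothetical source and an in-memory SQLite table as the destination, and the column names and cleaning rules are assumptions.

```python
import csv
import io
import sqlite3


def extract(csv_text: str) -> list[dict]:
    """Extract: read raw rows from the source as-is."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows: list[dict]) -> list[tuple[str, float]]:
    """Transform: trim names, convert amounts to numbers, drop invalid rows."""
    out = []
    for row in rows:
        name = (row.get("customer") or "").strip()
        try:
            amount = float(row.get("amount"))
        except (TypeError, ValueError):
            continue  # skip rows whose amount is missing or not numeric
        if name:
            out.append((name, amount))
    return out


def load(conn: sqlite3.Connection, rows: list[tuple[str, float]]) -> None:
    """Load: write the processed rows into the destination table."""
    with conn:  # commit once all rows are inserted
        conn.execute("CREATE TABLE IF NOT EXISTS sales (customer TEXT, amount REAL)")
        conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load(conn, transform(extract("customer,amount\n Ada ,12.5\nLin,n/a\n,3\n")))
    print(conn.execute("SELECT customer, amount FROM sales").fetchall())
```

Keeping each phase a separate function makes it easy to swap the source or destination without touching the transformation rules.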

While data ingestion is about acquiring data, ETL focuses on preparing it for analysis, making data more accessible and meaningful for decision-making. Tools like Estuary Flow make use of near real-time ETL to get data from various sources, transform it, and load it into the destination system as close to real-time as possible, ensuring that businesses have access to up-to-date and actionable insights.

Are there specific scenarios where data ingestion is preferred over data integration or vice versa?

Data ingestion is preferred when immediate data access is crucial, as in real-time analytics for website traffic or sensor data. It's also well suited to log file collection, data streaming, and raw data storage, such as in data lakes.

Data integration is preferable when you need to merge and refine data for reporting or business intelligence. For instance, in customer relationship management (CRM), combining data from various touchpoints (email, social media, sales) is vital. Similarly, financial analysis, where you merge data from different accounts and systems for a comprehensive overview, benefits from data integration.

Can data ingestion and data integration be accomplished in real-time or are there differences in their latency requirements?

Both data ingestion and data integration can be performed in real time, but their latency requirements differ. Data ingestion typically focuses on collecting and storing data as it arrives, aiming to capture it as close to real time as possible.

Data integration combines and transforms data from various sources into a unified format or structure, so it may tolerate somewhat higher latency depending on the complexity of the integration process. In short, data ingestion prioritizes speed and immediacy, while data integration may allow for a slightly longer processing time.