I love recursive acronyms in software - like GNU’s Not Unix, or PHP Hypertext Processor - because they help you remember what’s important. For me the message with CDC is to get CDC Done Correctly (CDC), because there are so many examples of CDC NOT done correctly that you need to know the difference. To get CDC right, you need to know how CDC is supposed to work normally, and the best practices that keep it running smoothly.

What is Change Data Capture

Change Data Capture (CDC) is the process of capturing data changes made to a database as they occur and sending them to targets.

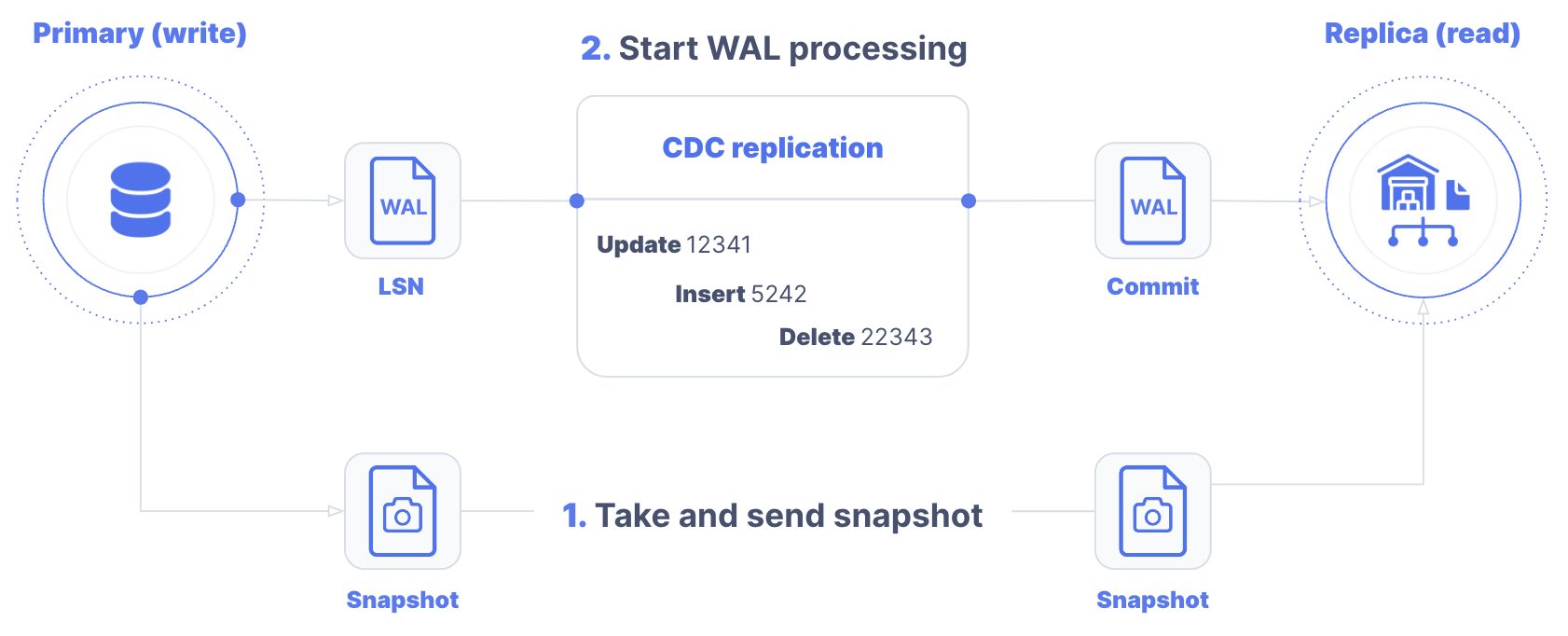

For most people CDC really refers to log-based CDC, which is the most efficient type of CDC. This is where you capture changes from the write-ahead log (WAL) that’s used to “write ahead” as part of the transactional commit for inserts, updates, or deletes before you write to database storage. Writing to a separate file is the fastest and lowest-load way to save state in case the full database write fails.

Note: Each system has different terms, like the transaction log. I’m going to refer to it as the WAL for simplicity.

If you understood that, read on. Otherwise, if you first want an intro to CDC concepts you can go here first before continuing.

By the way, the most important words to remember from the definition are “as they occur” because CDC is designed to operate in real-time.

Why CDC is So Important

Most applications are not designed to support analytics; they focus on handling transactions. They often only store the current state, and not the full history of data changes. Inserts, updates, and deletes overwrite data because all that’s needed for transactions is the current value.

Before CDC, if you wanted the historical data for analytics from the tables, your only real option was to recreate it yourself. Your options depended on what additional information the application stored.

- Periodic full extracts: this gives you an accurate view each time. But it is a heavy load on the source and you need to extract for each time period you need. You also miss multiple updates to the same row within a period.

- Incremental extracts: With a table, you can only do incremental extracts if you can tell which changes happened since the last extract, which is usually done with timestamps or a change log. You also need to track deletes if the row no longer exists. You may have heard the term “soft” delete, where a row also has a “Deleted” column to track for deletion. Otherwise you need to compare a full extract to its previous complete state.

With CDC, you have access to every change in the WAL – including inserts, updates, and deletes. If you take an initial snapshot from a certain time and then read all subsequent changes from the WAL, you capture the most changes possible.

In general, CDC is the best method for capturing data for analytics or other non-transaction use cases. CDC is often the only way to efficiently extract data changes without modifying an application. It was also designed to provide this information in the most efficient way possible and with the lowest latency for real-time replication.

When to Use CDC, and When Not To

If you need the change data for most time periods, CDC seems like the best approach, the default option.

There are, however, a few good reasons why you wouldn’t use log-based CDC.

- You only need the data once: Don’t bother setting up CDC if it’s a one-time query.

- You don’t need a lot of data: If you don’t need a lot of data, and you don’t care about changes in data like deletes, sometimes a simple query works. But if others need the data, you should make that joint case.

- You can’t get access to the WAL: There are good reasons for this, mostly around security and data access. Be patient. Build your case and make your arguments. A good CDC technology should also allow you to filter by table and column, which will help with security.

- You can’t get the right events: This may seem weird, but it does happen. The data doesn’t always represent the business logic. Sometimes you have to recreate a higher level even as a series of data changes. In this case you may need to capture that event another way. But for the rest of the data, CDC still makes sense.

- Your vendor doesn’t support CDC: or has limited or no CDC support for a particular source.

If you’re worried about the load CDC adds, don’t be. CDC is the lowest-load way to get data from a database. If you’re already replicating from the master database to a (read) replica, you could consider using the replica as the source if the additional latency isn’t a big issue.

NOTE: You may not have the option to use CDC with a given source. Only a few of any given ELT vendor’s source connectors are CDC-based. Make sure you understand each connector you’re planning on using before making a decision on a vendor.

How CDC Should Work

CDC is a proven approach for database replication. When done right as part of a data pipeline it is the most efficient, lowest latency, and lowest-load way to extract data. It’s often the only way to extract all change data.

But in the real world there are a lot of challenges with CDC in data pipelines. Challenges with CDC is one of the most common reasons companies move from another data integration vendor to Estuary.

What are the major challenges you need to watch out for, and what does CDC done correctly (CDC) look like?

How to Take The Initial Snapshot

The first real step of CDC is to get an initial snapshot. That’s because you usually don’t just want the change data; you want all the data.

This is the first big challenge with CDC. Many CDC implementations in data pipelines first take a full “snapshot”. This isn’t a snapshot the way a database takes a snapshot. It’s usually a series of SELECT statements for each table. Some vendors perform table-level locks that block transactions, which adds latency to transactions. Sometimes the CDC implementations also delay WAL reads until the snapshot is taken or loaded, which adds latency and increases the WAL size, further loading the database.

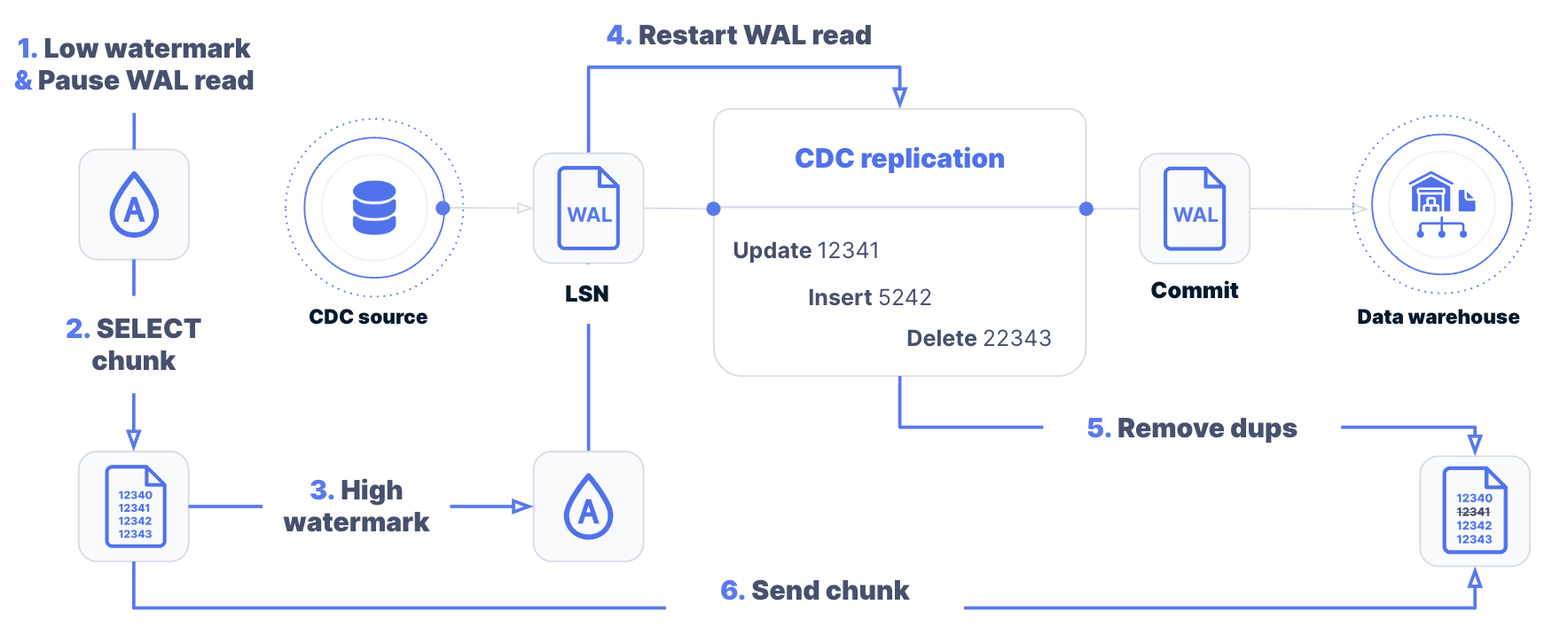

The right approach has been used for close to a decade now; incremental snapshots. For more you can read the DBLog framework Netflix implemented (link here.) The simple way to think about it is instead of taking one big snapshot, you break up your tables into many small unique chunks and replicate one chunk at a time. Usually rows are unique by how they’re written to storage, which helps you maximize I/O.

The DBLog implementation basically as follows:

- Pause WAL processing briefly and keep track of the low watermark timestamp where you paused WAL reads.

- Select the next unique chunk of a table for an incremental snapshot (based on key or even better write order.)

- When you’ve gotten the chunk, write the high watermark timestamp marking the end of the WAL read pause

- Resume WAL processing.

- In between the low and high watermarks, remove any duplicate rows from the chunk that also appear in the WAL as you process the WAL between the low and high watermarks, because the WAL writes happened after the SELECT statement, so those specific rows in the chunk are outdated.

- When you reach the high watermark write the modified chunk, and then continue to process the WAL. here it’s important to write the modified chunk before continuing to process the WAL because the chunk is older than the next part of the WAL.

It’s important to pick short intervals so that the WAL doesn’t become too long, but long enough to optimize network utilization. The intervals and size of the chunks may be optimized by vendors based on experience, and there is still a big difference in performance and scalability here. So it’s important to test each vendor for both latency and scalability, including these parameters, for varying types of data, especially where rows might be very large.

While the DBLog approach is efficient, you can make it even better by never pausing the WAL read. Estuary has implemented a similar approach to prioritize WAL reads first.

- Immediately start reading the WAL. Do not pause.

- Keep track of the low watermark time where you SELECT the next chunk of a table.

- When the SELECT is done, write the high watermark timestamp marking the end of the WAL read pause.

- For any rows in the chunk that also exist in the WAL between the low and high watermarks, remove any colliding rows from the chunk that also appear in the WAL.

- Then send the chunk and repeat.

NOTE: Estuary Flow writes all change data into collections, which are append-only durable logs similar to a WAL. Similar to how replication works, Estuary Flow transactionally guarantees change data, including the modified chunks. This mechanism helps ensure WAL and chunk data are merged properly without needing to pause WAL processing during the initial SELECT or merge at the end.

NOTE: Most SELECT statements do not require locking on their own to ensure data consistency. By default most implement a READ_COMMITTED isolation level, which eliminates dirty reads. But there are still reasons why technologies perform table locks. For example, Debezium performs table locks to prevent schema changes in PostgreSQL during a snapshot. Make sure you understand what locks are occurring during snapshots.

Incremental snapshotting helps fix the second challenge with CDC; delaying WAL processing.

How to Process the WAL

Delaying WAL processing is generally bad. The reason is most databases do not keep transaction log entries for long. If you search for PostgreSQL WAL issues, you will see why. Postgres holds onto the WAL as long as any replication slot (managing destination state) needs to still read the WAL as marked by the log sequence number (LSN). When the WAL size exceeds the max WAL size set (a soft limit) Postgres can start to free up old WAL files even if replication slots still want them. This leads to data loss in the destination, and should trigger a full refresh.

If you don’t have a max WAL size set, it can result in the WAL file growing until Postgres runs out of resources. This has been known to cause crashes.

Part of the reason people originally moved from batch to CDC was because existing batch extraction was taking longer than their permitted batch window. The same can happen with CDC executed in batch intervals. It’s possible to get into a situation under heavier loads where you can’t process the WAL fast enough within the intended batch interval windows defined by the ELT vendor. This can lead to the WAL filling up and require a full refresh or even lead to a source failure.

NOTE: While most databases do not keep their WAL for a long time, some can. Oracle Database archives its log like others. But unlike others, it allows replication to use it for CDC. Also, if your database load isn’t high, you may be able to delay WAL reads and do full snapshots. While it might be a risk during peak loads, or as your data volumes grow, the most important thing is that you understand how it works, and that you monitor it closely.

Because of the risks of delayed WAL (transaction log) processing, Estuary processes the WAL immediately, and prioritizes WAL processing over other activities including incremental snapshotting.

Most ELT vendors, on the other hand, delay WAL processing. It’s for a good reason from their perspective, but it’s not good for CDC. This is done partly because of the next challenge; mixing real-time and batch data movement in a pipeline.

How to Support Batch + Real-time Data

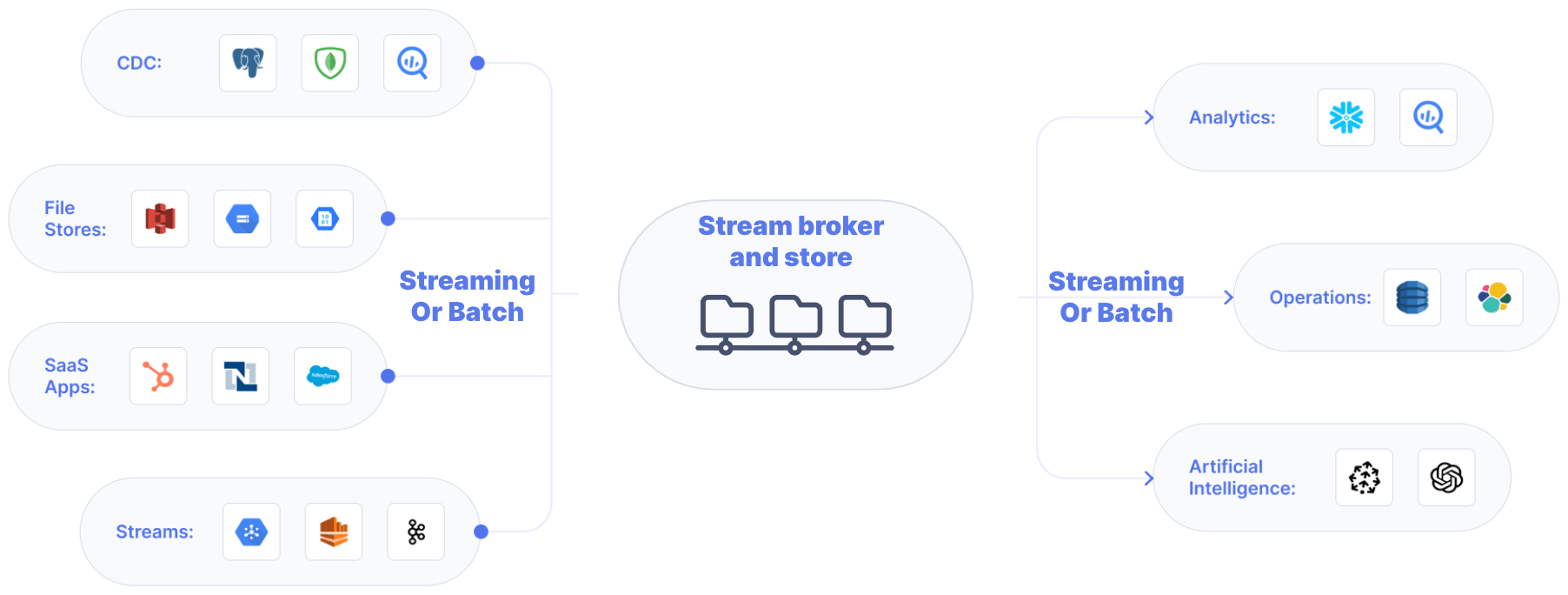

The reality of just about every data integration team is that some data sources, not just legacy systems but even modern SaaS vendors, require batch extraction. The same is true for some targets. Even the majority of data warehouse deployments are still loaded in batch mode.

This is why almost all ELT vendors are batch-based, not real-time. Most ELT vendors need to extract and load at the same speed. While there is some row-by-row processing that happens while moving data to convert from source to target formats, it’s not done using a buffer or staging area, but instead mid-stream as part of replication. There is no store where you can stream into it from a source, and then later load in batch to a destination. Since each ELT vendor needs to support batch by default, everything else needs to be batch as well.

The challenge is you need to support real-time as well:

- Support real-time analytics by streaming from real-time sources to a destination that can also support sub-second query (or search) times.

- Enrich real-time data with historical data mid-stream using ETL, ELT in a low-latency destination, or even incremental/real-time materialized views in a destination.

- Integrate a real-time source, including any CDC source or Kafka, with a batch data warehouse because CDC should be real-time but the destination is batch.

Many destinations have added direct Kafka ingestion support, which often includes buffering, but you get the idea. The moment support for a real-time source is needed for any reason, you need to support real-time and batch modes together in the same data pipeline.

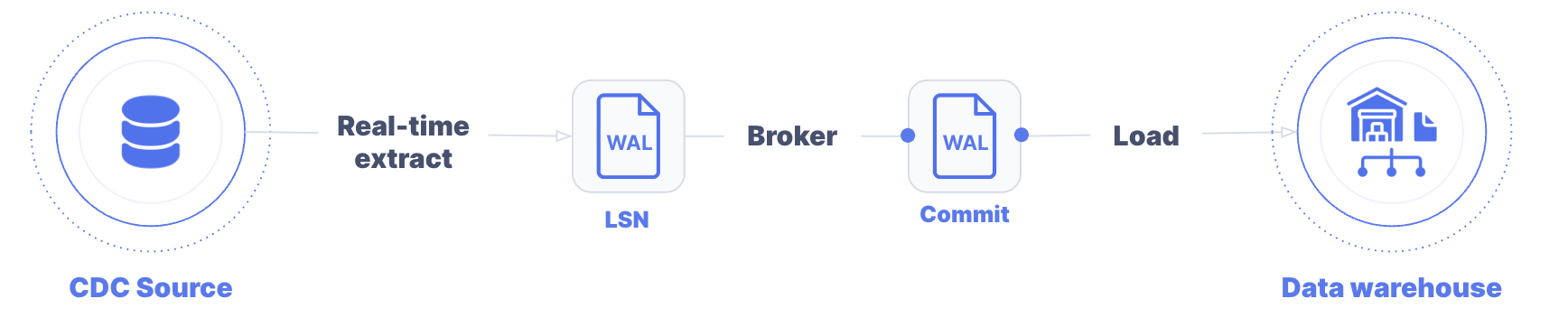

The best and proven way to support real-time and batch together is to have a durable store or staging area. It allows you to batch up a stream, or stream a store. A broker architecture, like Kafka or Gazette, is the most proven way to support streaming from real-time sources to real-time targets. Kafka is used by some ELT vendors to support streaming to batch by “batch” consuming from Kafka topics or queues in intervals.

Every ELT vendor could add a real-time data pipeline to their existing batch architecture, and let the customer choose the best target for their needs. So long as they manage real-time and batch replication side-by-side and let the customer handle all transformations inside the target, it could work.

Except it doesn’t solve the next challenge: supporting multiple destinations.

How to Support Multiple Destinations

Most ELT deployments have been used for one purpose; to load data into a cloud data warehouse, data lake, or lakehouse for analytics. But there are other types of analytics and automation.

Increasingly, technologies like Druid, Pinot, Clickhouse, Elasticsearch, and others are being used to support decision making in operations by employees. Most deployments need to support second-level response times. Many of these deployments also require real-time (sub-second latency) data.

Then there’s AI. While training generative AI or ML models can be done in batch, some retrieval augmented training (RAG) and a lot of model execution requires real-time (sub-second) data, and more importantly real-time (sub-second) responses. You’re not going to wait a long time for a chat bot, a support response, or a product recommendation.

The challenge happens when operational analytics or AI need the same data you are using for historical analytics. You end up having multiple destinations. If you decide to use different data pipelines you end up:

- Extracting the data multiple times, which adds a load on each source

- Doing the transformation work multiple times, which can also lead to data inconsistencies

- Spending more time delivering projects, because you’re not reusing work

- Spending more on vendors and on maintaining multiple pipelines

You need a data pipeline that can not only support batch or real-time sources and targets. You need one that can also support a many-to-many architecture with multiple targets, and support end-to-end real-time and/or batch.

You could build on top of Kafka the way Debezium has. But a real-time data pipeline also requires:

- Stream storage and “replay” in batch or stream mode. Kafka does not keep messages indefinitely or provide replay services that you could use for backfilling data.

- A common data model and format for translation from sources to targets, and storage, one that allows data engineers to work with schema directly when modeling data movement. Kafka only cares about messages.

- Support for transforms, preferable using SQL for analytics targets, Python for AI and ML projects, and JavaScript for customer-facing projects.

- Exactly once delivery. While Debezium doesn’t currently guarantee exactly once delivery (only at least once) Kafka now does. You need the guarantee mid-stream (for ETL) and for each destination.

One important feature of stream storage is to minimize the load on each source by extracting exactly and only once. No matter how many destinations need the data, or how many times, you should only extract that data once. It becomes the responsibility of the broker and store to ensure exactly-once delivery to each destination when the data is needed and whether it’s real-time or batch.

How to Support ELT + ETL

Why do you need ETL? Isn’t ELT enough?

The resurgence in ETL is partly because of the need for real-time. Doing your transformations as part of ELT works well with a single destination such as a data warehouse and batch mode. If specific transformations are only needed in a single destination it can make good sense to run them in that destination. But for real-time destinations, or for transformations that are needed for many targets, ETL makes more sense because you can create and deploy the same transformation once for all the targets. It’s not only less work. It also helps ensure data is consistent across the targets.

It has become more common to be able to use SQL, Python, even JavaScript for streaming transforms. Streaming transforms that either do not need state, or can hold state and process one row at a time are much lower latency than transformations built using SQL queries in databases.

Implementing streaming transformations, or compute in general, is more straightforward on a message queue or other stream store. You consume from one queue, topic, or store, compute, and publish to another. You need to pick the best compute engines, and integrate them to implement features like exactly-once consistency. Supporting ELT is easier. You might build a UI to simplify using something like dbt. At the very least, you should be able to trigger dbt or SQL once the data arrives.

Estuary to date has implemented SQL and TypeScript support for transformations with exactly-once guaranteed delivery from source to destination collections (Python support is planned.) Collections are a real-time data lake of append-only JSON documents containing change data. The transformations are called derivations in Estuary (see Estuary documentation on derivations.) Estuary also supports dbt in ELT mode.

How to ensure Exactly-Once Delivery

You only want to see each change exactly once. But CDC does not mean you’re always getting exactly once. It’s not intentional; it happens when things go wrong.

For example, Debezium is at least once because it is built on Apache Kafka, and Debezium does not (yet) support Kafka exactly-once guaranteed delivery. In at-least once delivery, Kafka resends a message when it doesn’t receive an acknowledgement. It is then up to the client to deduplicate. The reason why many data warehouses have dedup options for Kafka streams is because exactly-once delivery is relatively new for Kafka.

This is the same with most replication pipelines. With Postgres, for example, when a replication slot fails and loses its LSN (log sequence number) in memory, as it recovers it goes back to the checkpointed LSN value and starts to resend from that point. It is the client that can then ask the source to reset the LSN to the last change it received.

Ensuring exactly-once delivery can only happen if the destination can coordinate with the source. In the case of CDC pipelines, if the destination is where the data is stored, the destination connector needs to check in the destination storage or with its own internal state tracking to dedup. If it doesn’t, then you need to dedup in the target itself.

In the case of a broker architecture, you can ensure exactly once delivery from the source to the broker store using transactional guarantees, which is how Estuary Flow does works. It transactionally guarantees data from a source is written exactly once to a collection. Loading data from a collection to a target works the same way, except for one detail. For type 1 slowly changing dimensions (SCD), Estuary updates the destination in place. For type 2 SCDs it appends.

CDC Done Correctly - The Architecture

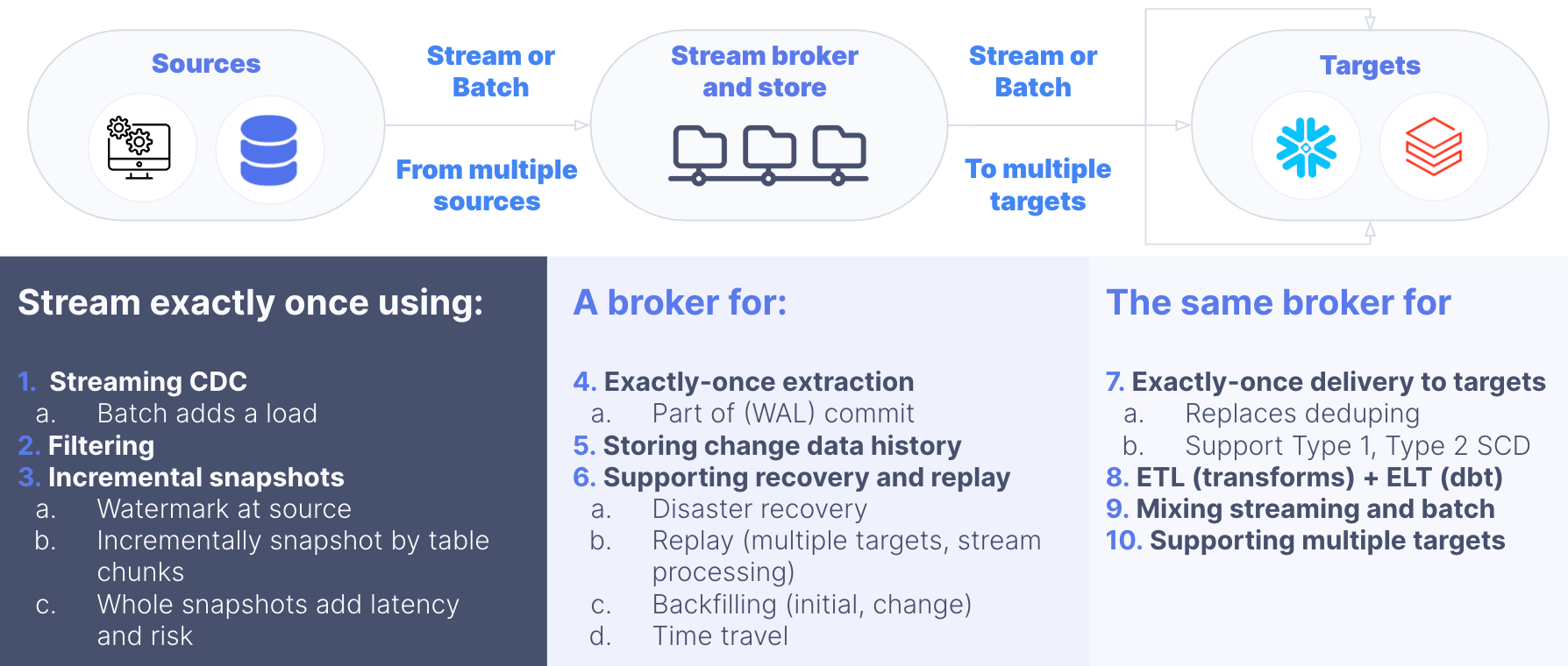

By time you have put all these best practices together, you will end up with an architecture that looks like the following:

First, you’ve addressed all your CDC challenges with a real-time streaming architecture into a stream store that closely resembles a database’s replication architecture with a durable append-only log file at the destination. Ideally you’ve also implemented filtering to minimize data movement and incremental snapshotting as well.

The broker architecture, like a WAL or transaction log, ensures exactly-once guaranteed delivery. It stores all change data so you extract exactly once, and only once from each source. Ideally you have built services on top including backfilling/replay for initial data loading of new targets or adding new tables to existing targets, or time travel similar to what Snowflake offers.

The broker architecture enables exactly-once delivery to targets. Services you should build on top are slowly changing dimension (SCD) type 1 (updates in place) and type 2 (append changes) loading, It should also enable mid-stream transformations (ETL) from store-to-store, or ELT by triggering dbt or SQL in targets.

A broker architecture also enables a many-to-many mixed streaming and batch architecture by streaming or batch-loading into a store, and streaming to targets via connectors that determine how to stream or batch load for each target.

Where to Learn More

If you want to try out CDC done correctly (CDC) as part of a complete many-to-many real-time and batch ETL and ELT data pipeline, you can sign up for a free Estuary account. I highly recommend you also sign up for the Estuary Slack community to ask questions as you get started. And don’t forget to use Estuary documentation, and try out the quickstart.

You can also watch the CDC Best Practices webinar, which goes through most of these points and shows with demos how this works in Estuary.

We’re looking forward to your questions and comments!