In the rapidly developing world of data management and analytics, Amazon Redshift has firmly established itself as a go-to option for data warehousing.

As you start to use Redshift to store and manage data, you need to be familiar with the most effective extract, transform, load (ETL) strategies to make the most of your analytics investment.

This article will discuss Amazon Redshift ETL in detail and examine the top three strategies you should know to manage data integration for Amazon Redshift.

What Is Amazon Redshift? An Overview

Amazon Redshift is a fully managed cloud data warehouse from Amazon Web Services (AWS). With its petabyte-scale storage, Redshift excels at handling and processing very large volumes of structured and semi-structured data. Because it’s based on PostgreSQL 8.0.2, anyone familiar with SQL can use standard SQL queries to work with data in Redshift.

What sets Redshift apart is its column-oriented storage combined with massively parallel processing (MPP), which spreads query work across many nodes. This makes it well suited to analytical workloads, which typically scan a few columns across many rows.

Amazon Redshift: Key Features

Here are some of the reasons why so many teams rely on Amazon Redshift:

- Serverless option. With Amazon Redshift Serverless, you can run analytic workloads of any size without provisioning or managing data warehouse infrastructure, because capacity scales automatically with demand. As a result, developers, data scientists, and analysts can collaborate on building data models and training machine learning models without configuring complex infrastructure.

- Petabyte-scale data warehouse. Redshift managed storage supports workloads of up to 8 petabytes of compressed data, and you can scale a cluster by adding nodes or changing node types as your data grows.

- Federated queries. Redshift’s federated query capability lets you query live data in one or more Amazon Relational Database Service (RDS) and Aurora databases, including RDS for PostgreSQL, RDS for MySQL, Aurora PostgreSQL, and Aurora MySQL, without migrating the data.

- End-to-end encryption. With a few clicks, you can configure Amazon Redshift to use hardware-accelerated AES-256 encryption for data at rest and SSL for data in transit. If you enable encryption at rest, all data stored on disk, including backups, is encrypted. Redshift also integrates with AWS Key Management Service (AWS KMS), so it can handle encryption key management for you.
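To make the federated query feature more concrete, here is a minimal sketch of how a remote database is registered. The hypothetical Python helper below only builds the `CREATE EXTERNAL SCHEMA ... FROM POSTGRES` statement that maps an Aurora PostgreSQL or RDS for PostgreSQL schema into Redshift. It assumes the database credentials are stored in AWS Secrets Manager and that the IAM role is allowed to read that secret; the helper name and every schema name, endpoint, and ARN shown are placeholders, not values from this article.

```python
import re

# Plain identifier for the new local schema name (no quoting or dots allowed).
SCHEMA_NAME = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def quote_literal(value: str) -> str:
    """Wrap a value in single quotes, doubling any embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def build_federated_schema(local_schema: str, database: str, remote_schema: str,
                           endpoint: str, iam_role: str, secret_arn: str) -> str:
    """Build SQL that exposes a remote PostgreSQL schema as a Redshift external schema."""
    if not SCHEMA_NAME.match(local_schema):
        raise ValueError(f"unsafe schema name: {local_schema!r}")
    return (
        f"CREATE EXTERNAL SCHEMA IF NOT EXISTS {local_schema}\n"
        "FROM POSTGRES\n"
        f"DATABASE {quote_literal(database)} SCHEMA {quote_literal(remote_schema)}\n"
        f"URI {quote_literal(endpoint)}\n"
        f"IAM_ROLE {quote_literal(iam_role)}\n"
        f"SECRET_ARN {quote_literal(secret_arn)};"
    )


if __name__ == "__main__":
    # All values below are hypothetical placeholders.
    print(build_federated_schema(
        local_schema="live_orders",
        database="orders_db",
        remote_schema="public",
        endpoint="my-aurora.cluster-abc123.us-east-1.rds.amazonaws.com",
        iam_role="arn:aws:iam::123456789012:role/MyRedshiftRole",
        secret_arn="arn:aws:secretsmanager:us-east-1:123456789012:secret:orders-db-creds",
    ))
```

Once a statement like this has run in Redshift, remote tables can be queried as, for example, `live_orders.orders` and joined with local Redshift tables in ordinary SQL. MySQL sources use `FROM MYSQL` instead, with a slightly different set of options.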

What is ETL?

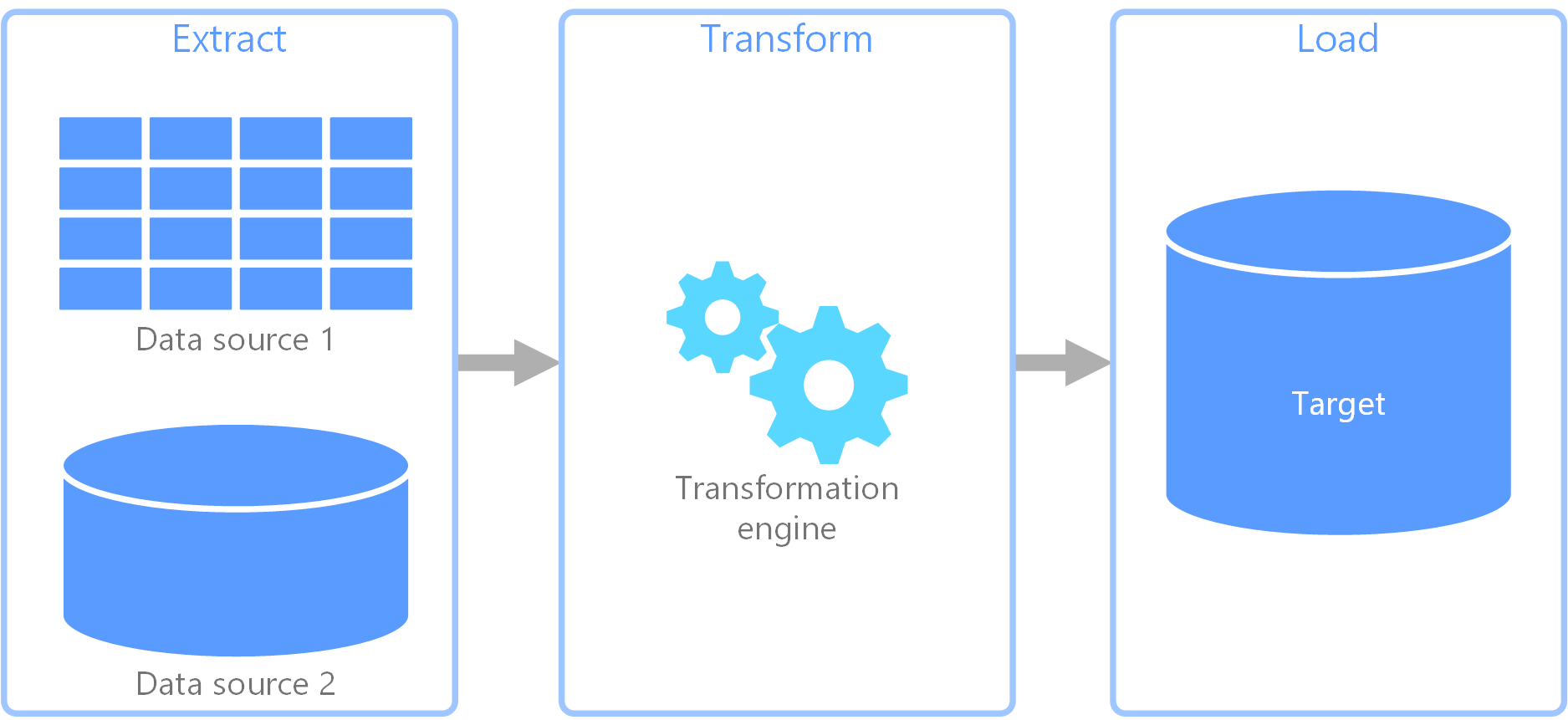

Extract, transform, load (ETL) is a three-step process that combines data from multiple sources into a single data warehouse. It uses a set of practices to manage, clean, and organize the data to prepare it for storage, machine learning, and data analytics.

The first step of the ETL process is Extraction: reading data from multiple sources, such as transactional systems, flat files, and spreadsheets, and temporarily storing it in a staging area.

The next step is Transformation. In this stage, the extracted data is converted into a format that can be loaded into the data warehouse. Transforming the data may involve cleaning, validating, or creating new data fields.

The last step is Loading the data into the destination. In this step, the physical data structures are created and the transformed data is loaded into a centralized repository, like a data warehouse or analytics platform.
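To make the three steps concrete, here is a minimal, self-contained Python sketch of a single ETL run. It uses a small hypothetical CSV export as the source and Python’s built-in `sqlite3` in-memory database as a stand-in for the warehouse, so it runs anywhere; a real Redshift load would typically stage files in Amazon S3 and load them with COPY instead. The column names and cleaning rules are illustrative assumptions.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (e.g. an order-entry app).
RAW_CSV = """order_id,customer_email,amount,currency
1001, Alice@Example.com ,19.99,usd
1002,bob@example.com,,usd
1003,carol@example.com,5.00,EUR
"""


def extract(text: str) -> list[dict]:
    """Extract: read source rows into an in-memory staging list."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: validate, clean, and derive fields."""
    out = []
    for row in rows:
        if not row["amount"].strip():
            continue  # validation: drop rows with no amount
        email = row["customer_email"].strip().lower()      # cleaning
        amount_cents = round(float(row["amount"]) * 100)   # derived field
        out.append((int(row["order_id"]), email, amount_cents, row["currency"].upper()))
    return out


def load(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    """Load: create the target table if needed and insert the transformed rows."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, email TEXT, amount_cents INTEGER, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for the data warehouse
load(conn, transform(extract(RAW_CSV)))
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1001, 'alice@example.com', 1999, 'USD'), (1003, 'carol@example.com', 500, 'EUR')]
```

Keeping each step in its own function mirrors the stages described above and makes it easy to swap the load step for a warehouse-specific one later.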

The whole ETL process is crucial for data management and analysis because it helps keep the data in the warehouse accurate and up to date.

Top 3 Approaches for Amazon Redshift ETL

There are several different approaches to ETL with Amazon Redshift, so it’s important to choose the one that fits your needs.

With that in mind, let’s take a look at three different ways you can use Amazon Redshift for ETL:

- Using ETL Tools for Amazon Redshift ETL

- Using Native Amazon Redshift ETL Functions

- Redshift ETL with Custom Scripts

1. Using ETL Tools for Amazon Redshift ETL

Amazon Redshift integrates with numerous third-party ETL tools. These tools bridge the gap between data sources and Redshift by offering features such as automation, monitoring, and scheduling.

In this section, we’ll examine some of the popular tools for Amazon Redshift ETL.

Estuary Flow

Estuary Flow offers no-code data pipelines that fully automate secure data transfer from a source to the destination of your choice. With its user-friendly interface, you can perform complex data integration tasks in a few clicks, including setting Redshift as the destination for your selected data source.

AWS Glue

AWS Glue is a serverless data integration service from AWS that helps you discover, prepare, and combine data from many sources without managing infrastructure. Using Glue, you can streamline various data integration tasks, such as automated data discovery with crawlers, schema detection and evolution, and job scheduling. Glue jobs can read from and write to other AWS services, including Amazon S3 and Amazon Redshift, which makes Glue a common choice for loading data into Redshift.

Talend

Talend is an ETL tool for data integration, originally known for its open source Talend Open Studio edition. It offers a rich set of connectors for many data sources and destinations, including data warehouses. With its visual interface, you can quickly design data pipeline workflows, which speeds up building ETL processes.

2. Using Native Amazon Redshift ETL Functions

Leveraging Amazon Redshift’s native capabilities is one of the most straightforward ways to perform ETL operations. Redshift includes built-in SQL commands, such as COPY, UNLOAD, VACUUM, and ANALYZE, along with workload management features that streamline the ETL process.

If you go this route, keep these best practices in mind while using native Redshift functions for ETL:

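- Load data in bulk. Redshift is built to handle huge amounts of data. Stage the data from your sources in Amazon S3, then use a COPY command to load it into Redshift directly from an S3 bucket. COPY reads multiple files in parallel, so splitting a large load into several files and loading them with one COPY is much faster than inserting rows one at a time.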

- Extract large data sets using UNLOAD. You can extract data from Redshift with either a SELECT query or the UNLOAD command. SELECT works well for small to medium-sized result sets, but every row flows back through the leader node to the client, which becomes a bottleneck for large extracts. UNLOAD, on the other hand, writes query results directly to Amazon S3 in parallel from the compute nodes, and it supports compression and file formats such as Parquet.

- Regular table maintenance. ETL jobs constantly insert, update, and delete rows. Redshift doesn’t immediately reclaim the space left by deleted or updated rows, and newly loaded rows may be left unsorted, so tables accumulate wasted space and queries slow down over time. Run VACUUM to reclaim space and re-sort rows, and ANALYZE to refresh the statistics the query planner relies on. Redshift also runs automatic vacuum and analyze operations in the background, but running them after large loads, and dropping staging tables you no longer need, helps keep your cluster optimized.

- Workload management. Use Workload Management (WLM) in Redshift to route different workloads, such as ETL loads and dashboard queries, to separate queues with their own priorities and resources. This keeps short-running queries from getting stuck behind long-running ones and helps manage query concurrency and resource allocation in the data warehouse.
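The practices above come down to a handful of SQL commands. As a minimal sketch, assuming Python 3.9+, the hypothetical helpers below assemble COPY, UNLOAD, VACUUM, and ANALYZE statements and tag them with a WLM query group. The bucket, IAM role ARN, table name, and `etl` query group are placeholders (the query group only routes work if a WLM queue is configured for it), and the helper names are illustrative rather than part of any AWS library; the sketch builds SQL text only.

```python
import re

# Hypothetical placeholder: replace with an IAM role your cluster can assume.
IAM_ROLE = "arn:aws:iam::123456789012:role/MyRedshiftRole"

# Accept only simple [schema.]table names, so table names cannot inject SQL.
TABLE_NAME = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*)?$")


def quote_literal(value: str) -> str:
    """Wrap a value in single quotes, doubling any embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def check_table(name: str) -> str:
    """Return the table name unchanged, or raise if it is not a plain identifier."""
    if not TABLE_NAME.match(name):
        raise ValueError(f"unsafe table name: {name!r}")
    return name


def build_copy(table: str, s3_prefix: str, iam_role: str = IAM_ROLE) -> str:
    """Bulk-load every gzipped CSV file under an S3 prefix with a single COPY."""
    return (
        f"COPY {check_table(table)}\n"
        f"FROM {quote_literal(s3_prefix)}\n"
        f"IAM_ROLE {quote_literal(iam_role)}\n"
        "FORMAT AS CSV\n"
        "IGNOREHEADER 1\n"
        "GZIP;"
    )


def build_unload(query: str, s3_prefix: str, iam_role: str = IAM_ROLE) -> str:
    """Export a query's result set to S3 as Parquet instead of SELECTing it to a client."""
    return (
        f"UNLOAD ({quote_literal(query)})\n"
        f"TO {quote_literal(s3_prefix)}\n"
        f"IAM_ROLE {quote_literal(iam_role)}\n"
        "FORMAT AS PARQUET;"
    )


def build_maintenance(table: str) -> list[str]:
    """Reclaim space and re-sort rows with VACUUM, then refresh statistics with ANALYZE."""
    name = check_table(table)
    return [f"VACUUM FULL {name};", f"ANALYZE {name};"]


def in_query_group(group: str, statements: list[str]) -> list[str]:
    """Label statements with a WLM query group, then reset the label."""
    return [f"SET query_group TO {quote_literal(group)};", *statements, "RESET query_group;"]


if __name__ == "__main__":
    nightly = in_query_group(
        "etl",  # hypothetical query group assigned to an ETL queue in WLM
        [
            build_copy("public.sales", "s3://my-etl-bucket/sales/2024-01-01/"),
            *build_maintenance("public.sales"),
        ],
    )
    print("\n".join(nightly))
    print(build_unload("SELECT * FROM public.sales WHERE region = 'EU'",
                       "s3://my-etl-bucket/exports/sales_eu_"))
```

You would run the generated statements with a Redshift SQL client or a driver such as `redshift_connector`. Because VACUUM can’t run inside a transaction block, execute it with autocommit enabled.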

3. Redshift ETL with Custom Scripts

Custom scripts are pieces of code written to address an organization’s particular data integration and transformation needs. Data engineers or developers usually write these scripts to automate moving data from many source systems into a target such as Redshift, tailoring the ETL process along the way.

Here are some of the advantages of using ETL scripts:

- By writing customized ETL scripts, you can define the exact execution process of data extraction, transformation, and loading according to your requirements.

- Custom scripts come in handy when your data integration requirements keep evolving. Because you own the code, you can adapt it to changes in data sources, business logic, and schemas.

- You can write custom scripts that partition data, process it in parallel, and tune query execution to optimize the performance of data processing in Redshift.

- Third-party ETL tools come with predefined data governance features and policies that follow each vendor’s guidelines. Custom scripts give you more flexibility: you can use them to enforce data access control and governance policies in Redshift by implementing role-based access control, applying data masking and encryption techniques, and defining your own data retention policies.

- Many ETL tools use subscription models that charge according to usage. With custom scripts, you control resource usage yourself: by running only the compute capacity and functionality you need, you can make your ETL operations more cost-effective.
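A common job for a custom script is an incremental load, where new and changed rows replace their older versions in a Redshift table. As a minimal sketch, assuming a target table with a unique key column, the hypothetical helper below produces the staging-table upsert pattern: load the batch into a temporary table, delete the target rows it replaces, and insert the batch, all inside one transaction. The helper name and the table, key, bucket, and role values are placeholders; recent Redshift versions also offer a MERGE command for the same purpose.

```python
import re

# Accept only simple [schema.]table and column names, so identifiers cannot inject SQL.
TABLE_NAME = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*)?$")
COLUMN_NAME = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def quote_literal(value: str) -> str:
    """Wrap a value in single quotes, doubling any embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def build_upsert(target: str, key: str, s3_prefix: str, iam_role: str) -> list[str]:
    """Return SQL statements that upsert a CSV batch from S3 into `target` on `key`."""
    if not TABLE_NAME.match(target) or not COLUMN_NAME.match(key):
        raise ValueError("unsafe table or column name")
    table = target.split(".")[-1]  # unqualified name, used to reference target columns
    stage = f"{table}_stage"       # temp table; dropped automatically when the session ends
    return [
        "BEGIN;",
        # 1. Stage the new batch in a temp table with the same columns as the target.
        f"CREATE TEMP TABLE {stage} (LIKE {target});",
        f"COPY {stage} FROM {quote_literal(s3_prefix)} "
        f"IAM_ROLE {quote_literal(iam_role)} FORMAT AS CSV;",
        # 2. Remove target rows that the batch replaces.
        f"DELETE FROM {target} USING {stage} WHERE {table}.{key} = {stage}.{key};",
        # 3. Insert the full batch; other sessions see the change only after COMMIT.
        f"INSERT INTO {target} SELECT * FROM {stage};",
        "COMMIT;",
    ]


if __name__ == "__main__":
    for statement in build_upsert(
        "public.sales", "sale_id",
        "s3://my-etl-bucket/sales/changes/",              # hypothetical bucket
        "arn:aws:iam::123456789012:role/MyRedshiftRole",  # hypothetical role
    ):
        print(statement)
```

Wrapping the delete and insert in one transaction is the design choice that matters here: queries never observe the table with old rows removed but new rows not yet added.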

The Takeaway

As you can see, each of these approaches has unique qualities that can help organizations unlock the full potential of Redshift.

Using native Amazon Redshift ETL functions lets you use Redshift’s built-in capabilities for data integration in a straightforward, cost-effective way. Redshift ETL with custom scripts gives you the flexibility to build ETL processes around your specific requirements, though it involves more work. Third-party tools for Amazon Redshift ETL, like Estuary Flow, streamline the data integration process through a user-friendly interface, with no coding required.

Ultimately, the best approach for Amazon Redshift ETL depends on your preferences, resource availability, and specific needs. Here’s to making the best decision for your unique situation!

We might be a bit biased, but we suggest using Estuary Flow to perform Amazon Redshift ETL workflows. Its user-friendly interface streamlines the whole ETL process. But don’t just take our word for it. Create a free account and start using Estuary Flow today.