Change Data Capture (CDC) refers to the process of identifying and capturing data changes from a source data system and propagating the changes to a destination data system.

CDC is often used as a data integration method to ensure data consistency across multiple systems within an organization.

A major benefit of CDC is that it enables incremental load of data; rather than replicating the entire data source on every run which could be extremely resource-intensive.

With CDC, only the incremental changes are replicated, saving both time and resources. A comprehensive introduction to change data capture can be found here.

Many CDC tools have surfaced in the market in the last few years. When evaluating which product best meets your organization’s needs, it is important to understand their scalability. Some common factors to consider include:

- Method: Is the CDC method log-based, trigger-based, or query-based?

- Latency: Does the platform support real-time streaming or scheduled batch processing?

- Connectors: How many different data sources and targets does the platform support?

- Complexity: How complex is it to implement and support?

- Automation: Does the platform handle schema migrations automatically?

- Pricing: Will the cost become astronomical as you scale up?

Salesforce CDC Integration

In addition to the general considerations for CDC platforms, organizations using Salesforce as their CRM system often require specific integration solutions to extract data efficiently. Salesforce Change Data Capture (CDC) integration allows businesses to capture changes in their Salesforce data and synchronize it with other systems.

If you are looking for information on Salesforce CDC integration and how to efficiently extract data from Salesforce, you can explore our dedicated blog post on the topic: Salesforce CDC Integration.

We will dive into each of these considerations and provide a snapshot of the current market landscape.

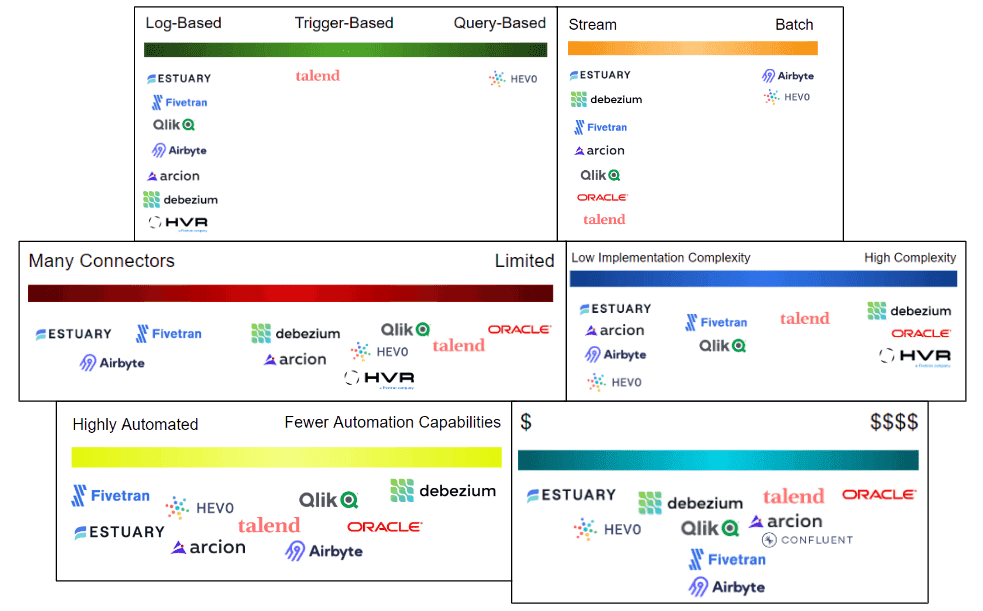

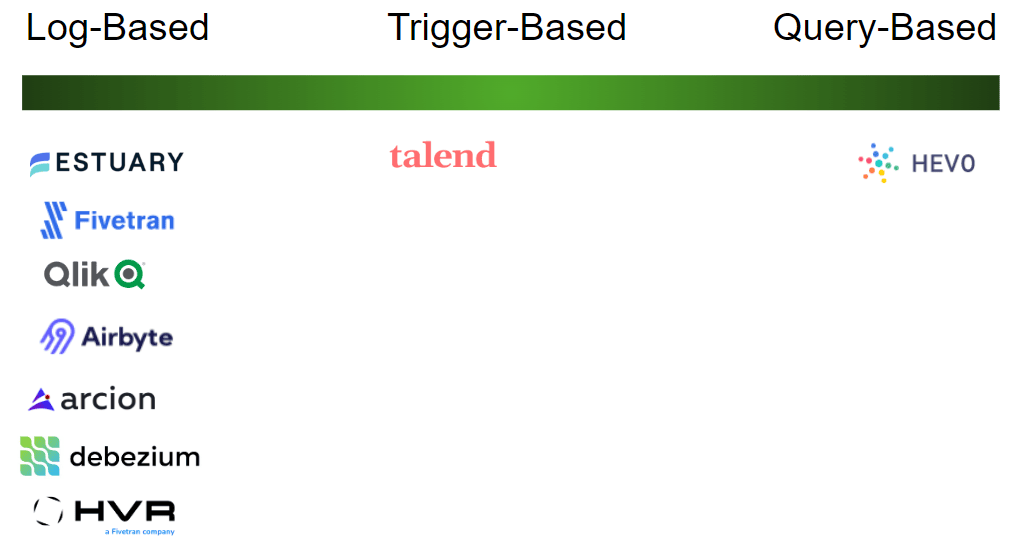

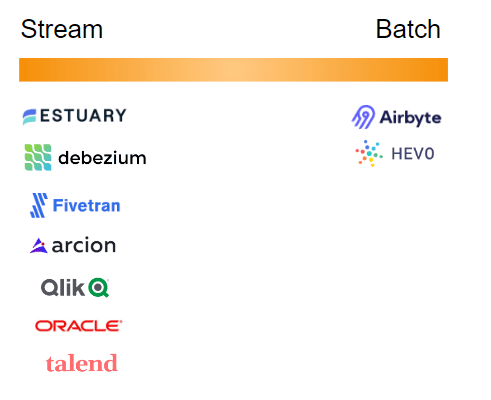

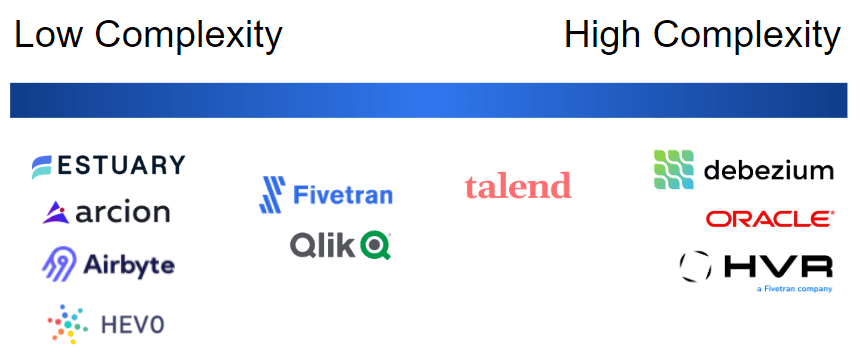

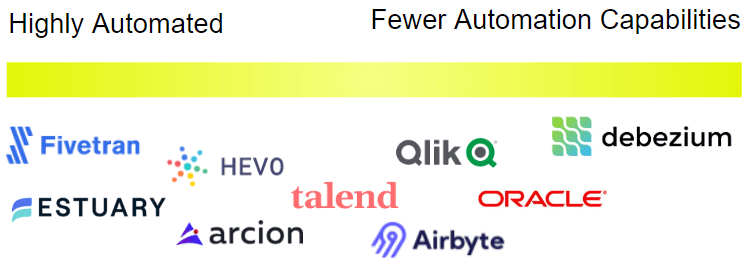

Note that the diagrams below are inevitably oversimplified, as many companies’ offerings are not restricted to one part of the scale, but we are putting logos in areas where they are primarily associated.

CDC Methods

As discussed in our comprehensive introduction to change data capture, there are three primary methods for capturing change data.

- The log-based method is where database logs are reviewed and reverse-engineered to track change events. Examples are the write-ahead log (WAL) in PostgreSQL and the binary log (binlog) in MySQL. This is the most efficient method as it adds no additional load to your source database, and it’s also reliable and fast.

- The trigger-based method uses database triggers to fire an action whenever there’s a change to the source data. The trigger listens for insert, update, and delete events to your database tables and builds a changelog. Triggers are implemented at the SQL level, and the output is typically immediate. The downside is that trigger operations add additional burden to the source database, especially at scale, reducing database performance. Additionally, if your target database is on a separate system from your source, which is often the case, then the solution can be complex, involving polling the changelog, which introduces latency.

- The query-based method requires that the source data has a timestamp column. This is probably the oldest method back in the days when tracking timestamps was the only option. It involves simple SQL queries to identify changes to a table by selecting records where the timestamp value is later than the last-run time. There are a number of shortcomings to this approach, including:

- deletes cannot be captured;

- latency is expected as there will always be a lag between updates in the source vs. target, depending on your query frequency, so you’ll need to offset the timestamp by an arbitrary period to handle late-arriving data; and

- a negative impact on your source database performance because every time you run the query, you are polling the entire table.

- deletes cannot be captured;

Estuary along with some of the other companies shown here use logs such as the WAL to track changes to the sources.

Talend supports log-based CDC for Oracle only but uses the trigger-based approach for other databases.

Hevo’s CDC is query-based which requires the source data to have a timestamp column tracking the latest update date, placing an extra load on the source database.

Latency & Scale: Streaming vs Batch Processing

Is low latency a requirement for your use case? This may be one of the first questions you need to ask when designing your data pipeline.

The latency requirement determines whether stream or batch processing is the best approach for your use case. This video explains the difference between streaming and batch processing.

In summary, streaming is taking action on a series of data at the time the data is created. It allows you to replicate data in near real time with low latency. On the other hand, batch processing requires scheduling the replication to take place over a predetermined interval of time.

Streaming is ideal for use cases that require:

- Low latency

- High volumes of data

- Frequent updates

- Strict operating budget

Estuary provides low-latency streaming in near real time; the latency is as low as milliseconds. Airbyte and Hevo offer batch processing where batch jobs can be scheduled in small regular intervals but not in real time.

Depending on the requirements of your use cases, it’s good to consider how much latency you can tolerate. This article goes over a number of use cases where real-time streaming is most ideal due to low latency.

Connector Support

Suppose you need to replicate data from SQL Server to Snowflake. One of the first things you will likely check is whether the CDC platform supports SQL Server and Snowflake.

Some platforms are inherently more focused on a few database vendors, such as Oracle GoldenGate which is understandably more focused on Oracle databases.

Another example is Arcion Cloud, which supports only a few source connectors like MySQL, Snowflake, and Oracle, and only targets connectors for Databricks, Snowflake, and Singlestore.

Debezium and Airbyte support many connectors but some have scalability issues.

For example, for Postgres, you could run into out-of-memory exceptions when using plugins to convert WAL to JSON. Airbyte’s doc states that their Postgres source connector performs best on small databases under 100GB. Also, at this time, Airbyte only has three destination connectors that are GA: BigQuery, S3, and Snowflake.

In the case of Hevo, CDC is supported in limited databases such as Postgres, MySQL, and Oracle.

While Qlik supports many data sources, the CDC feature has limitations on the data sources. For example, it does not support batch operations with primary keys for Oracle source and does not support column-level encryption for SQL Server. Masked data in a source database on SQL Server will appear in plain text in the target database.

In the case of Fivetran’s HVR platform, DDL replication is limited to some sources and destinations but not all.

These less obvious limitations have an impact on scalability even though the platforms might support a large number of connectors.

Estuary provides a wide selection of pre-built connectors that allow you to quickly connect to your sources and destinations just by filling out a few fields to set up endpoint configurations, without extensive development and implementation configurations.

Implementation Complexity

A major consideration when it comes to scalability is implementation complexity.

Down the road when you expand your data capabilities and integrate with more databases and application systems, will you need an extensive implementation effort each time you need to replicate data from a new source or to a new target?

Estuary allows users to build a complete data pipeline end to end within a few clicks:

- connect to the source (what we call capture)

- connect to the destination (what we call materialization)

The UI is simple with few fields to fill out and few places to click. Some of the other products shown here also have simple UIs, ranging from forms for you to fill out your configuration fields, to a studio where you can build a map or a flow.

On the other hand, some of the enterprise products out there require complex installation and configuration setups.

For example, Oracle GoldenGate is more suited for large enterprises with database administration teams because the installation and configuration process requires advanced and specialized skills.

On this note, Debezium is open-source, but it is not a stand-alone product with out-of-the-box functionalities; it requires complex setups with message brokers such as Kafka. Each time a new data source or destination is added, implementation effort is required to get all the moving pieces integrated.

HVR, acquired by Fivetran, has a separate CDC product from Fivetran, and unlike Fivetran, HVR is extremely complex to implement.

In the middle of the spectrum we have Talend, of which the implementation complexity is not as high as Oracle GoldenGate or Debezium, but the setup is not completely no-code. Users have to write code and queries in many cases.

In summary, getting your hands on the UI to try building a pipeline end-to-end will give a good sense of the implementation complexity required and what it would take to maintain and scale your data pipelines going forward.

Automation

Automation capabilities are important to consider in the context of scalable data pipelines. For instance, does the CDC platform handle schema migrations automatically?

Modern cloud applications are constantly changing. As a result, data table structures and schemas evolve.

Schema changes can disrupt data pipelines, which is why automated schema migration is an important capability that unfortunately many CDC platforms do not currently offer.

Schema migration involves the ability to automatically and incrementally propagate schema changes from your source database to your destination data warehouse.

Some scenarios where schema migration may be needed include:

- a new table gets added to the source

- a new column is added to a table in the source

- an existing column is removed from the source

- data types have changed

Fivetran offers the ability to do automated schema migration, and Estuary is offering this capability in an upcoming release.

Estuary also offers the ability to do schema inference. Flow generates suitable JSON schemas on your behalf during the discovery phase of capturing the source data.

Flow also leverages static inference over your schemas to perform various build-time validations of your entities, helping you catch potential problems early.

Read more about how we handle schemas in our docs or watch our YouTube video on schema inference.

Hevo has a Schema Mapper functionality that helps automate schema detection and mapping.

Pricing / Costs

Finally, product cost directly impacts scalability. Estuary was designed with developers in mind. The first 10 GB of data is free. After that, it’s $0.75/GB, accommodating sizable databases at a reasonable cost.

Also, after the initial load, the cost is applied to the incremental changes only, so for the vast majority of use cases, it’s reasonable to expect the monthly replication data to be under 100 GB, which would total to $75 or less. This same amount of data replication would cost thousands of dollars each month on some of the other platforms shown here.

Many CDC platforms are more targeted towards large enterprises, and the cost would be prohibitive to many developers or small to medium businesses.

For example, Talend is free for development only, and the server installation is licensed and costly.

Additionally, many vendors don’t provide transparent pricing and users have to engage the sales team to go over customized pricing options rather than having a self-serve option. Qlik, Talend, Fivetran, and Oracle all fall into this category.

Estuary’s pricing is transparent and affordable.

Disclaimer: Because pricing is not always publicly disclosed, the above diagram requires some speculation based on info publicly available on websites. Also, pricing models are often not linear since many factors including usage go into the calculation, so we are not always comparing apples to apples unless we evaluate one specific use case only.

It’s also worthwhile to mention that cost is not dependent solely on product pricing.

For example, Debezium is open-source but due to implementation complexity, engineering teams, and resources will need to be allocated to support implementation and ongoing maintenance. The hidden costs in technical resources outside of product pricing should not be neglected when considering future scalability.

Conclusion

When evaluating which CDC platform best meets your organization’s data pipeline needs, it is important to understand their scalability.

Some common factors to consider include:

- CDC method

- Latency

- Available connectors support

- Implementation complexity

- Automation capabilities

- Costs

It’s best to get your hands on a CDC product and try building a pipeline end-to-end to get an understanding of the implementation complexity, supported features, and scalability.

To take a deeper dive into this topic, check out this article on Debezium alternatives.

And of course, you can try Estuary Flow for free here.