Whether you aim to perform a one-off data migration from MySQL to PostgreSQL or continuously replicate data between the two, there’s lots to consider and many ways to approach the task.

In this post, we’ll provide a complete MySQL to PostgreSQL integration tutorial using Estuary Flow. This method allows you to migrate historical data and continuously capture new data from MySQL to PostgreSQL using change data capture (CDC).

We’ll also list a few more popular methods for transferring data from MySQL to PostgreSQL.

But first, a few prerequisites. To be successful in your migration, it’s important to understand both databases, the ways in which they differ, and what you hope to achieve with migration. Let’s start with a brief (re)introduction to MySQL.

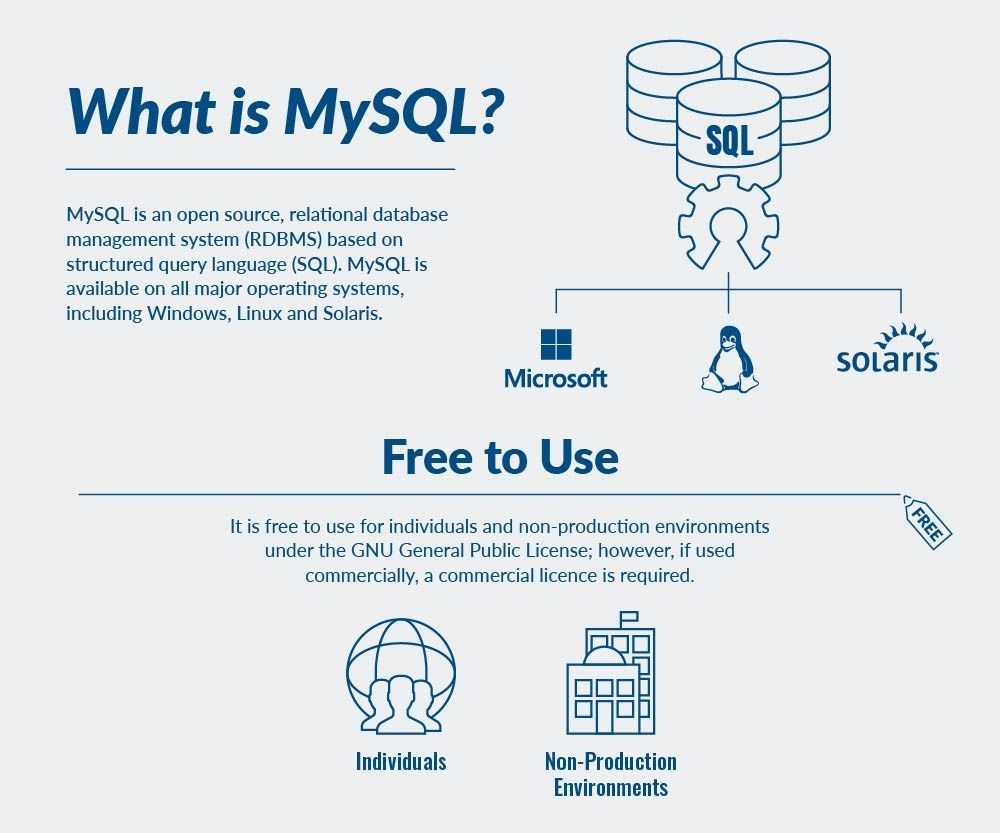

What is MySQL?

MySQL is a relational database management system (RDBMS) based on SQL. Maintained by Oracle, the platform is a cornerstone of many tech stacks. It allows teams to build and maintain powerful data-driven B2B services and customer-facing web applications.

MySQL is one of the most common and popular RDBMS available today. It is open-source and has a rich feature set with excellent reliability.

Many internet-critical organizations such as Facebook, Twitter, Flickr, Wikipedia, and YouTube use MySQL as their main RDBMS.

Advantages of Using MySQL

There are many SQL-based RDBMS available today. All of them fulfill the basic function of storing data in a tabular format that’s accessible by query. So why use MySQL?

As mentioned above, MySQL is open-source with enterprise-level support. Your business can deploy it instantly with no costs attached. The database is also cross-platform and supports popular operating systems, including Windows, Linux, NetWare, Novell, Solaris, and other variations of UNIX.

Here’s a rundown of the major advantages you can expect with MySQL.

- Extremely fast for simple read-only operations.

- Extremely secure with a unique data security layer.

- Flexible to work with since it supports a vast number of embedded applications.

- The program is simple to use and requires only a basic knowledge of MySQL and a few simple SQL statements.

- Can handle more than 50 million rows and is scalable to handle any amount of data.

- Allows transaction rollbacks.

Disadvantages of MySQL

While it is an excellent database, MySQL is not without its flaws. Here are some prominent missing features.

- MySQL loses its efficient characteristics at scale.

- Other paid databases have better debugging and development tools.

- Less efficient for use cases that include heavy read and write operations, or complex queries.

What is PostgreSQL?

Like MySQL, PostgreSQL — also known as Postgres — is a popular, open-source database, but it has some additional advantages and complexity.

Postgres is an enterprise-class object-relational database management system (ORDBMS). It supports SQL (relational) and JSON (non-relational) querying.

The platform is just as stable as MySQL, but is supported by an active community of open-source contributors rather than a corporation. All of these factors have enabled PostgreSQL to become a platform with high levels of resilience, correctness, and integrity.

The platform is often used as the primary transactional database in a company’s tech stack. Many popular websites, mobile apps, geospatial, and analytics applications rely on PostgreSQL for their data querying.

Advantages of Using PostgreSQL

PostgreSQL is generally regarded as more extensible and adaptable than MySQL. As an ORDBMS, it supports tabular data with standard SQL, while also recognizing objects and classes, similar to an object-oriented programming language.

PostgreSQL, like MySQL, is also an open-source system — it’s freely available to businesses. Users can set up, modify, and implement the database as they see fit.

Here’s a quick summary of other advantages you can expect with PostgreSQL.

- Performance degrades less at higher scales.

- Fast handling of complex queries and read-write operations.

- ACID compliance and fault tolerance with write-ahead logging.

- Large number of data types, including user-defined types, which can be combined in the same table.

- The database has support for geographic objects and can be used to enable location-based services and geographic information systems.

Disadvantages of PostgreSQL

Let’s take a quick look at some of the drawbacks of using PostgreSQL as your database.

- Learning curve can be higher than MySQL.

- Slower at smaller scales and for simple operations.

- More work may be required to enhance performance and speed compared to MySQL.

Why is Database Migration Important?

At some point, database migration will become necessary for most businesses. The reason for this is usually a functional one: you’ve determined that a different database would better suit your changing needs.

Companies moving from traditional on-premise setups to the cloud can also find it necessary to ramp up their migration strategies.

In addition to the benefits mentioned above, here are some popular reasons why you may want to consider data migration.

Save Money

All databases are ideally suited for certain workflows, but may not perform as well at others. You may find that the database you’re currently using is not designed to perform your most common operations efficiently, resulting in an unnecessarily large hosting bill.

Additionally, if the database isn’t user-friendly, you’ll be wasting your team’s valuable time. Hiring more IT professionals and engineers can help but may not be the most efficient solution when migration can smooth entire workflows.

Eliminate Outdated Tools

Outdated and redundant tools can set your company back in how efficiently it processes, stores, and queries data. Newer solutions can help speed overall processes and enable a smoother workflow for all teams utilizing datasets.

Unify Disparate Data

Most companies can simplify their data management processes by unifying disparate data. The simplest method to achieve this is through using one database instead of two or more; especially if they all perform similarly. You can also access data easier and faster with modern platforms.

Data Migration vs Data Replication

So far, we’ve been talking about database migration, but database replication is another equally important — and distinct — goal. It can be easy to confuse these two terms, but they differ in their intent.

With database replication, the process of copying data from one database to another is ongoing. Once the replication process has begun, it continues to replicate new data from the source to the target and does not specify an end to the replication process.

Replication is useful if you want to power a variety of different workflows from a single dataset. In the case of MySQL and Postgres, you might want to run quick, simple queries on your MySQL instance to power one application. At the same time, you might want a replica of the data in Postgres where you can perform more complex read-write operations.

By contrast, migration is inherently a temporary process that replicates a source database to a target database. The end goal of most (if not all) database migrations is to eventually turn off the source database.

In the case of MySQL and Postgres, you may have decided that Postgres’s flexible ORDBMS nature is better suited to your overall mission. You can migrate all your data from MySQL to Postgres, and then shut down your MySQL instance.

Put another way, database migration is the controlled finale of database replication. You can use a data replication tool to perform a full migration, but the reverse isn’t necessarily true.

Why Migrate From MySQL To PostgreSQL?

While the two platforms share similar development timelines, levels of support, and community backing, they have different strengths and weaknesses. And depending on your use case, migrating or replicating your MySQL database to PostgreSQL might be the right choice.

MySQL, while reliable and competent for basic use cases, can become challenging to manage as your data grows and your needs become more complex. This can cause a lot of downtime and lags across your data stack. Data analysts and software developers may have problems troubleshooting and accurately accessing data.

And if your company uses a lot of ad-hoc queries and/or complex longer-running queries and read-write operations, PostgreSQL is the best way to go since it uses indexes in moderation which reduces the load time.

As we’ve discussed, the fact that you need Postgres for some use cases doesn’t make it perfect for everything. If you still want to perform simple queries with a focus on speed, you might want to keep MySQL but and continuously replicate it to Postgres.

You can use the method below for a complete migration, or ongoing replication.

How to Build a MySQL to PostgreSQL Data Pipeline: Easy Steps

Method 1: Migrate Data from MySQL to PostgreSQL Using Estuary

This method uses Estuary Flow, a powerful data integration platform that simplifies the process of migrating databases from MySQL to PostgreSQL. Follow these steps to get started.

Step 1: Sign Up for Free on Estuary

- You can build your first pipeline with a free trial account — go to the web app to register. For large production workflows, contact our team to set up an organizational account.

Step 2: Get Set Up

- Go to the Estuary web app at dashboard.estuary.dev and sign in.

- Make sure your MySQL database meets the Estuary prerequisites.

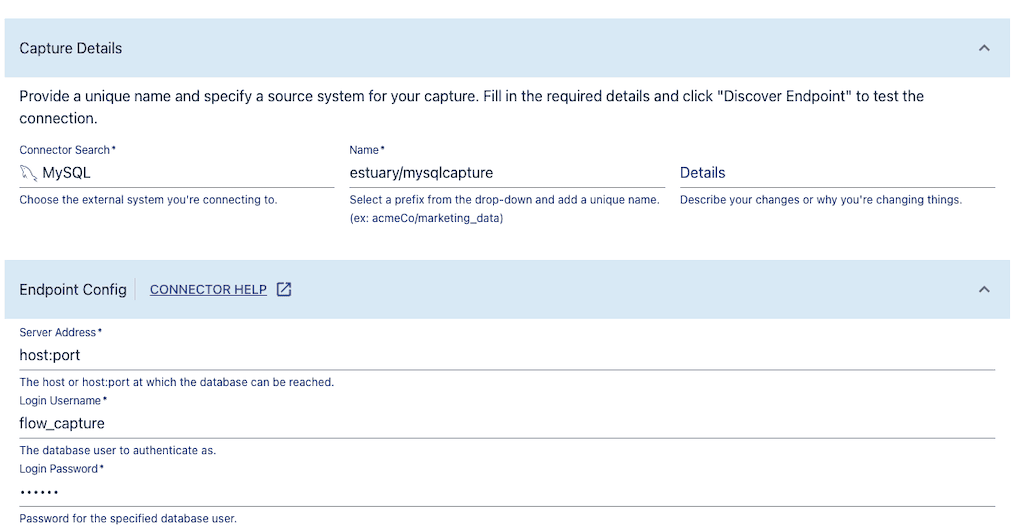

Step 3: Capture Data From MySQL

- Click the Captures tab and choose New Capture.

- Click the MySQL tile. A form appears with the required fields for the MySQL capture.

- Choose a unique name for your capture.

- Provide the MySQL server address, database username (if you followed the prerequisites exactly as written, this should be “flow_capture”), and a password.

- Click Next. Flow lists all the tables in your database. These will be converted into Flow data collections. You can remove any you don’t want to capture.

- Click Save and Publish.

- Click Materialize Collections.

Step 4: Materialize to Postgres

- Choose the PostgreSQL tile.

- Choose a unique name for the materialization.

- Provide the database address, and the username and password for a Postgres user with write access to the database.

- Scroll down to view the Collection Selector. Each data collection you just captured from MySQL will be mapped to a new table in Postgres. Provide a Table Name for each.

- Click Next, and then Save and Publish.

All existing data from your MySQL database will be copied to your Postgres database. Any new data that appears in MySQL will also be copied to Postgres in real-time.

For more help with this method, see the Estuary Flow documentation on:

Benefits of Using Estuary Flow for Data Migration

Estuary Flow is a real-time data integration platform that you can use to integrate data across a variety of systems, including MySQL and Postgres. It’s not the only system that can perform a migration, though, so why use Flow specifically?

In general, Flow stands out in how it facilitates ongoing replication over a one-and-done migration. Even if your ultimate goal is to migrate completely from MySQL to Postgres, it’ll make the process much easier if you set aside more time to switch all your dependencies and processes over to Postgres.

Flow allows you to operate both databases in sync for an extended time, affordably.

Let’s break this down into more specific benefits.

#1 Easy user interface

Flow’s web application is a breeze to use regardless of technical skill level, especially compared to command-line or scripted tools. This makes the pipeline quick to set up and easy to manage.

#2 Real-time data transfer

When you create a MySQL to Postgres data pipeline with Flow, the process first performs a historical backfill: copying all data in your MySQL database to Postgres.

At this point, the pipeline transitions to instantly copying all new changes that appear in the MySQL database. These changes appear in Postgres within milliseconds, so that the two sources of data remain in sync.

#3 Affordable for ongoing replication

Flow’s MySQL integration uses change data capture (CDC).

CDC is a methodology for capturing changes to databases in real-time, but it also has important performance implications. CDC integrations don’t need to scan the entire database to check for changes; instead, they react to events in database logs (in the case of MySQL, the binary log) and copy these changes into the pipeline.

This makes the process much less compute-intensive than other methods. These performance savings translate into cost savings.

Check out our guide to MySQL change data capture here.

#4 Resilience against failure

When you create a pipeline like this one, Flow stores the data captured from MySQL in cloud-backed data collections before writing them to Postgres. These serve as backup datasets.

Flow and its integrations have exactly-once semantic and distributed storage.

Other Migration Methods

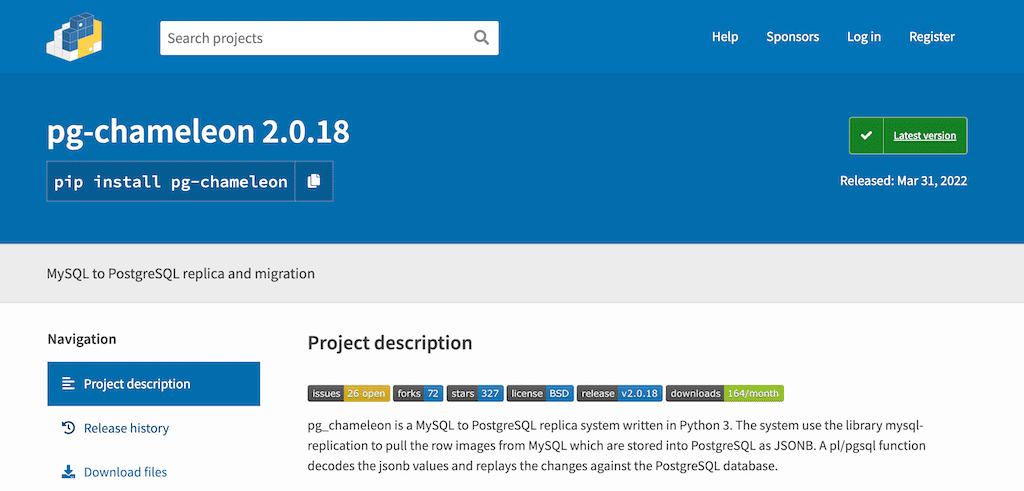

Method 2: pg-chameleon

pg-chameleon is a Python system that leverages MySQL’s native replication and converts the results to Postgres. It can be used for one-time migration or ongoing replication. For the latter, note that the process adds a time delay.

Method 3: pgloader

pgloader is a command-line tool for one-off migrations into Postgres. It supports MySQL as well as other source systems.

Data pipeline tools

There’s a variety of tools on the market today that provide user-friendly interfaces for creating data pipelines. Estuary Flow falls into this category, but there are others (we’ve written some comparisons on the blog before; see here and here).

Many of these tools offer integrations between MySQL and Postgres. But there are a few important things to keep in mind before choosing a data pipeline tool:

- How does the integration work? Especially if you want to continue to perform ongoing replication, it’s important to know the data pipeline mechanism. Many tools use a batch processing framework that can add a time delay and may need to repeatedly scan your entire MySQL database. This brings us to our second question…

- How much will it cost? Relational databases are often the largest repositories of data in a business, so migrating them can be costly. Repeatedly updating a replica using batch processing can get even more expensive. Be sure to get a specific quote before using a data pipeline service.

Conclusion

Database migration and replication is an important facet of every business’ data strategy and architecture. As requirements and use cases evolve, it’s more important than ever to have the right type of database.

MySQL and PostgreSQL each have their place and optimal use cases. Whether your goal was to permanently move from one to the other or maintain both side-by-side, we hope this guide has simplified the process for you.

For more data integration tutorials, check out the Estuary blog and documentation.