You’re trying to figure out how to move data from MongoDB into Snowflake. Great! Let's get started.

Before anything else, you first need to understand the given use case, and why this body of work is important to fulfilling business objectives. This is important so the solution you come up with both fulfills the requirements and adheres to your organization’s larger data strategy.

For this example, let’s assume the following:

- You’ve been asked to move source data from MongoDB into your company’s data warehousing tool, Snowflake. You’re using a cloud provider like AWS, or Azure.

- You need this pipeline to capture both historical and incremental changes happening within the source data. You need those changes to become available in Snowflake in near real-time.

- This data is going to be used for downstream analytics purposes in a 3rd-party business intelligence tool, queried with SQL.

- You have a fairly low appetite for open-source tools and frameworks.

Given these parameters, there are a variety of options to choose from. Below, I’ll walk through a few possible ones.

While considering different approaches to move data from MongoDB to Snowflake, you should be focused on:

- How well it fulfills your current and future requirements.

- How well it performs, scales, and stays up and running.

- What resources, including staff, are needed and available?

- How long it will take to implement.

- How easy it is to maintain and change.

- How much will it cost the business overall, including labor costs.

Introduction to MongoDB

MongoDB is an open source NoSQL document database that can be deployed on premises or in any cloud service provider. MongoDB the company is the leading contributor to the open source project also provides a cloud service, Atlas. MongoDB supports JSON-based documents. It is designed to provide an efficient way of handling data sets and to quickly adapt and change the structure and functionality of your applications, including horizontal scaling and automated failover functionality.

Introduction to Snowflake

Snowflake is a cloud data warehouse built for speed and scalability. It lets you run complex analytical SQL queries across massive datasets. Unlike traditional data warehouses, Snowflake separates storage and compute, allowing you to scale dynamically based on current computing needs, which helps lower costs and optimize performance.

Methods to Connect MongoDB to Snowflake data warehouse

- Method #1: Connect MongoDB to Snowflake With Native Cloud Provider Tools & Snowpipe

- Method #2: With a custom Python Script and Airflow

- Method #3: Connect MongoDB to Snowflake using Estuary Flow

Method #1: Connect MongoDB to Snowflake With Native Cloud Provider Tools & Snowpipe

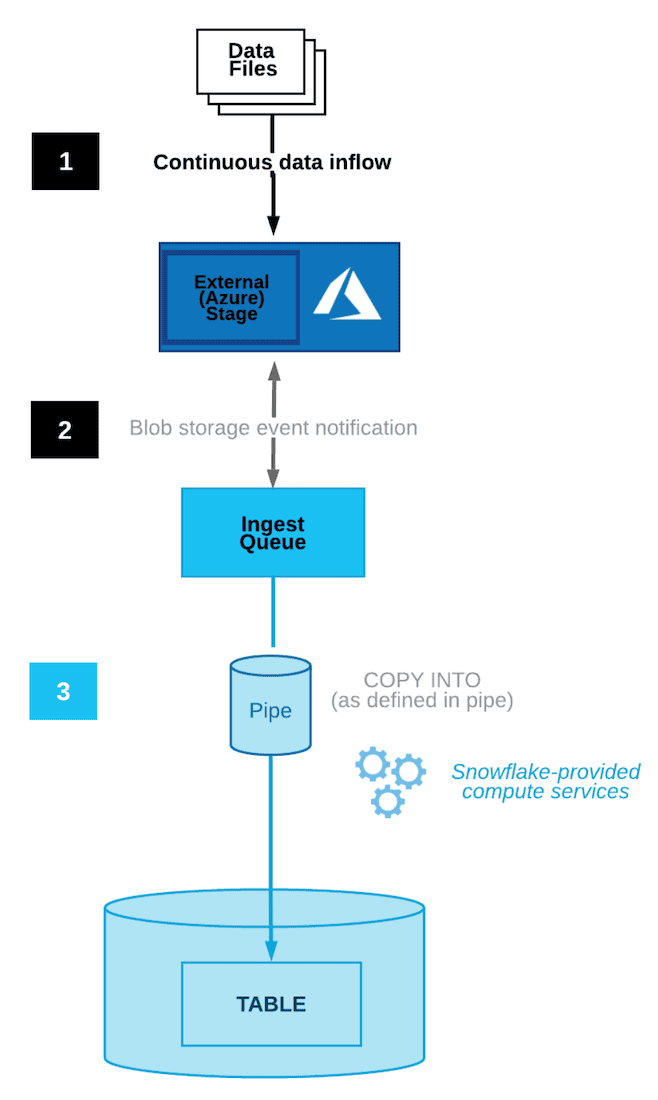

Snowpipe is a Snowflake service for ingesting data. It allows you to move away from scheduled batch loading techniques like the SQL COPY into command towards automated ingestion or near real-time streaming.

At a high level, Snowpipe performs this by loading data as soon as it’s available in a staging environment. You can create smaller micro batches to reduce latency. Your other option for more real-time streaming is to use Snowpipe streaming, which is a separate approach.

YYou might use Snowpipe with the native services offered by your cloud provider, like AWS or Azure. Here are just two examples, one for each cloud provider, of how your platform infrastructure could be designed, and given that, how you could move data from MongoDB to Snowflake with that cloud provider.

- AWS — You could have a Kinesis delivery stream responsible for landing your MongoDB source data into an S3 bucket. If you have an SNS system enabled, you can use that associated successful run id to load data into Snowflake via Snowpipe.

- Azure — You could trigger Snowpipe using an Event Grid message for Blob storage events. Basically, your MongoDB files would be loaded into an external Azure stage. You’d create a blob storage event message, that would notify Snowpipe via Event Grid when those files are ready to be dropped into Snowflake. Snowpipe would then copy those queued files into a target table you created in Snowflake.Snowflake has a complete guide on connecting

Snowpipe to Azure blob storage, which you can find here.

There are a few important considerations with this approach.

First, it requires a thorough understanding of NoSQL databases like MongoDB, Snowflake, and your cloud provider. Troubleshooting and identifying the root cause of problems within a complex data pipeline like this requires significant domain expertise. This can be a difficult hurdle to overcome for immature or small data teams who “wear many hats”.

Second, this approach can be difficult to assign ownership over, especially regarding long-term management. This is because the resources you’re using to create this data pipeline are typically owned by a totally different team outside of Data. Choosing this approach would require significant discussion across other engineering teams to establish clear boundaries of ownership and long-term responsibility.

Third, it will be difficult to schematize your data. Without native tools to add schema to noSQL data, it can be of limited value in your data warehouse.

Method #2: Connect MongoDB to Snowflake With a custom Python Script via Data Orchestration Tool

A second option is to write an ETL pipeline in Python, orchestrated by Airflow or a similar tool.

Assuming you’re using AWS, you could use the MongoToS3Operator to move data from MongoDB to your S3 bucket, then use the SnowflakeOperator to move said data from the S3 bucket into a Snowflake table.

Astronomer, a popular managed service for Airflow, provides useful pipeline examples in their GitHub repository. Astronomer also offer a tutorial you can follow to practice building an ETL pipeline with Python on your local machine.

Pros of connecting MongoDB to Snowflake with Python and Airflow include:

- Coding gives you the flexibility to customize each pipeline.

- Airflow has a large offering of custom sensors, hooks, and operators that can address a wide variety of potential use cases for your organization.

- Airflow offers a visual monitoring and management interface, which is helpful when you're troubleshooting a pipeline error and need to see what's going on at each step.

Of course, there are cons to consider.

Cons of this approach:

- You have to maintain the code for each pipeline. This can become difficult as scale and complexity increase, or if the pipeline needs to be changed regularly.

- The process is slower. This is because you have to wait for an engineer to modify the code itself before you see the changes you requested be enabled. Depending on the urgency of a particular request, and an engineering team's current bandwidth, these requests can remain in the backlog for weeks, or months at a time.

- It can be difficult to maintain consistency across each pipeline if a clearly defined standard doesn't exist for the team to follow. Each developer has their own “style” of approaching problems, syntax, etc. This approach requires teams to spend significant time establishing and documenting a quality standard for design, coding, use of frameworks, hooks, and operators, and more to ensure pipelines are well maintained and expectations are clear.

- As per method #1, it can be quite difficult to manage schema using this method, especially as schema changes.

- It’s a batch process, not real-time. If you need low latency MongoDB to Snowflake, this is not the best approach.

Method #3: Connect MongoDB to Snowflake using Estuary Flow

There are a number of available SaaS tools that can handle your data ingestion needs for you.

This approach is helpful for teams that:

- Need to make data available downstream quickly as possible.

- Prefer not to deal with challenges associated with manual deployment.

These tools can get your source data from MongoDB to Snowflake via packaged connectors. The individual fields from your MongoDB source data may become varchar data type columns as JSON objects in Snowflake, or if you're using a system like Estuary Flow, which handles automated schema conversion, you may be able to sync it in a tabular format.

Once your data becomes available in Snowflake, you can begin using SQL to explore your source data. Because of the nature of this ingestion process, it's important to build a clear strategy around schemas and data organization, and create clear documentation the entire team can access.

There are a number of pros that come with choosing a managed service for your data pipeline. Here are just a couple:

- You can execute much faster. This is true for both making data available to your stakeholders, and resolving issues within the pipeline itself. This is because the managed tool is handling what's going on “under the hood” for you. You no longer need to spend hours troubleshooting in your CLI, or guessing which part of the pipeline is causing your log file error.

Basically, it allows you to skip all the annoying parts of a more typical data ingestion process and deliver faster. This is particularly relevant for teams who own the data ingestion process, but have limited platform, data engineering, or other specialty resources. - It allows you to expand client offerings and services. Most managed tools offer hundreds of existing connectors for various sources and destinations. This gives you more bandwidth to support additional clients, and more complex use cases that may be unfeasible otherwise.

Estuary Flow is one such data pipeline tool. It allows you to create a data flow that connects MongoDB to Snowflake using a web-based UI or command-line interface (CLI) for free, as well as automate how to update schema in Snowflake as it changes in MongoDB.

Steps to move data from MongoDB to Snowflake Using Estuary

Pre-requisites: (see Estuary documentation for MongoDB to Snowflake)

- A MongoDB instance or MongoDB Atlas account

- Ensure you have read access to your MongoDB database ans oplog.rs

- Access to a Snowflake account that includes appropriate access levels to an existing target database, schema, and virtual warehouse.

- Snowflake account host URL formatted using the Snowflake account identifier

Step1: Sign Up for Free on Estuary

Head over to Estuary's website and create a free account. This unlocks access to their streaming connectors for MongoDB and Snowflake.

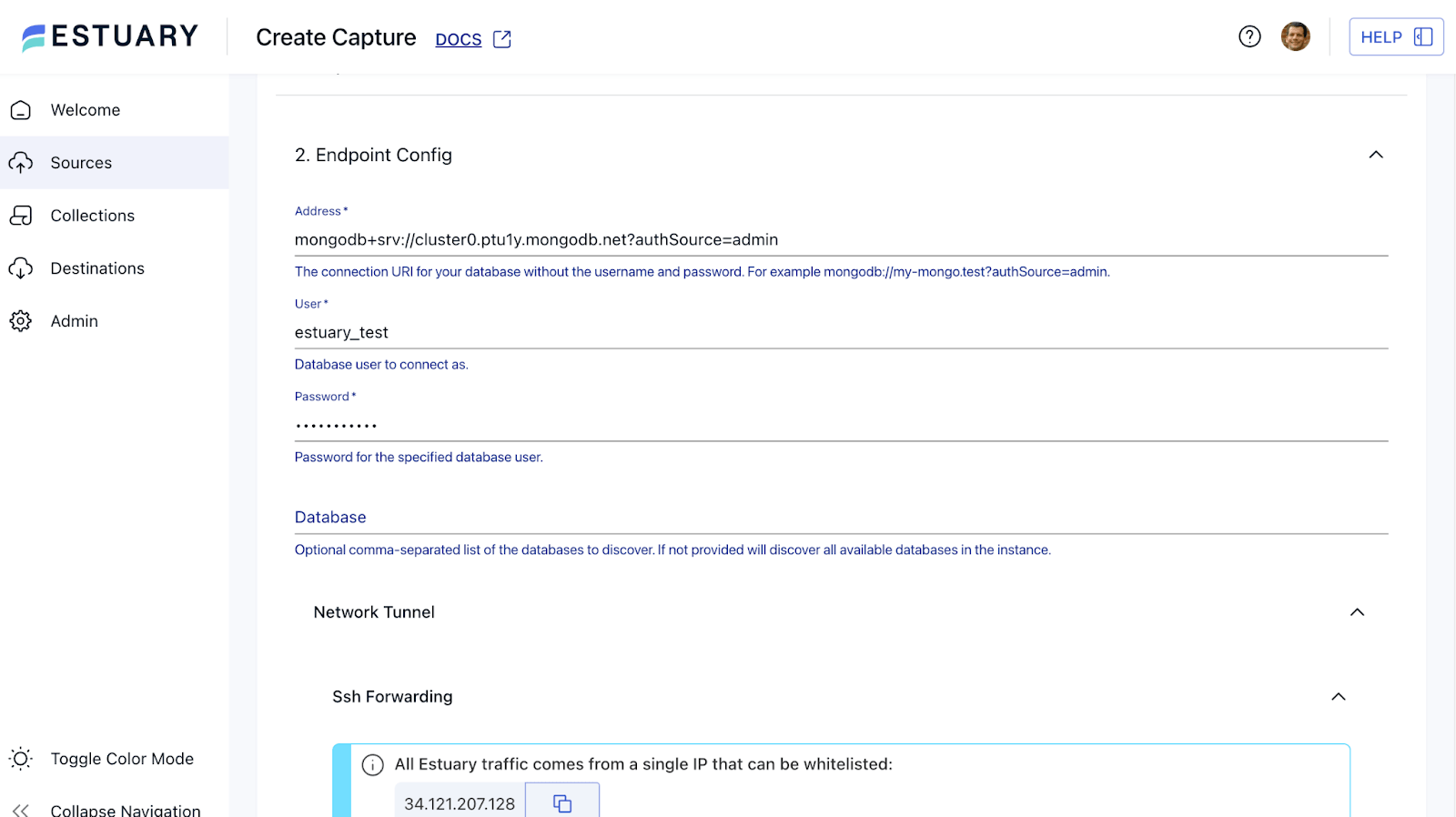

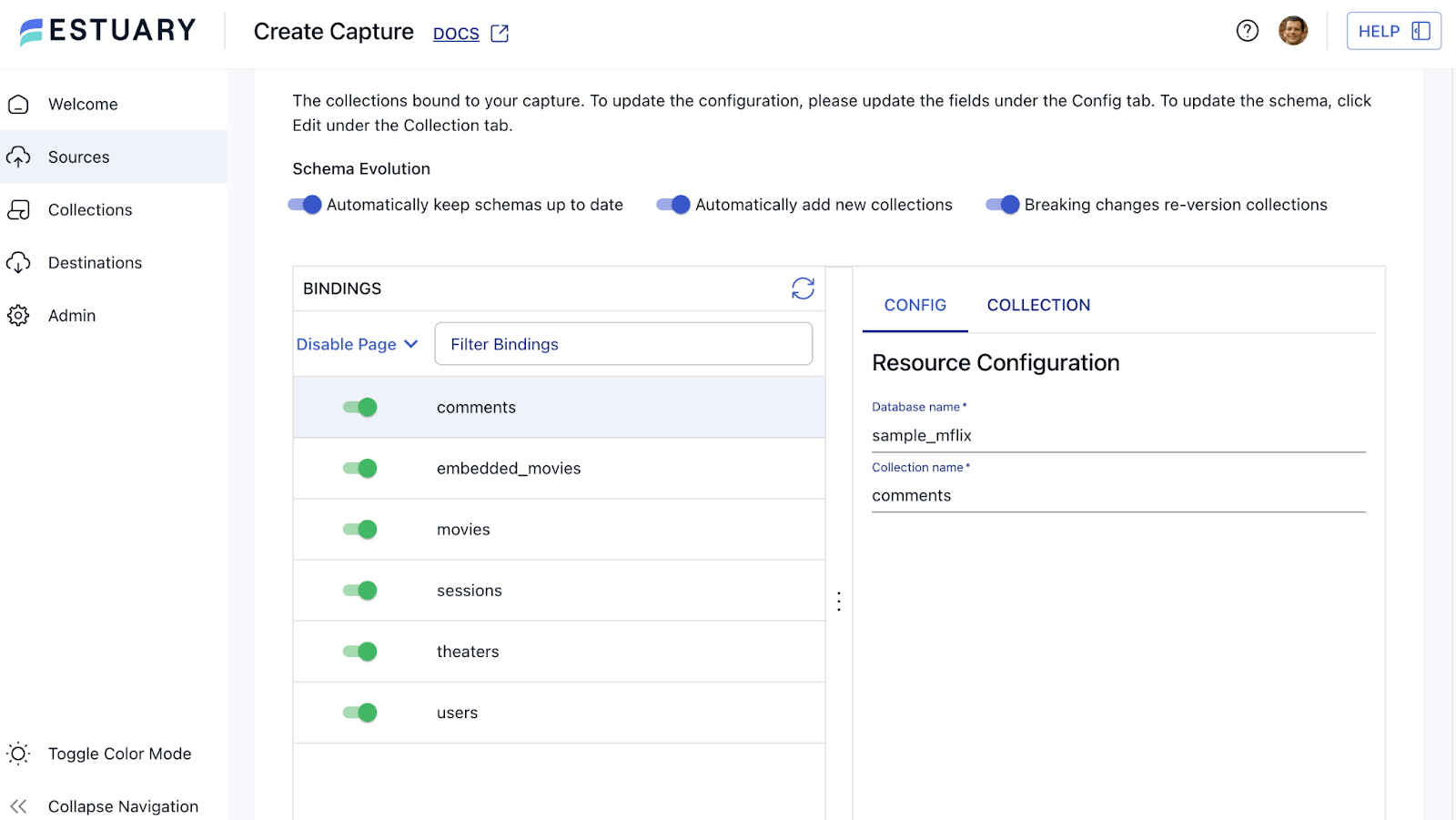

Step 2: Set Up MongoDB Capture

- Within Estuary's platform, navigate to the "Sources" tab and click "New Capture".

- Select "MongoDB" as the data source and choose "Create New Capture".

- Provide your MongoDB credentials (ensuring read access) and, for Atlas users, any necessary whitelisting information.

- Click "Next" to initiate the connection test

After a successful connection, Estuary will automatically discover available databases and schemas within your MongoDB instance. This allows you to easily choose which MongoDB collections you want to extract.

Choose the collections you want, and click NEXT, then TEST your collection or just click SAVE AND PUBLISH. Estuary will automatically start to extract all the historical data and also start to capture all changes as they happen.

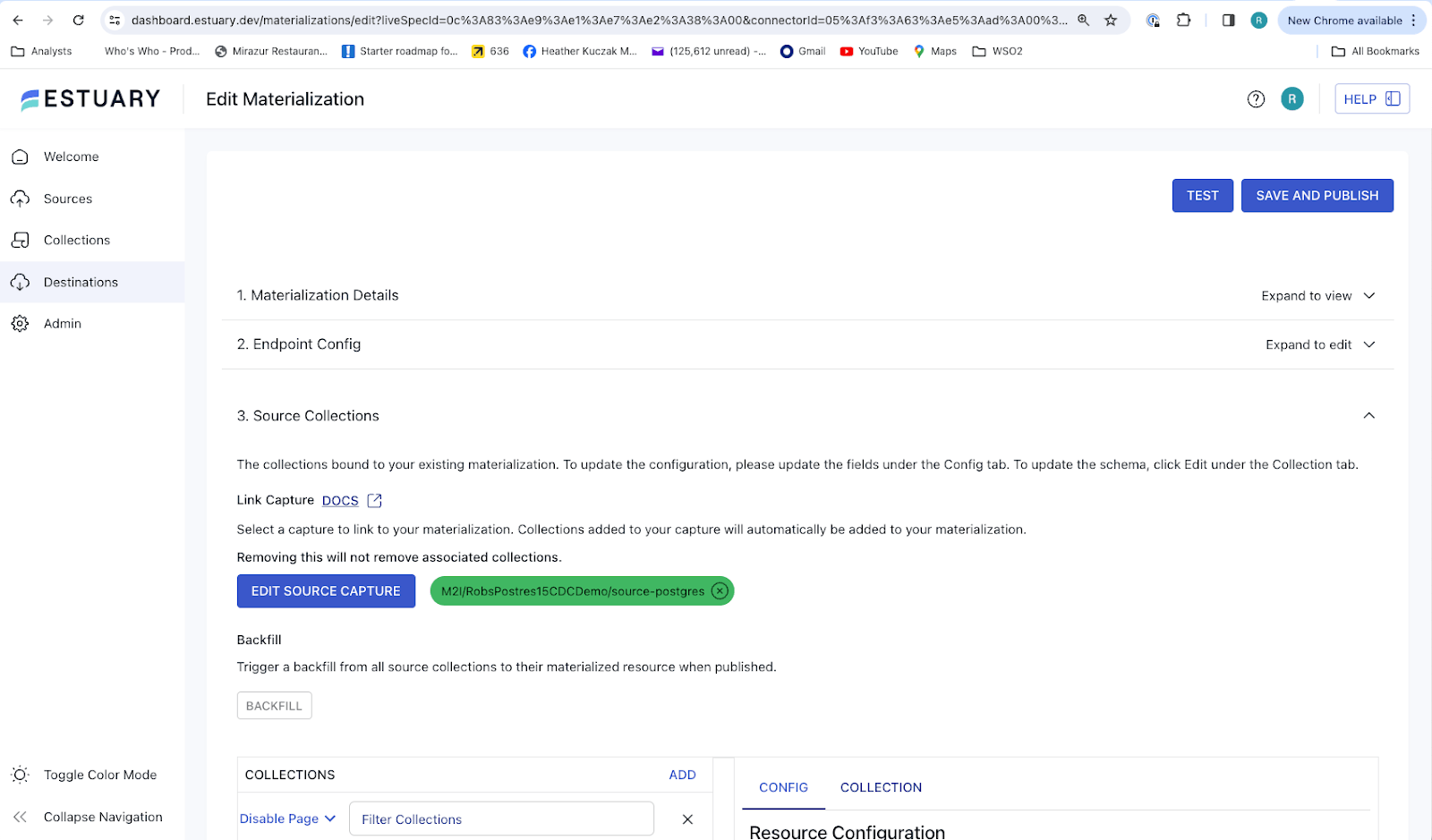

Step 3: Configure Snowflake

Next, go to Destinations and click on NEW MATERIALIZATION. Search for Snowflake and click on Materialization. Enter a unique name and details, the host URL, account, database, and schema, warehouse, role, username and password. Then choose the collections you want to add. Then on TEST to make sure it’s configured correctly, and SAVE AND PUBLISH.

That’s it!

Once everything is configured, Estuary Flow will begin streaming data from MongoDB to Snowflake. Estuary takes care of the continuous data transfer.

Step 5: Monitor Your Data in Snowflake

With the capture running, you can access your newly populated collection within Snowflake for analysis and further processing. You can also set up alerts.

What’s the best way to move data from MongoDB to Snowflake?

As we’ve learned, the best method to load from MongoDB to Snowflake depends on your use case, your team, and the other technology resources at your disposal.

But to put it as succinctly as possible…

- If you have a large team of engineers that excels at building pipelines, and batch is OK, it may make sense to use cloud provider tools and Snowpipe, or better yet, Airflow.

- Otherwise, if you want to focus on delivering business value faster with fewer resources, or need lower latency, you should focus on a managed data pipeline tool like Estuary Flow.

Related articles:

Frequently Asked Questions (FAQ)

1. What are some of the challenges with moving data from MongoDB to Snowflake?

The first is the actual integration. Setting up connections can be hard to setup and even harder to troubleshoot. The second is transforming documents, which are non-relational, into a relational schema. The final challenge is implementing real-time streaming using change data capture (CDC.) It is not recommended to do this on your own. Evaluate vendors carefully, as many implement batch CDC, not streaming.

2. What is the best way to move data from MongoDB into Snowflake?

There is no one good answer. For low latency or near real-time integration, change data capture (CDC) to snowpipe (streaming) is the best answer. When using dbt be sure to use incremental materializations. For batch, there are several answers Airflow is a great option for batch if you have lots of custom tasks and sources and are comfortable investing in staff to implement it. ELT vendors provide good batch integration options that require less staff. Most support dbt, which is the most commonly used technology for transforming data in Snowflake other than custom SQL.

3. Is it better to implement batch or streaming integration?

It depends. In general, CDC that captures changes from the transaction or write-ahead log (WAL) is much more efficient with the lowest load on the source database, and delivers the lowest latency. This is also true for MongoDB. But it is also the most complex for a vendor to get right, and vendors often charge more. So evaluate CDC vendors carefully. If you only need a small amount of data, and don’t care about low latency, direct queries may be the better answer.