Are you trying to figure out how to migrate data from Kafka to Redshift? Well, you’ve found a perfect guide.

Before beginning, let’s start with a use case that might sound familiar to set the stage for why this migration is crucial. It’s important to understand the requirements because the solution that you choose should fulfill your organization’s larger data processing strategy.

In order to complete this use case, let’s assume you have been asked to:

- Move source data from Kafka to a warehousing tool, Amazon Redshift. And to complete this, you might use Kafka Connect to import/export data from/to other systems.

- Capture historical data as well as real-time data from the source. And then these changes should reflect in your Amazon Redshift warehouse in real time.

- The data is going to be used by downstream applications for analytical purposes.

There are a variety of tools available in the market to fulfill the above parameters. This article will feature a few of the possible approaches. Again, before choosing any of the tools or approaches, you should consider:

- Maintenance

- Scalability

- Available resources

- Potential benefits

First, some introductions…

What is Kafka?

Kafka, developed at LinkedIn in 2011 and later donated to the Apache Foundation, is an open-source, distributed Event Streaming software Platform (ESP). It allows the development of real-time event-driven applications.

Kafka was initially developed to handle data (referred to as messages) queues and to manage the real-time streaming of data from different applications. Data generated by producers (front-end servers) is stored in the form of a stream in the Kafka cluster (also referred to as a data broker). This data broker acts as an intermediate system between all producers and consumers (end-user). A Kafka topic is a user-defined category to organize messages. Each topic has a unique name across the entire Kafka cluster. So the producers write data on topics in Kafka, and the consumers will read the data from these topics. Whenever a consumer generates a request, it will consume the data from the cluster.

Let’s understand some of Kafka’s best features:

- Scalability: It’s a highly scalable distributed system with no downtime.

- High volume: It can continuously handle terabytes of data generated by producers and seamlessly send it to consumers.

- Fault tolerance: It handles failure and data recovery with replicas stored on some other systems.

Apache Kafka can be used either on its own or with its managed version: Confluent. Basically, the Confluent platform is built on Apache Kafka, which makes it easy to manage data streaming.

What is Redshift?

Redshift, developed by Amazon, is a cloud-based, petabyte-scale, fully-managed data warehouse service. It is specifically built to support large-scale data analytics and data warehousing. It stores data from various sources and applications for further analysis with business intelligence tools or supports machine learning workflows.

Some of the advantages of Redshift are:

- Cloud Data Warehouse: You don’t need to provision hardware and set up your server/database. This overcomes the additional cost and overhead maintenance.

- Easy to Set Up and Manage: You just need to launch your data warehouse with the desired configuration that you need.

- Massive Parallel Processing (MPP): It enables fast execution of complex queries operating on voluminous data.

- Columnar Storage: Redshift stores data in column-based format. This type of storage optimizes the analytical performance of the query as it reduces the overall disk I/O requirement.

- Result Caching: This is one of the important features of Amazon Redshift to reduce query execution time and improve system performance. It caches the result of repeated queries, and when these queries are rerun, it returns the cached result.

Move Data from Kafka to Redshift

Using the below methods, you can quickly migrate your data from Kafka to Amazon Redshift:

Method 1: Kafka to Redshift using Kafka Connect Amazon Redshift Sink Connector.

Method 2: Using SaaS Alternative to Move Data from Kafka to Redshift.

Method 1: Kafka to Redshift using Kafka Connect Amazon Redshift Sink Connector

Kafta Connect is an open-source tool to stream data between Apache Kafka and other systems. The Amazon Redshift S3 Sink Connector allows you to export data from Kafka to Amazon Redshift.

Features of the Redshift Sink Connector

Let’s take a look at the key features of the Kafka to Redshift Connector:

- Delivery at least once: The connector guarantees the delivery of records from Kafka topics at least once.

- Dead letter queue: This feature in Kafka Connector acts as a service that stores messages that fail to reach their destination due to improper message format or deserialization.

- Supports scheduled rotations: The connector supports regularly scheduled rotations for uploading files.

- Multiple-tasks: The connector supports executing multiple tasks at a time.

- Time-based partitioning: The connector supports time-based partitioning on an hourly or daily basis.

- Data format: Supports data format with static or dynamic schema.

Amazon Redshift Sink/S3 connector can be used with:

1. Confluent Platform: This is a self-managed, enterprise-grade distribution of Apache Kafka.

2. Confluent Cloud: This is a fully managed, cloud-native service for Apache Kafka.

Confluent Platform

You can use an Amazon Sink connector for the Confluent platform to export data. It will continuously poll data from Kafka and then write this data into Redshift.

Confluent Cloud

You can use an Amazon S3 connector for the Confluent Cloud to export data. It exports/streams data from Kafka and loads it into Amazon Redshift. The connector supports data from Kafka in JSON, Bytes, Avro, or Protobuf format and exports data to Amazon S3. Then you can use a copy command to move data from Amazon S3 to Redshift.

Limitations of the Sink Connector

- Poor network environments can affect the migration process. You need to ensure that the connector can reach your service.

- This connector cannot consume data that contains a nested database structure. Hence, cleaning data before transformation is an important step.

- The connector doesn’t support array data types.

- While exporting data from Kafka, the confluent cloud cluster and target Redshift/AWS S3 cluster must be in the same AWS region.

- If you are using a schema-based message format, check if a valid schema is available in the confluent cloud schema registry.

For a detailed step-by-step guide to move data from Kafka to Redshift, see resources for:

- Confluent Platform

- Confluent Cloud

Method 2: SaaS Alternative

There are various tools available in the market that can migrate your data from Kafka to Redshift. But, this approach is best-fitted if:

- You want to reduce the manual deployment and challenges

- But you still want your data to be streamed immediately to a variety of downstream applications

Estuary Flow is one such SaaS alternative that allows you to create a data flow connecting Kafka to Amazon Redshift. Once deployed, the pipeline operates continually in real time with no repetitive efforts.

Like Confluent is built on Kafka, Flow is a managed tool built on Gazette. It provides a platform for historical and real-time data operations, and offers exactly-once processing guarantees.

You can work with the Flow in two ways: CLI tool or graphical user interface. From collection to operalization, Flow guides data through its life cycle in real time.

Prerequisites to Move Data from Kafka to Redshift with Estuary Flows

- Estuary Flow account.

- A Kafka cluster with bootstrap.servers, connection security enabled with TLS.

- S3 bucket for staging temporary files.

- AWS root or IAM user with read and write access to the S3 bucket.

Steps to Move Data from Kafka to Redshift with Estuary Flow

- Login or sign up for a new Estuary Flow account.

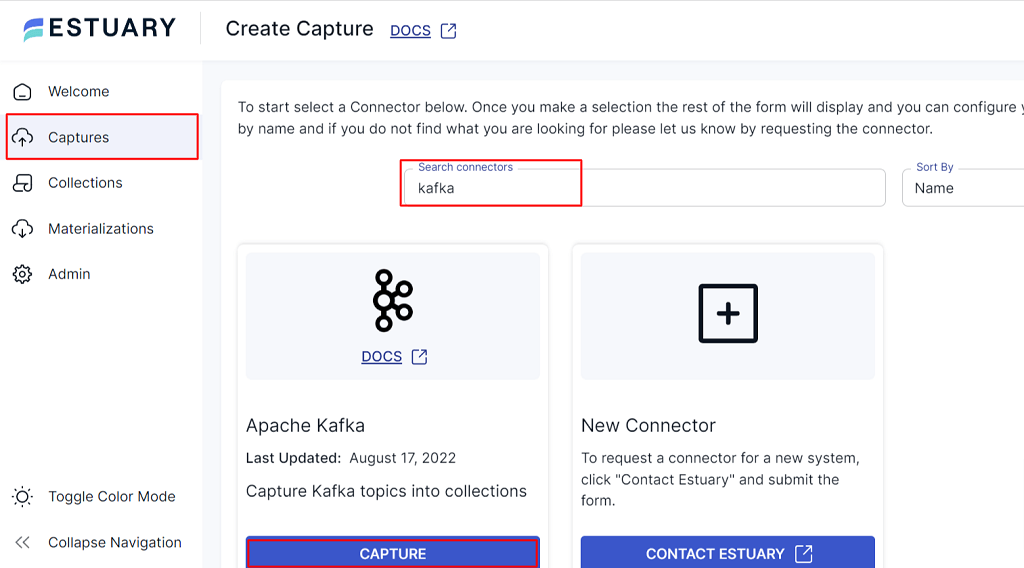

- Once you have logged in, click on Capture > + New Capture.

- Search for the Kafka connector and click Capture.

- Provide enter a unique name for your capture.

- Similarly, fill in the details for Endpoint Config. Enter the required details for Bootstrap Servers, TLS connection settings, and Authentication, and click "Next" to test the connection.

- Flow uses the provided information to initiate a connection to Kafka. Next, a Collection selector will appear, showing the list of collections, representing Kafka topics. In this list, deselect the name of connections that you don’t want to capture.

- You can view the generated capture definition and the schema for each collection in the Specification Editor. For each collection, modify its JSON schema. The schema will be essential to how the data is mapped to Redshift.

- Click Save and Publish. When the capture is published successfully, you will receive a notification.

- In the open dialog box, click Materialize Collections to continue.

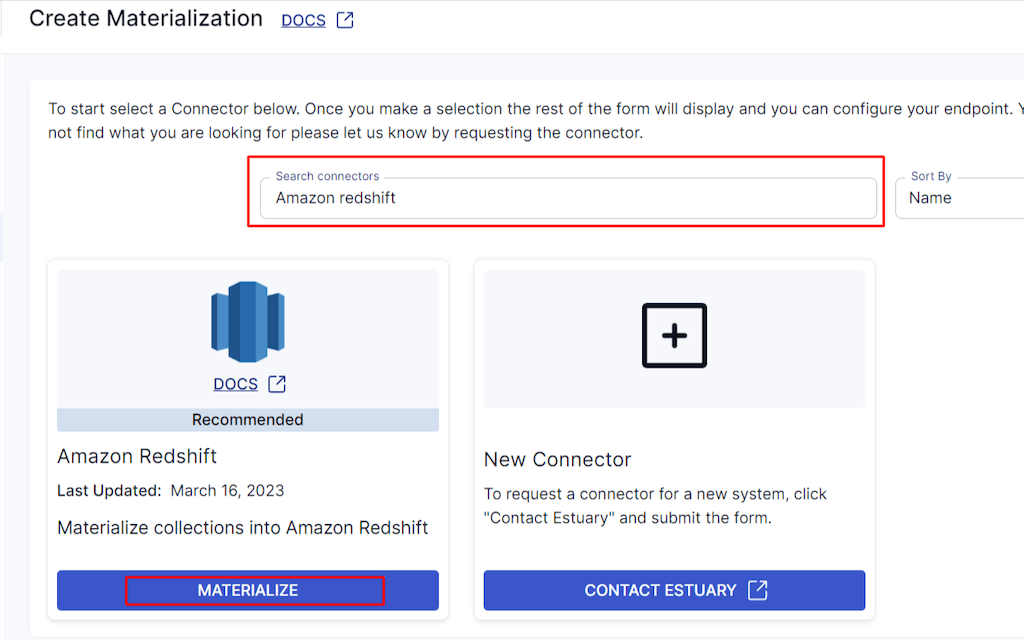

- A Create Materialization window will appear. In the search connectors, type Amazon Redshift and enter.

- Now an Amazon Redshift tile will appear. Click on Materialize.

- Choose a unique name for the materialization and fill in the Endpoint Config details. This connector materializes Flow collections (from your Kafka topics) into tables in an Amazon Redshift database.

- Click on Save and Publish. You will receive a notification when the data Flow is published successfully.

- From now on, as soon as new data that streams through your Kafka topics will be materialized to the appropriate Redshift table immediately.

Features of Estuary Flow

- Estuary Flow handles batch data as well as event data (real-time data).

- Historical data is handled more seamlessly than in Kafka.

- It offers a wide range of data connectors, including databases and SaaS applications.

- Once deployed, the pipeline will operate continuously without any manual work.

Summary

For moving data from Kafka to Redshift, you can opt for several tools available in the market. Depending upon the requirements of your application and software availability needs, any of the above approaches might be more suitable than others.

If you are a team of engineers and prefer the flexibility of a programmatic approach and software deployment, use the Amazon Redshift Sink/S3 connector method.

However, if you are looking for a seamless automated tool that will set you free from all the hassle and engineering burden, you might benefit from the SaaS Alternative approach. Try Flow today... Your first pipeline is free!