Google has you covered when it comes to data management services. It offers advanced tools and applications for data handling, including storage, processing, visualization, and analytics. Google Cloud Storage and BigQuery are two core data services in Google Cloud: one stores files as objects, and the other is a data warehouse built for analytics. In this article, we’ll guide you through the process of migrating data from Google Cloud Storage to BigQuery.

Google Cloud Storage Overview


Google Cloud Storage (GCS) is an object storage service provided by Google Cloud. An object is an immutable piece of data: a file of any format, together with its metadata. GCS stores objects in containers called buckets. Every bucket belongs to a project, and projects can be grouped under an organization so that teams share a common workspace. The resource hierarchy in GCS is therefore organization > project > bucket > object, and you can optionally create managed folders inside a bucket to group objects and control access to them.
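Each object is addressed by its bucket and its object name, typically written as a gs:// URI. As a minimal illustration (standard-library Python only, with a hypothetical `parse_gcs_uri` helper), the sketch below splits such a URI into those two parts. It assumes a standard bucket, where slashes in an object name are just characters in a flat name rather than real directories.

```python
from typing import NamedTuple


class GcsPath(NamedTuple):
    """The two parts that identify an object: its bucket and its name."""
    bucket: str
    object_name: str


def parse_gcs_uri(uri: str) -> GcsPath:
    """Split a URI such as gs://my-bucket/sales/2024/orders.csv (hypothetical helper).

    Everything after the first slash is the object name; in a standard
    (flat-namespace) bucket, "sales/2024/" is part of that name, not a folder.
    """
    scheme = "gs://"
    if not uri.startswith(scheme):
        raise ValueError(f"not a gs:// URI: {uri!r}")
    bucket, _, object_name = uri[len(scheme):].partition("/")
    if not bucket:
        raise ValueError(f"missing bucket name in {uri!r}")
    return GcsPath(bucket, object_name)


if __name__ == "__main__":
    print(parse_gcs_uri("gs://my-bucket/sales/2024/orders.csv"))
```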

Key features of Google Cloud Storage include:

- Global and Regional Storage: When you create a bucket, you choose its location type: a single region, a dual-region, or a multi-region. Regional storage offers lower latency for workloads running in the same region, while dual-region and multi-region storage improve availability by storing data across multiple regions. You can select the option that best fits your specific requirements.

- Object Lifecycle Management (OLM): OLM lets you manage objects automatically over their lifetime. You define a lifecycle configuration, which is a set of rules that apply to objects in a bucket. When an object meets a rule's conditions, such as reaching a certain age, Cloud Storage performs the rule's action, for example deleting the object or moving it to a cheaper storage class.

- Scalability: With automatic scaling and load balancing, GCS handles growing storage and request volumes without manual provisioning. This makes it a suitable storage solution whether you want to store a few files or petabytes of data.
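Lifecycle rules are written as JSON, where each rule pairs an action with the conditions that trigger it. The Python sketch below uses that shape for a hypothetical two-rule policy and simulates locally which action would apply to an object of a given age. It models only the `age` condition and a simplified precedence (a Delete action wins over SetStorageClass, as Google documents); it is an illustration, not how Cloud Storage evaluates rules internally.

```python
from datetime import datetime, timedelta, timezone

# A hypothetical lifecycle policy in the JSON shape Cloud Storage uses:
# move objects to Nearline after 30 days, delete them after 365 days.
LIFECYCLE_CONFIG = {
    "rule": [
        {
            "action": {"type": "SetStorageClass", "storageClass": "NEARLINE"},
            "condition": {"age": 30},
        },
        {"action": {"type": "Delete"}, "condition": {"age": 365}},
    ]
}


def matching_actions(created: datetime, now: datetime, config: dict) -> list:
    """Return the actions of every rule whose `age` condition is met.

    Local simulation: only the `age` condition (whole days since creation)
    is modeled; rules without an `age` condition are ignored here.
    """
    age_days = (now - created).days
    return [
        rule["action"]
        for rule in config["rule"]
        if "age" in rule["condition"] and age_days >= rule["condition"]["age"]
    ]


def effective_action(actions: list):
    """Pick one action: Delete takes precedence over SetStorageClass."""
    for action in actions:
        if action["type"] == "Delete":
            return action
    # Simplification: otherwise return the first match (or None if nothing matched).
    return actions[0] if actions else None


if __name__ == "__main__":
    now = datetime(2024, 6, 1, tzinfo=timezone.utc)
    for days in (10, 45, 400):
        actions = matching_actions(now - timedelta(days=days), now, LIFECYCLE_CONFIG)
        print(days, effective_action(actions))
```

A 10-day-old object matches no rule, a 45-day-old object is moved to Nearline, and a 400-day-old object satisfies both rules, so the Delete action applies.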

Google BigQuery Overview


BigQuery is a cloud-based enterprise data warehouse from Google Cloud. It allows you to manage and analyze your data with built-in features such as geospatial analysis, machine learning, and business intelligence. BigQuery stores data in a columnar format, so a query reads only the columns it references, which reduces the amount of data processed for each query. Additionally, BigQuery's scalable, distributed query engine lets you query terabytes of data in seconds and petabytes of data within minutes.
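A back-of-the-envelope calculation shows why the columnar layout matters. The Python sketch below compares the bytes read by a row-oriented store and by a columnar store for a query that touches two of five columns. The table, its row count, and its column widths are made up, and the model ignores compression and encoding, so treat the numbers as an illustration rather than a BigQuery cost estimate.

```python
# Hypothetical table: 10 million rows and five fixed-width columns (bytes per value).
ROWS = 10_000_000
COLUMN_BYTES = {
    "order_id": 8,
    "customer_id": 8,
    "amount": 8,
    "country": 16,
    "notes": 200,
}


def bytes_scanned_row_store(rows: int, column_bytes: dict) -> int:
    """A row-oriented store reads whole rows, so every column is scanned."""
    return rows * sum(column_bytes.values())


def bytes_scanned_columnar(rows: int, column_bytes: dict, selected: list) -> int:
    """A columnar store reads only the columns the query references."""
    return rows * sum(column_bytes[name] for name in selected)


if __name__ == "__main__":
    # e.g. SELECT country, SUM(amount) FROM orders GROUP BY country
    query_columns = ["country", "amount"]
    row_bytes = bytes_scanned_row_store(ROWS, COLUMN_BYTES)
    col_bytes = bytes_scanned_columnar(ROWS, COLUMN_BYTES, query_columns)
    print(f"row store: {row_bytes / 1e9:.2f} GB, columnar: {col_bytes / 1e9:.2f} GB")
```

Under these assumptions, reading only `country` and `amount` scans 240 MB instead of 2.4 GB, a tenfold reduction.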

Key features of Google BigQuery include:

- Unified Solution: With the introduction of BigQuery Studio, you can leverage a unified interface for end-to-end analytical workflows from data ingestion and preparation to data visualization and exploration.

- Code Assistance: BigQuery offers an integrated AI collaborator called Duet AI (since rebranded as Gemini in BigQuery), which provides contextual assistance for writing SQL and Python code. It suggests code blocks, functions, and fixes, and its chat-based interface offers real-time guidance for specific tasks.

Methods to Load Data from Google Cloud Storage to BigQuery

- Method 1: Load Data from Google Cloud Storage to BigQuery Manually

- Method 2: Load Data from Google Cloud Storage to BigQuery Using Estuary Flow

Method 1: Load Data from Google Cloud Storage to BigQuery Manually

In this method, you’ll manually load GCS data into BigQuery using the BigQuery Data Transfer Service, a built-in Google Cloud feature. Because GCS and BigQuery are both part of Google Cloud, you can complete every step in the Google Cloud console. Here’s the step-by-step guide:

Prerequisites

Permissions Required

Before you continue, ensure that you have the following permissions to carry out the data transfer:

For BigQuery

- bigquery.transfers.update permission for creating a transfer.

- bigquery.datasets.get and bigquery.datasets.update permissions on the BigQuery dataset.

For GCS

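- storage.objects.get permission on each bucket you want to load data from. If your Cloud Storage URI uses wildcards, you also need storage.objects.list, and if you configure the transfer to delete source files after loading, you also need storage.objects.delete.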
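Before starting, it can help to compare what you have been granted against this list. The Python sketch below is a hypothetical helper, not a Google tool: you would feed it the permissions you actually hold (for example, the list returned by a check such as Cloud Storage's `test_iam_permissions`), and it reports what is missing. The wildcard and delete-source extras reflect Google's transfer documentation as we understand it.

```python
# Permissions a GCS-to-BigQuery transfer needs, grouped by service.
BIGQUERY_PERMISSIONS = {
    "bigquery.transfers.update",
    "bigquery.datasets.get",
    "bigquery.datasets.update",
}
STORAGE_PERMISSIONS = {"storage.objects.get"}


def required_permissions(uses_wildcard: bool = False, deletes_source: bool = False) -> set:
    """Build the permission set for a transfer with the given options."""
    required = BIGQUERY_PERMISSIONS | STORAGE_PERMISSIONS  # a new set; constants stay untouched
    if uses_wildcard:
        required.add("storage.objects.list")  # listing is needed to expand wildcards
    if deletes_source:
        required.add("storage.objects.delete")  # only if source files are removed after loading
    return required


def missing_permissions(granted, **options) -> list:
    """Return the required permissions absent from `granted`, sorted for readability."""
    return sorted(required_permissions(**options) - set(granted))


if __name__ == "__main__":
    granted = ["bigquery.transfers.update", "bigquery.datasets.get", "storage.objects.get"]
    print(missing_permissions(granted, uses_wildcard=True))
```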

Step 1: Create a Project and Set Up BigQuery

- Go to the Google Cloud console and navigate to the project selection page.

- Select an existing project or create a new one.

- Verify that the BigQuery API is enabled for the project you selected. If it isn't, enable it from the API Library.

- In the Google Cloud console, go to the API Library and open BigQuery Data Transfer API. This API must be enabled before you can create data transfers in your BigQuery project.

- Select your existing project on the API library page and click the ENABLE button.
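If you prefer the command line, the same two APIs can be enabled with the gcloud CLI's `gcloud services enable` command. The Python sketch below assembles that command for a placeholder project ID and runs it only when `dry_run=False`; running it assumes the gcloud CLI is installed and authenticated.

```python
import shlex
import subprocess

# Service names of the two APIs enabled in this step.
REQUIRED_APIS = ("bigquery.googleapis.com", "bigquerydatatransfer.googleapis.com")


def enable_apis_command(project_id: str) -> list:
    """Build a `gcloud services enable` command for the required APIs."""
    return ["gcloud", "services", "enable", *REQUIRED_APIS, f"--project={project_id}"]


def enable_apis(project_id: str, dry_run: bool = True) -> str:
    """Return the command as a shell string; execute it only if dry_run is False."""
    cmd = enable_apis_command(project_id)
    if not dry_run:
        subprocess.run(cmd, check=True)  # raises CalledProcessError if gcloud fails
    return shlex.join(cmd)


if __name__ == "__main__":
    print(enable_apis("my-project"))  # "my-project" is a placeholder project ID
```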

Step 2: Create a BigQuery Dataset to Store GCS Data

- In the Google Cloud console, go to the BigQuery page.

- In the Explorer pane, find the project you selected in Step 1.

- Click Actions (the three-dot menu next to the project) > Create dataset.

- On the Create dataset page, enter a unique name for Dataset ID, choose a Location type, and configure other details as required.

- Click CREATE DATASET.
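Dataset IDs follow BigQuery's naming rules: letters, digits, and underscores only, up to 1,024 characters. The Python sketch below checks a candidate name against those rules before you type it into the form, and builds the `project.dataset` reference you will use later in queries. The helper names and sample IDs are hypothetical.

```python
import re

# BigQuery dataset names: letters, digits, and underscores, up to 1,024 characters.
# Spaces and characters such as "-", "&", "@", or "%" are not allowed.
_DATASET_ID = re.compile(r"[A-Za-z0-9_]{1,1024}")


def is_valid_dataset_id(dataset_id: str) -> bool:
    """Check a proposed Dataset ID before typing it into the console."""
    return _DATASET_ID.fullmatch(dataset_id) is not None


def qualified_dataset(project_id: str, dataset_id: str) -> str:
    """Return the project.dataset reference used in queries and client libraries.

    In GoogleSQL, wrap it in backticks if the project ID contains hyphens.
    """
    if not is_valid_dataset_id(dataset_id):
        raise ValueError(f"invalid dataset ID: {dataset_id!r}")
    return f"{project_id}.{dataset_id}"


if __name__ == "__main__":
    for candidate in ("gcs_landing", "gcs-landing", "sales data"):
        print(candidate, is_valid_dataset_id(candidate))
    print(qualified_dataset("my-project", "gcs_landing"))
```

If you would rather create the dataset in code, the `google-cloud-bigquery` client library provides `Client.create_dataset`; the sketch avoids that dependency so it runs without credentials.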

Step 3: Transfer Google Cloud Storage Data to BigQuery

- Navigate back to the BigQuery dashboard.

- Click the Data transfers option from the left pane.

- On the Data transfer page, click + CREATE TRANSFER.

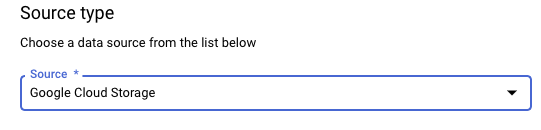

- The Create transfer page has several sections to fill out. In the Source type section, choose Google Cloud Storage as the Source.

Image Source: Google

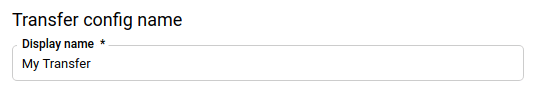

- In the Transfer config name section, enter a unique Display name for the transfer. Any label that helps you identify the transfer later will do.

Image Source: Google

- In the Schedule options section:

  - Choose how often the transfer runs under Repeat frequency. Options include Hours, Days, and On-demand.

  - Select Start now or Start at a set time according to your requirements.

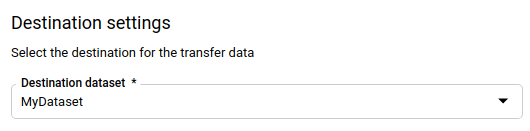

- Now, in the Destination settings section, choose the dataset you created in Step 2 as the Destination dataset.

Image Source: Google

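- In the Data source details section, enter the name of the table in your BigQuery dataset for Destination table. For Cloud Storage URI, enter the gs:// URI of the files to load, for example gs://my-bucket/exports/*.csv (wildcards are supported). Then choose the File format and configure the remaining options as needed.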

- Optionally, in the Service account menu, select a service account associated with your Google Cloud project.

- Optionally, adjust the Notification options and Advanced options sections.

- Click Save.

Once you save the configuration, the transfer runs on the schedule you set and loads the matching GCS files into your BigQuery table.
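The console form maps onto a transfer configuration that can also be created with the `bq mk --transfer_config` command or the BigQuery Data Transfer Service client library. As a hedged sketch, the Python below only assembles the `params` you would pass for a Cloud Storage transfer. The keys (`data_path_template`, `destination_table_name_template`, `file_format`, `skip_leading_rows`) follow Google's documented `bq` examples as we understand them; the helper name, bucket, and table are hypothetical, so check the current Data Transfer Service reference before relying on it.

```python
import json


def gcs_transfer_params(uri: str, table: str, file_format: str = "CSV",
                        skip_leading_rows: int = 0) -> dict:
    """Assemble params for a Cloud Storage transfer (hypothetical helper)."""
    if not uri.startswith("gs://"):
        raise ValueError("Cloud Storage URI must start with gs://")
    params = {
        "data_path_template": uri,                 # the Cloud Storage URI field
        "destination_table_name_template": table,  # the Destination table field
        "file_format": file_format,                # e.g. CSV, JSON, AVRO, PARQUET, ORC
    }
    if file_format == "CSV":
        # Values are passed as strings, matching the documented bq examples.
        params["skip_leading_rows"] = str(skip_leading_rows)  # 1 skips a header row
    return params


if __name__ == "__main__":
    params = gcs_transfer_params("gs://my-bucket/exports/*.csv", "orders", skip_leading_rows=1)
    # Printed as JSON so it could be pasted into: bq mk --transfer_config --params='...'
    print(json.dumps(params))
```

With the `google-cloud-bigquery-datatransfer` client library, a dictionary like this would go in the `params` field of a `TransferConfig` whose `data_source_id` is `google_cloud_storage`, created through `DataTransferServiceClient.create_transfer_config`. The sketch stays free of that dependency so it runs without credentials.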

Method 2: Load Data from Google Cloud Storage to BigQuery Using Estuary Flow

Estuary Flow is a cloud-based data integration platform that automates loading data from GCS into BigQuery in real time. With 150+ pre-built connectors, monitoring capabilities, and incremental updates, it streamlines the complete data migration process. So, if you're looking for an efficient and reliable way to load GCS data into BigQuery, a SaaS tool like Estuary Flow is a great option. Here's a step-by-step guide:

Step 1: Configure Google Cloud Storage as a Source

- Sign up or sign in to your Estuary Flow account.

- After logging in, click the Sources tab from the left navigation menu in the dashboard.

- On the Sources page, click the + NEW CAPTURE button.

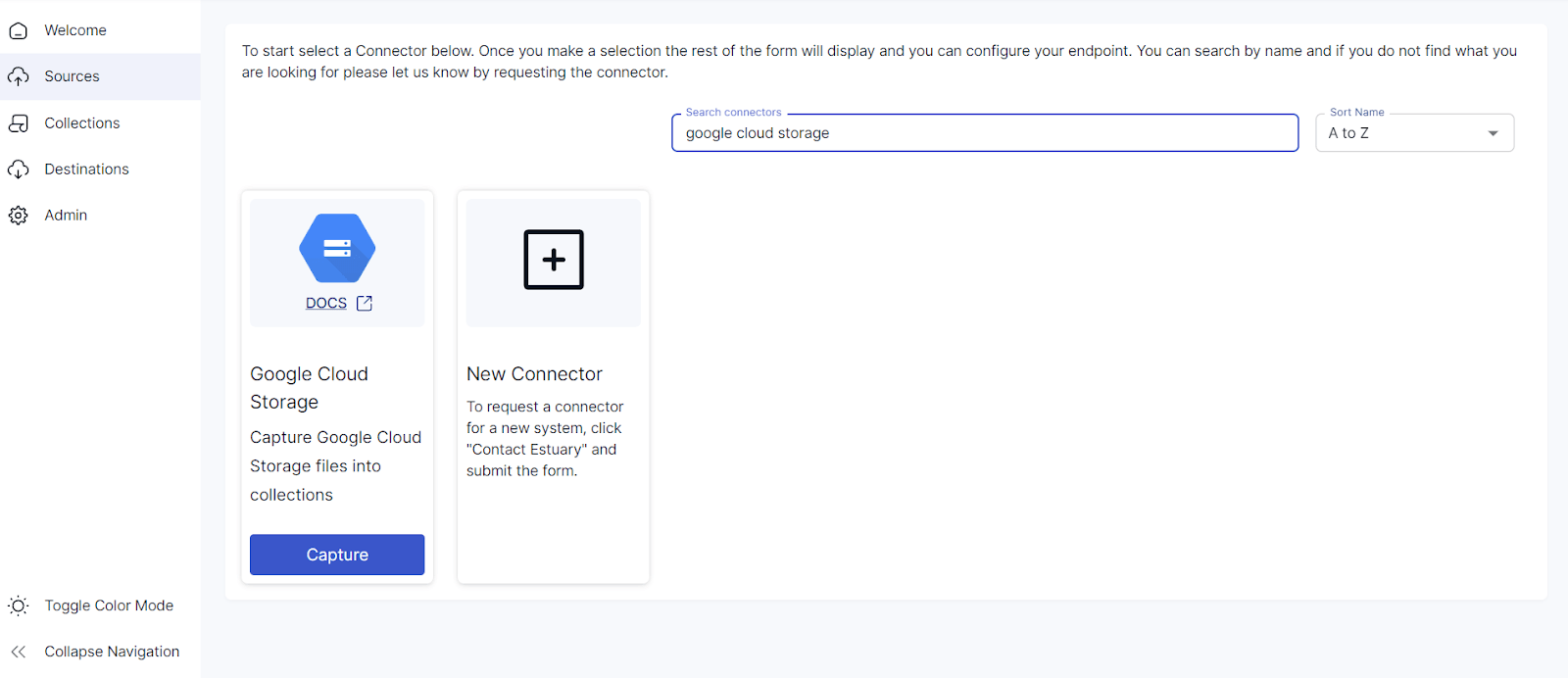

- Next, type Google Cloud Storage in the Search connectors field.

- Click the Capture button of the GCS connector when you find it in the search results.

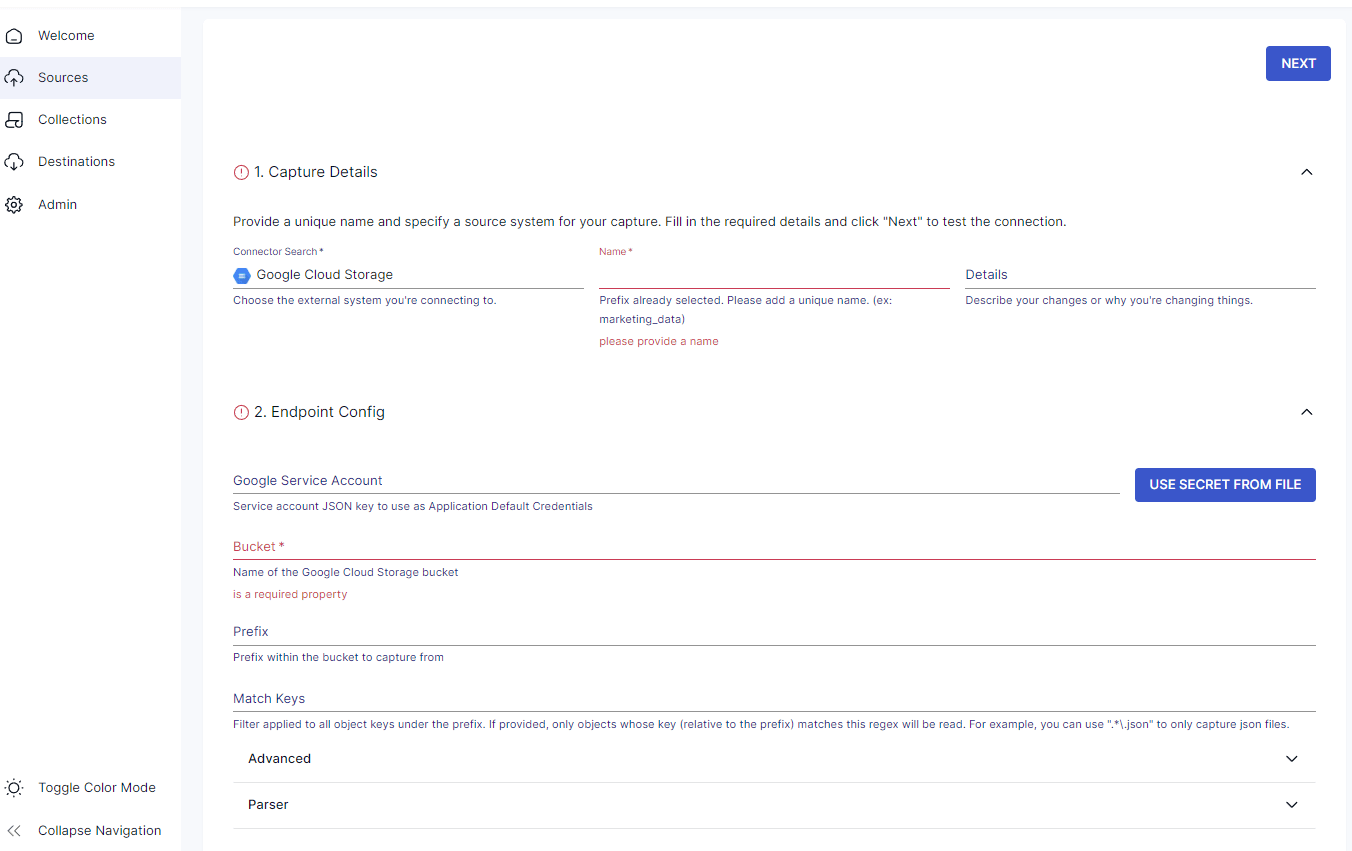

- You'll be redirected to the Create Capture page. Enter a unique name for the capture in the Capture details section.

- In the Endpoint Config section, enter your Google service account credentials and Bucket name. Optionally, set a Prefix to limit the capture to a path within the bucket and Match Keys to filter objects with a regular expression.

- Click NEXT > SAVE AND PUBLISH.
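To get a feel for how Prefix and Match Keys narrow what gets captured, here is a local Python sketch that filters a list of object keys. It is not Estuary's implementation: it assumes the prefix is a plain string prefix and that Match Keys is a regular expression searched for within each key, and the key names are invented for illustration.

```python
import re
from typing import Iterable, List, Optional


def select_keys(keys: Iterable[str], prefix: str = "",
                match_keys: Optional[str] = None) -> List[str]:
    """Keep object keys under `prefix` that also match the `match_keys` regex.

    Assumption: the regex is applied with re.search to the full object key;
    the connector's exact matching semantics may differ.
    """
    pattern = re.compile(match_keys) if match_keys else None
    selected = []
    for key in keys:
        if not key.startswith(prefix):
            continue  # outside the configured prefix
        if pattern is not None and pattern.search(key) is None:
            continue  # filtered out by Match Keys
        selected.append(key)
    return selected


if __name__ == "__main__":
    keys = ["sales/2024/a.json", "sales/2024/b.csv", "logs/c.json"]
    print(select_keys(keys, prefix="sales/", match_keys=r"\.json$"))
```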

Step 2: Configure BigQuery as a Destination

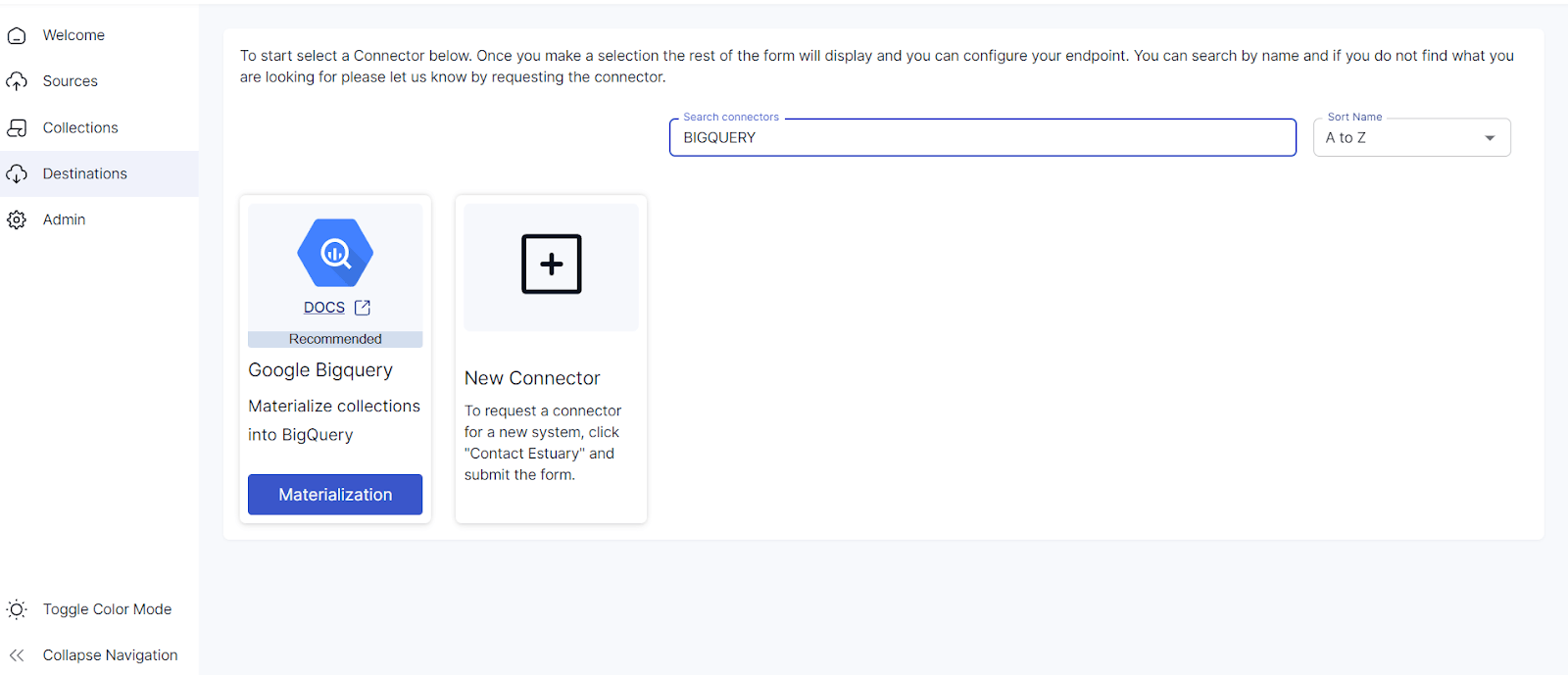

- Go to the Estuary Flow dashboard and click the Destinations tab from the left navigation bar.

- Click the + NEW MATERIALIZATION button on the Destinations page.

- Type BigQuery in the search field and click the Materialization button on the Google BigQuery connector.

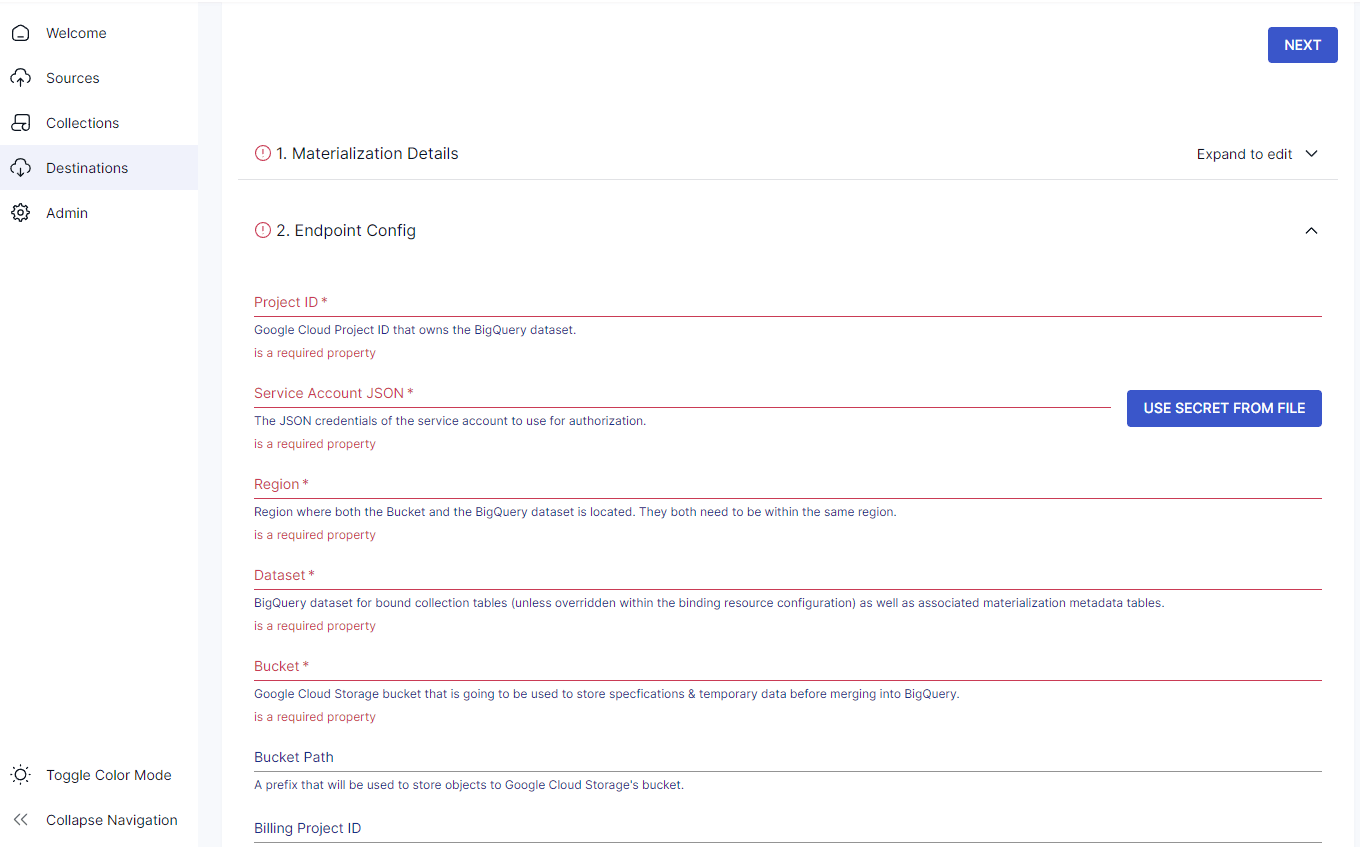

- Provide a Name for the materialization and fill in your destination details, such as Project ID, Service Account JSON, Region, Dataset, and Bucket.

- Once you have filled in the required details, click NEXT. Estuary Flow will connect to your BigQuery account and map the collections from your GCS capture to BigQuery tables. Then click SAVE AND PUBLISH.
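Because the Service Account JSON is pasted into the form as text, a quick local check can catch a truncated or wrong file before you publish. The Python sketch below checks a few fields that Google service account key files contain (`type`, `project_id`, `private_key`, `client_email`). The `REQUIRED_SETTINGS` names simply mirror the form fields listed above and are hypothetical, not Estuary's configuration schema.

```python
import json

# A few fields found in every Google service account key file.
SERVICE_ACCOUNT_FIELDS = ("type", "project_id", "private_key", "client_email")
# Hypothetical names mirroring the destination form fields.
REQUIRED_SETTINGS = ("project_id", "region", "dataset", "bucket")


def check_service_account_json(raw: str) -> list:
    """Return a list of problems found in a service account key (empty if none)."""
    try:
        key = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(key, dict):
        return ["key file must be a JSON object"]
    problems = [f"missing field: {name}" for name in SERVICE_ACCOUNT_FIELDS if name not in key]
    if key.get("type") != "service_account":
        problems.append('expected "type": "service_account"')
    return problems


def check_destination(settings: dict) -> list:
    """Report destination settings that are absent or empty."""
    return [f"missing setting: {name}" for name in REQUIRED_SETTINGS if not settings.get(name)]


if __name__ == "__main__":
    print(check_service_account_json('{"type": "authorized_user"}'))
    print(check_destination({"project_id": "my-project", "region": "US", "dataset": "gcs_landing"}))
```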

Flow will now continuously replicate your Google Cloud Storage data to BigQuery in real time, keeping your data warehouse up to date.

For more information, refer to Estuary Flow’s documentation for the Google Cloud Storage source connector and the Google BigQuery materialization connector.

Benefits of Using Estuary Flow

- Increased Accuracy: Estuary Flow automates the process of extracting data from the source and loading it into the destination. This helps reduce the chances of mistakes or human errors that are common in manual migrations.

- Low Latency: Estuary Flow streams data in real time with millisecond-level latency, so new data in GCS can reach BigQuery within seconds.

- Scalability: Estuary Flow handles increasing data volumes efficiently to accommodate demanding workloads. The platform supports change data capture (CDC) at up to 7 GB/s, regardless of database size.

Conclusion

In this article, we explored two straightforward methods to replicate data from GCS to BigQuery. The first uses the Google Cloud console and the BigQuery Data Transfer Service; the second uses Estuary Flow. Although it involves more manual steps, the first method is a reasonable choice if you only need a one-time migration between the two services.

However, Estuary Flow provides a hassle-free solution compared to the manual approach. With Estuary Flow, you can automate most data integration tasks and move data from GCS to BigQuery in real-time, ensuring your data is readily available for analysis and decision-making.